In recent years, unmanned aerial vehicles (UAVs), commonly known as drones, have advanced in several respects, including hardware technologies, autonomous manoeuvring and computational power. Consequently, drones have become more commercially available. Combined with the growing use of artificial intelligence (AI), this has widened the range of applications for UAVs.

In this project, drones, image processing and object recognition were combined with the aim of creating a personal assistant. Unlike virtual personal assistants (PAs) such as Alexa, Siri or Google Assistant, this assistant would communicate through sign language [1]. Such a PA would benefit the hearing-impaired community, which constitutes over 5% of the world’s population, amounting to 466 million individuals.

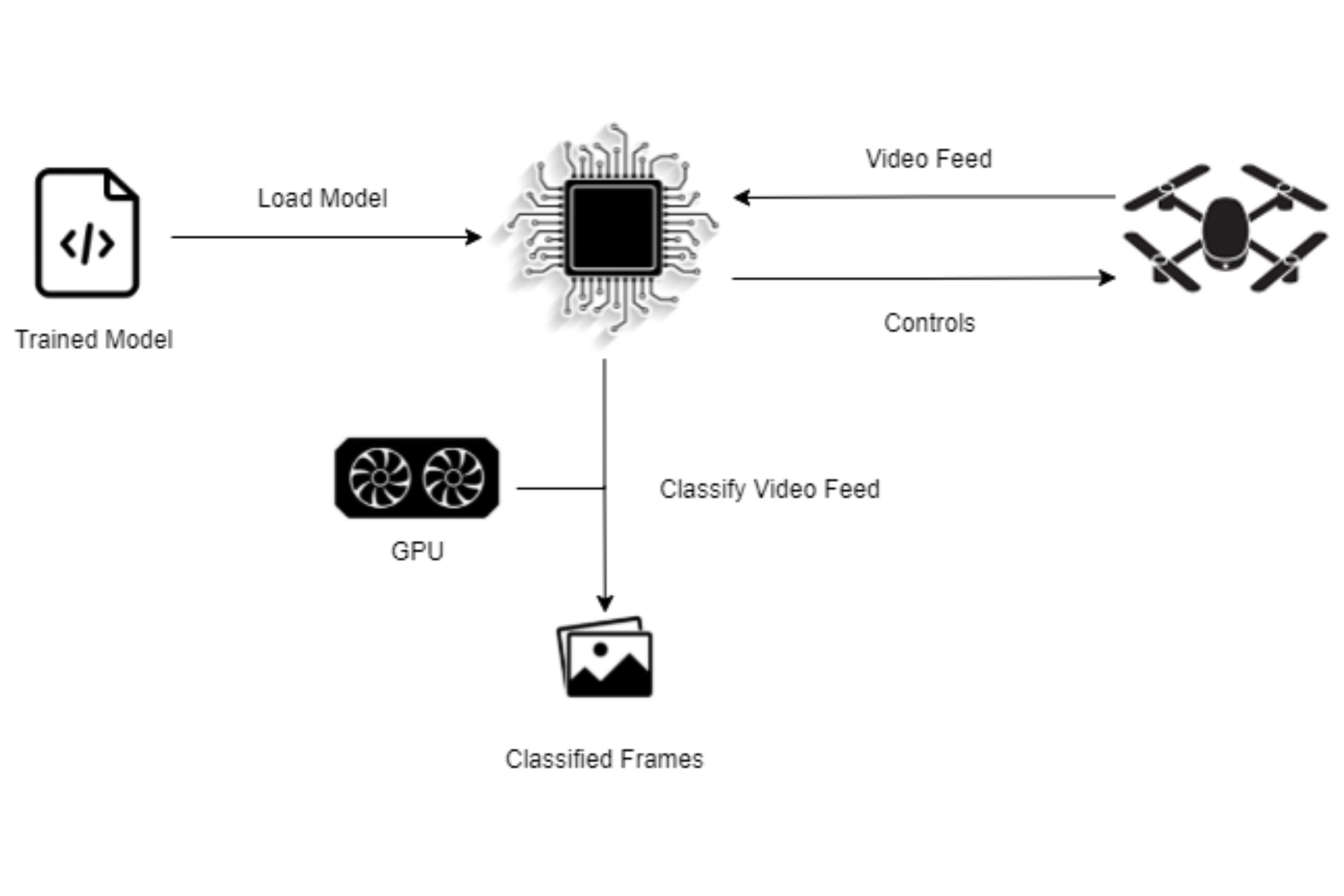

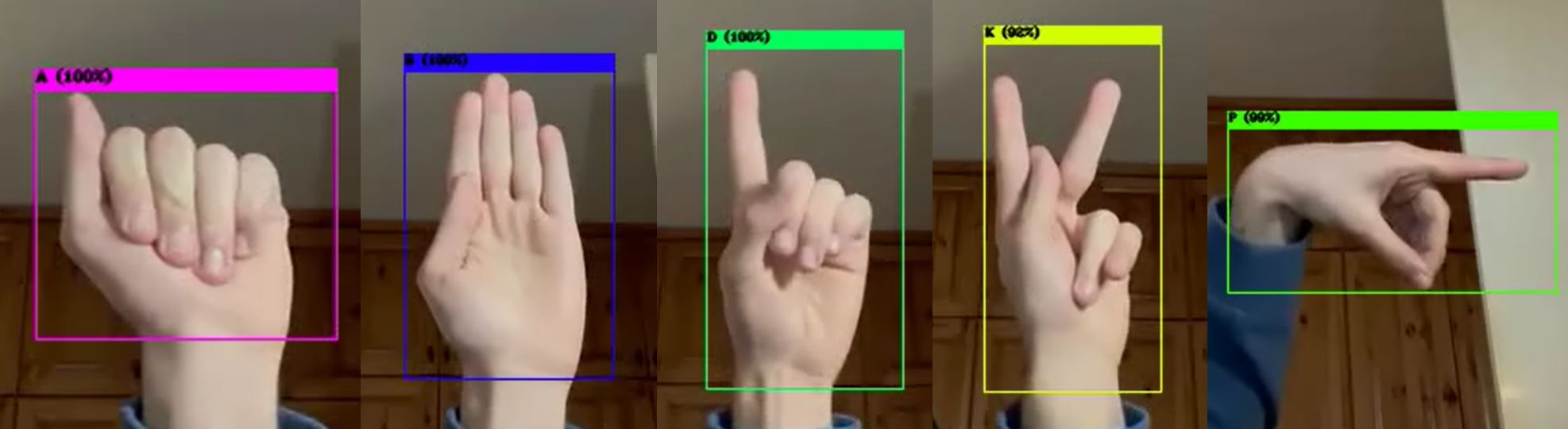

Sign language [2] is a visually transmitted language made up of sign patterns combined to convey a specific meaning. Due to the complexities of capturing and translating sign language digitally, this research domain has not kept pace with the advanced speech recognition available today. Hence, this project attempted to use a drone that follows the user closely and keeps their hand gestures in the camera frame. The project also incorporated object recognition for the characters of American Sign Language (ASL).

In practical terms, the drone would follow the user closely, allowing them to spell out, letter by letter, a word referring to an object. The drone would then pivot while scanning the area for the object sought by the user [3]. Should the object be found, the drone would manoeuvre itself towards it.
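
The spell-then-search behaviour described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's actual implementation: the function names `spell_word` and `find_heading`, the `detect_at` callback, the frame thresholds and the 30-degree step are all hypothetical. It assumes a classifier that returns one ASL letter (or `None`) per camera frame, and an object detector that returns a set of labels for a given heading.

```python
from typing import Callable, Iterable, List, Optional, Set


def spell_word(
    frame_letters: Iterable[Optional[str]],
    window: int = 5,    # assumed: frames a letter must persist to be accepted
    end_gap: int = 10,  # assumed: empty frames that mark the end of the word
) -> str:
    """Collapse noisy per-frame letter predictions into one spelled word."""
    word: List[str] = []
    current: Optional[str] = None
    run = 0            # length of the current streak of identical letters
    gap = 0            # length of the current streak of empty frames
    committed = False  # whether the current streak was already added
    for letter in frame_letters:
        if letter is None:
            gap += 1
            current, run, committed = None, 0, False
            if gap >= end_gap and word:
                break  # the user has stopped signing
            continue
        gap = 0
        if letter == current:
            run += 1
        else:
            current, run, committed = letter, 1, False
        if run >= window and not committed:
            word.append(letter)
            committed = True
    return "".join(word)


def find_heading(
    target: str,
    detect_at: Callable[[int], Set[str]],
    step_deg: int = 30,
) -> Optional[int]:
    """Pivot in fixed steps; return the first heading where the target is seen."""
    for heading in range(0, 360, step_deg):
        if target in detect_at(heading):
            return heading
    return None
```

Requiring a letter to persist for several frames filters out single-frame misclassifications. In this sketch a repeated letter is only registered after a frame with no detected sign, which is a simplification of how double letters are fingerspelled.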

The drone used in this project was a Tello EDU which, despite its limited battery life of around 13 minutes, can be controlled using Python code.
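
As an illustration of Python control, the sketch below builds a pivot-and-scan command sequence and sends it as plain-text UDP commands in the style of the Tello SDK (`command`, `takeoff`, `cw`, `land`, addressed to 192.168.10.1 on port 8889). It is only a sketch: the helper names `scan_commands` and `send_all`, the step size and the timeout are assumptions, and the project may well have used a wrapper library instead of raw sockets.

```python
import socket
from typing import List, Sequence, Tuple

# Default drone address when connected to the Tello's own Wi-Fi network.
TELLO_ADDR: Tuple[str, int] = ("192.168.10.1", 8889)


def scan_commands(step_deg: int = 30) -> List[str]:
    """Build a take-off, full 360-degree pivot, and landing sequence."""
    if not 1 <= step_deg <= 360 or 360 % step_deg != 0:
        raise ValueError("step_deg must divide 360 evenly")
    turns = 360 // step_deg
    # "command" switches the drone into SDK mode before anything else.
    return ["command", "takeoff"] + [f"cw {step_deg}"] * turns + ["land"]


def send_all(
    commands: Sequence[str],
    addr: Tuple[str, int] = TELLO_ADDR,
    timeout: float = 10.0,  # assumed; a missing reply raises socket.timeout
) -> List[str]:
    """Send each command over UDP and wait for the drone's text reply."""
    replies: List[str] = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        for cmd in commands:
            sock.sendto(cmd.encode("ascii"), addr)
            data, _ = sock.recvfrom(1024)
            replies.append(data.decode("ascii", errors="replace").strip())
    return replies
```

With a drone connected, `send_all(scan_commands(30))` would run the full sequence. In a real system an object detector would be run on the video feed between turns, and given the short battery life it would be sensible to query `battery?` before take-off.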

References/Bibliography

[1] A. Menshchikov et al., “Data-Driven Body-Machine Interface for Drone Intuitive Control through Voice and Gestures”. 2019.

[2] C. Lucas and C. Valli, Linguistics of American Sign Language. Google Books, 2000.

[3] N. Tijtgat et al., “Embedded real-time object detection for a UAV warning system”. In: vol. 2018-January. Institute of Electrical and Electronics Engineers Inc., July 2017, pp. 2110–2118.

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Prof. Matthew Montebello