Given its widespread use for data processing and the lower storage costs it promises, cloud computing enjoys considerable interest in a highly connected society. With the ever-increasing volume of data and the extension of data access to remote users, this technology has also become attractive to digital forensic investigators, as it facilitates storing and analysing evidence data from ‘anywhere’.

While highly beneficial to forensic investigators, the nature of the cloud also presents new difficulties. Since data resides on external, distributed servers, it becomes challenging to ensure that the acquired data remains uncompromised. This project investigates whether modern encryption algorithms could be seamlessly integrated into a workable system that secures the data in a database management system (DBMS) and ensures that the evidence is not altered during transfer or while it is stored in the cloud. The encryption algorithms were also evaluated to determine the extent to which they affect the performance of basic update operations.

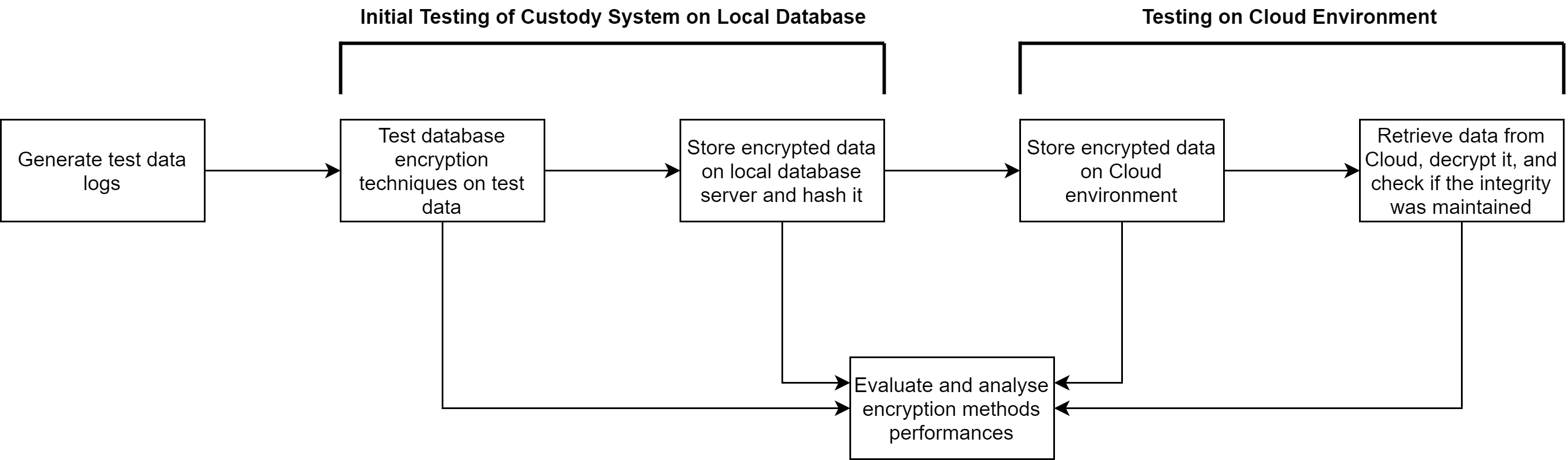

The approach taken was to first generate test data and store it in a custody database. Two databases were built: one encrypted and one unencrypted. The security features used to keep one of the databases secure were: encryption, auditing, role separation, and hashing. Both databases were then hosted in a cloud environment, where their performance was measured during testing. The measurements for the unencrypted database were compared with those for the encrypted version to determine how far encryption affects performance and whether it burdens update operations.
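As an illustration of the hashing measure mentioned above, the following minimal sketch shows how a digest recorded at acquisition could be compared against one recomputed after transfer or storage. SHA-256 and the function names `evidence_digest` and `is_unaltered` are assumptions made for illustration; the hash algorithm actually used in the project is not stated here.

```python
import hashlib
import hmac


def evidence_digest(data: bytes) -> str:
    """Return the SHA-256 digest of an evidence item as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unaltered(data: bytes, recorded_digest: str) -> bool:
    """Check retrieved evidence against the digest recorded at acquisition."""
    # compare_digest performs a constant-time comparison of the two digests.
    return hmac.compare_digest(evidence_digest(data), recorded_digest)


if __name__ == "__main__":
    original = b"disk image bytes (illustrative test data)"
    recorded = evidence_digest(original)  # stored at acquisition time

    print(is_unaltered(original, recorded))         # unchanged copy
    print(is_unaltered(original + b"x", recorded))  # tampered copy
```

Storing the recorded digest separately from the evidence itself would make it harder for a single party to alter both.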

The data was also protected from access by database administrators to mitigate the risk of an insider attack. The ‘Always Encrypted’ feature provided by Microsoft SQL Server was used to encrypt data at rest without revealing the encryption keys to the database engine. This resulted in a separation between those who own the data, and can therefore access it, and those who manage the data but are not granted access.
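For context, a client application typically opts in to Always Encrypted through its database driver, so that encryption and decryption happen on the client side. The sketch below only builds an ODBC connection string carrying the `ColumnEncryption=Enabled` keyword of the Microsoft ODBC Driver for SQL Server. The server and database names, the driver version, and the helper `build_connection_string` are hypothetical and are not taken from the project.

```python
def build_connection_string(server: str, database: str) -> str:
    """Build an ODBC connection string that enables Always Encrypted.

    The server and database values are placeholders (assumptions).
    """
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",
        "Server": server,
        "Database": database,
        "Trusted_Connection": "yes",
        # Ask the driver to encrypt/decrypt protected columns client-side.
        "ColumnEncryption": "Enabled",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


if __name__ == "__main__":
    # Hypothetical server and database names, for illustration only.
    print(build_connection_string("custody-server.example", "CustodyDB"))
```

Such a string would be passed to an ODBC client library. The client also needs access to the column master key, which is what keeps plaintext away from those who only administer the database.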

The custody system must be able to describe the continuum of the evidence, that is: where the evidence was retrieved from, the operations performed on it, proof of adequate handling, and justification for the actions performed on the evidence item. It was crucial to maintain the chain of custody of the digital evidence throughout the investigation by documenting every stage of the forensic process. This was achieved by using SQL Server’s auditing feature, which tracks and logs events that occur in the database. The audits created for the custody system covered: failed login attempts, changes to logins, user changes, schema changes, and audit changes. The logs generated from these events made it possible to maintain evidence provenance, thus helping to answer questions essential to the chain of custody, such as what audit record was generated and when.
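To make the idea of tamper-evident custody records concrete, the sketch below keeps an append-only log in which each entry stores the digest of the previous one. This is not SQL Server's auditing feature, which the project relied on; it is a separate, simplified illustration, and all names in it (`append_entry`, `verify_log`, the entry fields) are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder digest preceding the first entry


def _entry_digest(body: dict) -> str:
    """Hash an entry's fields in a stable (sorted-key) JSON encoding."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(log: list, actor: str, action: str, item_id: str) -> dict:
    """Append a custody entry linked to the digest of the previous one."""
    body = {
        "actor": actor,        # who handled the evidence
        "action": action,      # what was done
        "item_id": item_id,    # which evidence item
        "timestamp": datetime.now(timezone.utc).isoformat(),  # when
        "prev_digest": log[-1]["digest"] if log else GENESIS,
    }
    entry = {**body, "digest": _entry_digest(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return True if every entry's digest and back-link are consistent."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "digest"}
        if entry["prev_digest"] != prev or _entry_digest(body) != entry["digest"]:
            return False
        prev = entry["digest"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, "investigator_a", "acquired", "item-001")
    append_entry(log, "investigator_b", "analysed", "item-001")
    print(verify_log(log))        # intact log
    log[0]["action"] = "deleted"  # simulate tampering with an old entry
    print(verify_log(log))        # tampering is detected
```

A chain of this kind detects edits to earlier entries but not the removal of the most recent ones, so the latest digest would still need to be held somewhere the log's administrator cannot change.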

Furthermore, a clear separation of concerns was required in the custody system to prevent changes to the audit records, whilst also avoiding overlapping functional responsibilities. An explicit forensic role and a corresponding forensic database were created to prevent discretionary violations of administrative functions, such as disabling auditing mechanisms.

Course: B.Sc. IT (Hons.) Software Development

Supervisor: Dr Joseph G. Vella