Machine learning, a very important field in computer science, is used in many scientific domains and in an ever-widening range of human activities. Its main objectives are to enable a machine to learn from past data, to construct accurate predictive models, and to apply these models to problems such as classification. This ability has proven effective in many applications, including healthcare and business [1]. One of the most important factors determining whether a machine learning algorithm succeeds in building a good predictive model is the data available for analysis. Owing to the enormous growth in computer applications and communications, we are now seeing a shift from a limited amount of available data to far more data that can be stored, analysed and processed [2][3][4].

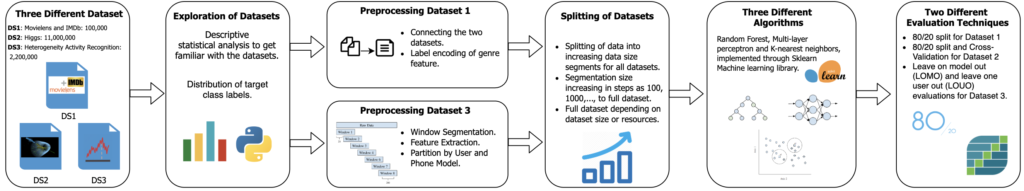

The main aim of this study was to investigate the effect of increasing the size of the dataset given to a machine learning algorithm. This effect was examined through a series of purpose-designed experiments based on a selection of datasets, machine learning algorithms, and preprocessing and evaluation techniques. Each dataset was split into segments of increasing size, and each segment was analysed and evaluated in terms of accuracy, cost and other criteria.
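The segment-based procedure described above can be sketched as a simple learning-curve loop. The following is a minimal, hypothetical Python illustration, not the study's actual code: it assumes a synthetic two-class dataset, uses a nearest-centroid classifier as a stand-in for the algorithms evaluated in the study, and measures cost only as wall-clock training time. The names `make_data`, `fit_nearest_centroid` and `evaluate_segments` are illustrative.

```python
import random
import time


def make_data(n, seed=0):
    """Hypothetical synthetic dataset: two Gaussian classes in 2-D,
    centred at (0, 0) and (1.5, 1.5)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        centre = 0.0 if label == 0 else 1.5
        x = (rng.gauss(centre, 1.0), rng.gauss(centre, 1.0))
        data.append((x, label))
    rng.shuffle(data)
    return data


def fit_nearest_centroid(train):
    """Stand-in learner: store the mean feature vector of each class."""
    sums, counts = {}, {}
    for (x0, x1), y in train:
        s = sums.setdefault(y, [0.0, 0.0])
        s[0] += x0
        s[1] += x1
        counts[y] = counts.get(y, 0) + 1
    return {y: (s[0] / counts[y], s[1] / counts[y]) for y, s in sums.items()}


def predict(model, x):
    # Assign x to the class whose centroid is closest (squared Euclidean distance).
    return min(model, key=lambda y: (x[0] - model[y][0]) ** 2 + (x[1] - model[y][1]) ** 2)


def accuracy(model, test):
    # Fraction of test rows whose predicted label matches the true label.
    return sum(predict(model, x) == y for x, y in test) / len(test)


def evaluate_segments(train, test, sizes):
    """Train on nested prefixes of increasing size; record accuracy and training time."""
    results = []
    for n in sizes:
        segment = train[:n]
        start = time.perf_counter()
        model = fit_nearest_centroid(segment)
        elapsed = time.perf_counter() - start
        results.append((n, accuracy(model, test), elapsed))
    return results


data = make_data(12000)
train, test = data[:10000], data[10000:]
for n, acc, secs in evaluate_segments(train, test, [100, 500, 1000, 5000, 10000]):
    print(f"{n:>6} rows  accuracy={acc:.3f}  train_time={secs:.4f}s")
```

Because each segment is a prefix of the same shuffled training set, the segments are nested, and all of them are scored against the same held-out test set, so differences in accuracy reflect the training size rather than a changing test set.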

Each experiment yielded a range of results that led to a set of conclusions of interest. As expected, the costs (such as the time taken to train the machine learning algorithms and the processing power required) increased with the dataset size. However, the performance of the algorithms did not always improve as the dataset grew.

Furthermore, the choice of machine learning algorithm and of preprocessing and evaluation techniques had an important effect on how performance and costs changed as the dataset size increased. When dealing with a very large dataset, it may therefore be beneficial to start the analysis on a smaller sample and scale up gradually. A satisfactory result may be reached without processing the full dataset, reducing the time and resources the analysis requires.
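One way to scale up from a smaller sample is progressive sampling: train on a small sample, double it, and stop once the accuracy gain falls below a chosen tolerance. The sketch below is an illustration under stated assumptions, not the method used in the study; the one-dimensional synthetic data, the mean-threshold learner, the starting size of 64 and the tolerance of 0.005 are all hypothetical choices, as are the names `make_data`, `train_and_score` and `progressive_sample`.

```python
import random


def make_data(n, seed=1):
    """Hypothetical 1-D dataset with alternating labels:
    class 0 ~ N(0, 1), class 1 ~ N(2, 1)."""
    rng = random.Random(seed)
    return [(rng.gauss(2.0 * y, 1.0), y) for y in (i % 2 for i in range(n))]


def train_and_score(train, test):
    """Stand-in learner: threshold halfway between the two class means.
    Predicts class 1 when x exceeds the threshold (assumes class 1 has the larger mean)."""
    means = []
    for label in (0, 1):
        xs = [x for x, y in train if y == label]
        means.append(sum(xs) / len(xs))
    threshold = (means[0] + means[1]) / 2
    return sum((x > threshold) == bool(y) for x, y in test) / len(test)


def progressive_sample(data, test, start=64, tol=0.005):
    """Double the training size until the accuracy gain drops below tol
    or the full dataset has been used. Returns (size, accuracy) pairs."""
    n = start
    prev = train_and_score(data[:n], test)
    history = [(n, prev)]
    while 2 * n <= len(data):
        n *= 2
        acc = train_and_score(data[:n], test)
        history.append((n, acc))
        if acc - prev < tol:  # gain too small (or negative): stop scaling up
            break
        prev = acc
    return history


data = make_data(100_000)
test = make_data(5_000, seed=2)
for n, acc in progressive_sample(data, test):
    print(f"{n:>6} rows  accuracy={acc:.3f}")
```

The returned history shows where the learning curve flattens; the smaller of the last two sizes is a reasonable candidate for the working sample size, since doubling beyond it brought little benefit.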

References

[1] Nayak, A., & Dutta, K. (2017). Impacts of machine learning and artificial intelligence on mankind. In 2017 International Conference on Intelligent Computing and Control (I2C2), Coimbatore, pp. 1–3.

[2] Dalessandro, B. (2013). Bring the Noise: Embracing Randomness Is the Key to Scaling Up Machine Learning Algorithms. Big Data, 1(2), 110–112.

[3] Joy, M. (2016). Scaling up Machine Learning Algorithms for Large Datasets. International Journal of Science and Research (IJSR), 5(1), 40–43.

[4] Yousef, W. A., & Kundu, S. (2014). Learning algorithms may perform worse with increasing training set size: Algorithm-data incompatibility. Computational Statistics and Data Analysis, 74, 181–197.

Student: Clayton Agius

Supervisor: Dr Michel Camilleri

Co-Supervisor: Mr Joseph Bonello

Course: B.Sc. IT (Hons.) Software Development