The Fourth Industrial Revolution (or Industry 4.0) has seen widespread adoption of the Internet of Things (IoT) concept by various industries attempting to optimise logistics and advance supply chain management. These large-scale systems, capable of gathering and analysing vast amounts of data, have come to be known as the industrial internet of things (IIoT).

This study aims to create a scalable tangible-resource allocation tool capable of learning and forecasting resource distributions and handling allocation biases caused by preemptable events. Furthermore, the system should be capable of proposing suitable reallocation strategies across a predefined number of locations.

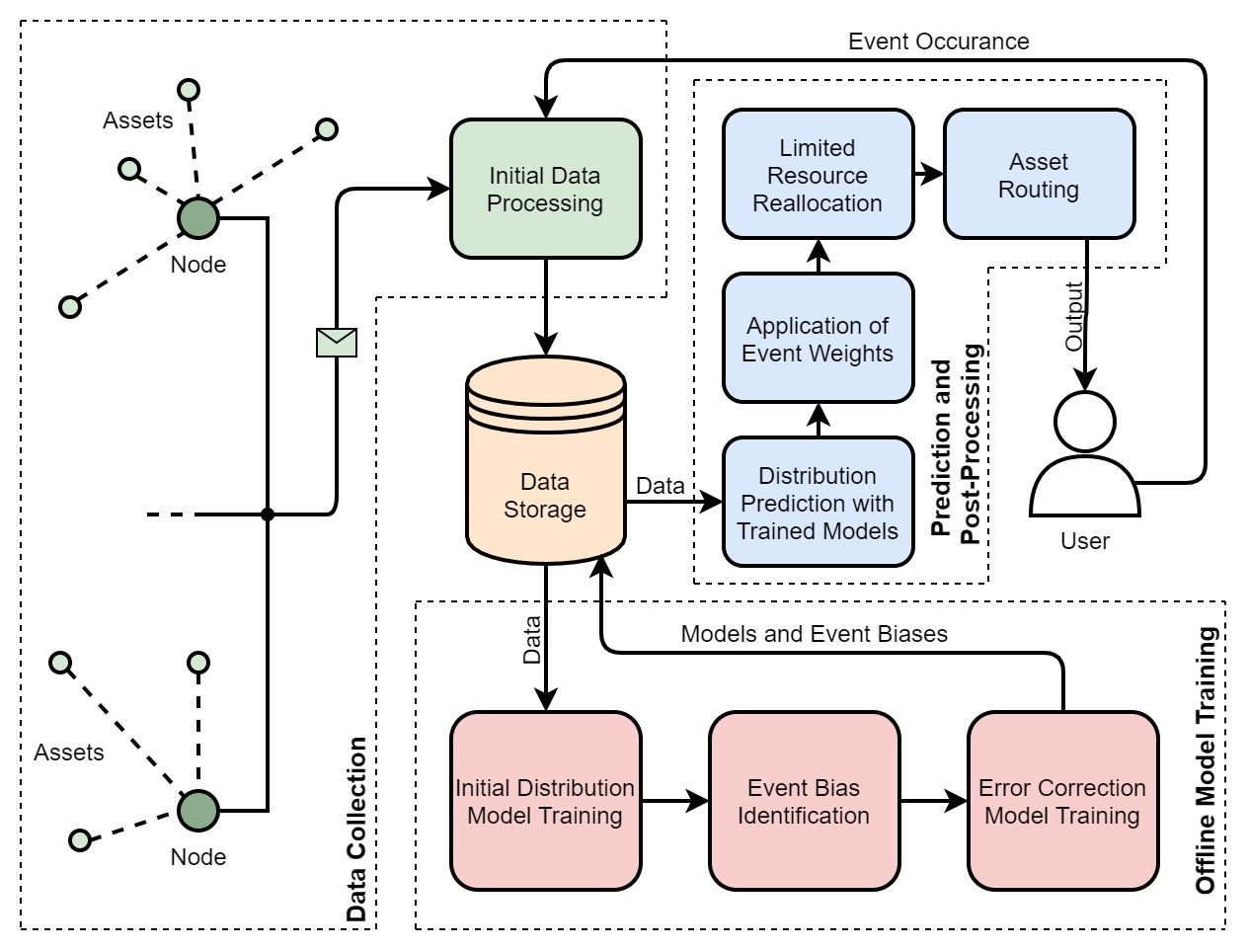

The physical framework would assume the form of a low-cost resource-tracking platform similar to that seen in the data-collection component of the accompanying diagram. At each location, a node would keep track of the number of items available and items in use, with data being sent periodically to a central server, together with any registered events. However, building such a platform lies beyond the scope of the study and was not pursued further.

In this study, real-world datasets and generated synthetic data stood in for the data-collection component of the underlying framework and were used to evaluate the system’s performance.

In the predictive resource-allocation space, models based on long short-term memory (LSTM) [1] and, more recently, gated recurrent units (GRU) [2] have become popular in state-of-the-art implementations.

The core implementation proposed in this research investigates a cascaded dual-model approach. Taking raw data as input, the first model was trained to generate an initial resource-requirement forecast for each of the predefined locations, assuming spatial dependency. The second model was then trained to predict the error between the distribution predicted by the first model and the true values. Using varied combinations of bi-directional LSTM and GRU layers, the cascaded dual-model approach indicated significant improvements over standard LSTM and GRU implementations in initial evaluations. The system would then adjust the predicted distributions for intervals in which relevant preemptable events, for which sample data is limited and no time-series correlation can be identified, could cause marked variation in the data. Depending on the application space, such events could include concerts, public holidays or an influx of patients from a major accident.
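The cascading idea can be illustrated with a minimal sketch, in which a second stage learns the error of the first and the two outputs are summed. This is not the implementation used in the study: a moving average and a mean-error correction stand in for the bi-directional LSTM and GRU models, each location is treated independently (so spatial dependency is not modelled), and all function names and the `window` parameter are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence


def first_stage(history: Sequence[float], window: int = 3) -> float:
    """Stage 1 stand-in: forecast the next value as the mean of the
    last `window` observations (needs at least one observation)."""
    return mean(history[-window:])


def fit_error_model(series: Sequence[float], window: int = 3) -> float:
    """Stage 2 stand-in: learn the average error that the first stage
    makes when replayed over the training series."""
    errors = [
        series[t] - first_stage(series[:t], window)
        for t in range(window, len(series))
    ]
    # With too little data there is nothing to learn, so apply no correction.
    return mean(errors) if errors else 0.0


def cascaded_forecast(series: Sequence[float], window: int = 3) -> float:
    """One-step lookahead: first-stage forecast plus predicted error."""
    return first_stage(series, window) + fit_error_model(series, window)


def forecast_all(demand: Dict[str, List[float]], window: int = 3) -> Dict[str, float]:
    """Apply the cascade to every location (hypothetical location keys)."""
    return {loc: cascaded_forecast(s, window) for loc, s in demand.items()}
```

On a steadily rising series the moving average lags behind the true values by a constant amount, which is the kind of systematic error the second stage is meant to absorb.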

Although the trained models could be extended to generate long-term allocation forecasts, the proposed system was evaluated on short-term predictions generated using a one-step lookahead. Once the event biases affecting the initial prediction had been handled, further post-processing would adjust the final allocation across the various nodes according to the forecasted requirements, the importance of each location and the limited number of resources available. Using a priority-based double-standard repositioning model [3], the system would then suggest a resource-relocation strategy. This approach preemptively moves resources in line with the final prediction. Should the number of resources available at prediction time not be enough to satisfy the forecasted allocation requirement for each node, the system would maintain a queue for moving resources as soon as they become available.
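The priority ordering and the queue for unmet requirements can be sketched as follows. This is a simplified greedy allocation, not the double-standard model of [3]; the `Node` fields, the function names and the convention that a lower `priority` value means a more important location are assumptions made for illustration.

```python
from collections import deque
from typing import Deque, Dict, Iterable, NamedTuple, Tuple


class Node(NamedTuple):
    name: str
    priority: int  # assumption: lower value = more important location
    forecast: int  # forecasted resource requirement for the next interval


def allocate(nodes: Iterable[Node], available: int) -> Tuple[Dict[str, int], Deque[Tuple[str, int]]]:
    """Serve nodes in priority order; queue any shortfall for later."""
    allocation: Dict[str, int] = {}
    pending: Deque[Tuple[str, int]] = deque()
    for node in sorted(nodes, key=lambda n: n.priority):
        granted = min(node.forecast, available)
        allocation[node.name] = granted
        available -= granted
        shortfall = node.forecast - granted
        if shortfall > 0:
            pending.append((node.name, shortfall))
    return allocation, pending


def release(pending: Deque[Tuple[str, int]], allocation: Dict[str, int], freed: int) -> int:
    """Move newly freed resources to queued nodes, highest priority first.
    Returns the number of resources left over once the queue is served."""
    while freed > 0 and pending:
        name, shortfall = pending.popleft()
        moved = min(shortfall, freed)
        allocation[name] += moved
        freed -= moved
        if shortfall > moved:
            # Still short: keep the node at the front of the queue.
            pending.appendleft((name, shortfall - moved))
    return freed
```

Calling `allocate` with the forecasts and the current stock yields the suggested distribution plus the queue of outstanding shortfalls; `release` would then be called each time resources become available.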

References/Bibliography

[1] S. Hochreiter and J. Schmidhuber, ‘Long Short-Term Memory’, Neural Computation, vol. 9, no. 8, pp. 1735–1780, Nov. 1997, doi: 10.1162/neco.1997.9.8.1735.

[2] K. Cho et al., ‘Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation’, arXiv:1406.1078 [cs, stat], Sep. 2014, Accessed: May 10, 2021. [Online]. Available: http://arxiv.org/abs/1406.1078

[3] V. Bélanger, Y. Kergosien, A. Ruiz, and P. Soriano, ‘An empirical comparison of relocation strategies in real-time ambulance fleet

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Prof. Matthew Montebello