The aim of this research project was to develop a deeper understanding of hand-gesture recognition for the manipulation of holographic objects, drawing on human-computer interaction (HCI) to deliver an augmented user experience. Such a system could have a wide range of uses across various fields and domains, such as cultural heritage and museum visits.

Virtual reality (VR) and augmented reality (AR) systems are already being considered as a means of enhancing the museum experience, and holographic displays are also being used to create immersive user experiences. However, without interaction, the novelty of a display would soon wear off, resulting in a limited user experience. Hand-gesture recognition for holographic object manipulation is an emerging research field in artificial intelligence (AI) that employs novel computer-vision techniques and technologies, such as machine learning algorithms, deep learning and neural networks (NNs), feature extraction from images, and intelligent interfaces.

By evaluating existing hand-gesture recognition techniques and determining the optimal method, a highly efficient and accurate system could be produced to achieve the goal of a more immersive and interactive user experience. This study therefore set out to take a new approach to HCI, one in which the interaction feels very natural and which points towards a new way for society to plan both museums and educational sites.

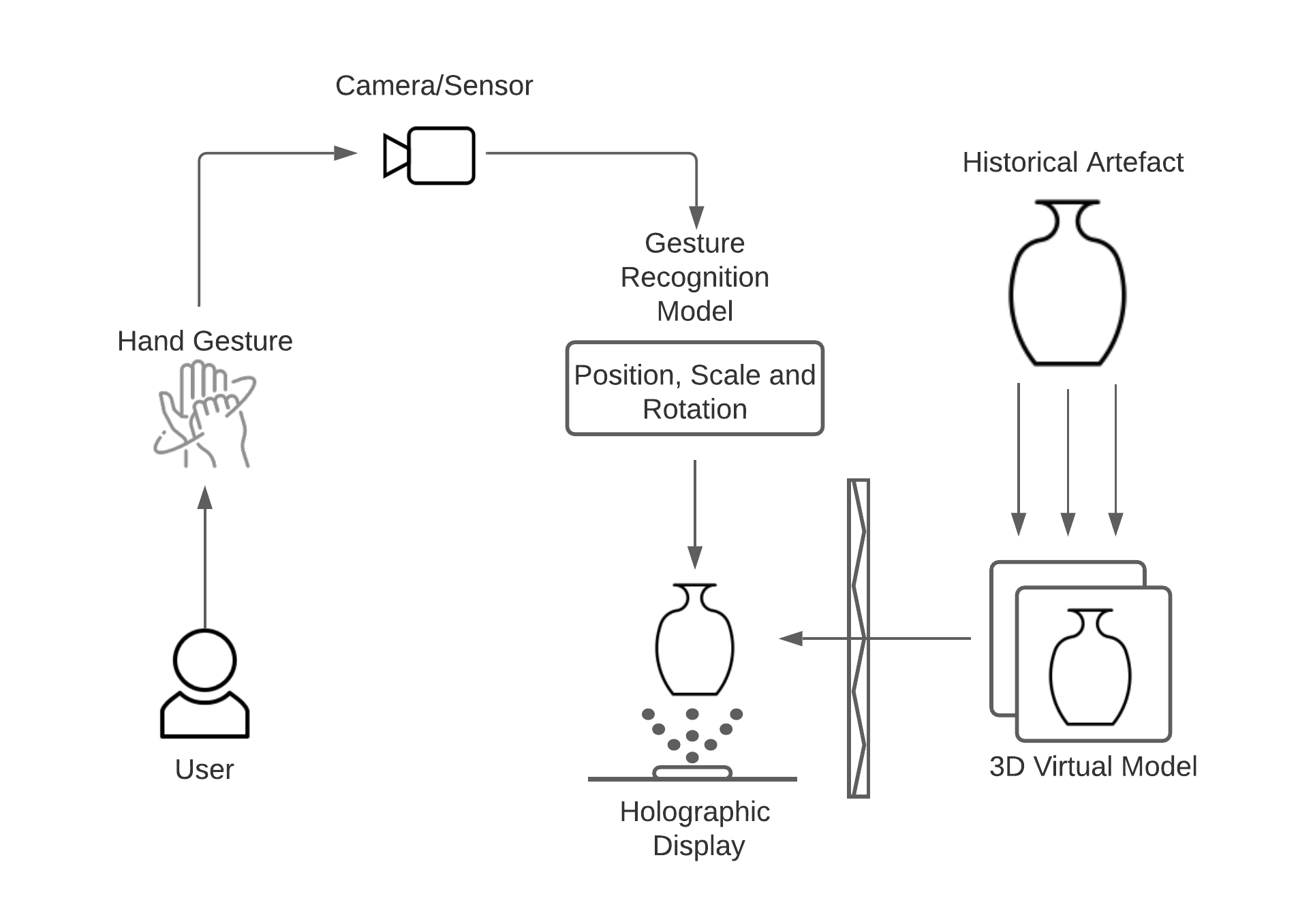

For this project, hand gestures were captured by a camera using the hand-in-frame perspective, and each captured frame was passed on to the next stage of the system. The features of the hand in frame were then extracted and used to predict which hand gesture was being shown. To make this prediction possible, a dataset was designed from scratch, containing samples that varied in distance from the camera, hand size and other physical features. This dataset was then used to train the NN, with the samples fed in as normalised coordinates representing the hand position in each captured frame. The NN contained a number of hidden layers that were involved in the training process, resulting in a hand-gesture prediction model. The final NN layer output a normalised level of confidence for each possible gesture, and a prediction was made only if the highest confidence level was above a predefined threshold.
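The thresholded prediction step described above can be illustrated with a minimal sketch. It is not the project's implementation: the gesture labels, the threshold value, the bounding-box normalisation and the use of a softmax to obtain normalised confidences are all assumptions made for illustration.

```python
import math

# Hypothetical gesture labels and threshold; the project's actual values are not stated.
GESTURES = ["move", "rotate", "zoom"]
CONFIDENCE_THRESHOLD = 0.8


def normalise_landmarks(points):
    """Scale (x, y) hand points into [0, 1] relative to their bounding box.

    Assumed normalisation scheme: dividing by the larger side of the box
    keeps the hand's proportions while removing the effect of hand size
    and distance from the camera.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0  # avoid division by zero
    return [((x - min_x) / span, (y - min_y) / span) for x, y in points]


def softmax(scores):
    """Turn raw output scores into confidences that sum to 1 (assumed output layer)."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_gesture(scores, threshold=CONFIDENCE_THRESHOLD):
    """Return (label, confidence), with label None if confidence is not above the threshold."""
    confidences = softmax(scores)
    best = max(range(len(confidences)), key=confidences.__getitem__)
    if confidences[best] > threshold:
        return GESTURES[best], confidences[best]
    return None, confidences[best]
```

Rejecting low-confidence frames in this way means that an ambiguous hand pose produces no command at all rather than an unintended one.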

The hand gesture is used to manipulate the 3D model of the historical site or artefact shown as a hologram, leading to enhanced user interaction. Such interactions may include lateral shifts, rotations, and zooming in and out, all of which depend on the hand gesture itself and the direction of motion of the hand. The result is rendered in real time, giving the user immediate and visible feedback.
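As a rough illustration of how a recognised gesture and the direction of hand motion might be combined into a model transformation, consider the sketch below. The `ModelState` fields, the gesture names, the rotation gain and the minimum scale are hypothetical and are not taken from the project.

```python
from dataclasses import dataclass, replace

ROTATION_GAIN_DEGREES = 180.0  # hypothetical: degrees of yaw per unit of horizontal motion
MIN_SCALE = 0.1                # hypothetical lower bound so the model never vanishes


@dataclass
class ModelState:
    """Hypothetical pose of the 3D model shown as a hologram."""
    x: float = 0.0
    y: float = 0.0
    yaw_degrees: float = 0.0
    scale: float = 1.0


def apply_gesture(state, gesture, dx, dy):
    """Return a new state after applying a gesture with hand motion (dx, dy).

    dx and dy are the change in normalised hand position between frames.
    A gesture of None (no confident prediction) leaves the state unchanged.
    """
    if gesture == "move":      # lateral shift follows the hand
        return replace(state, x=state.x + dx, y=state.y + dy)
    if gesture == "rotate":    # horizontal motion turns the model
        yaw = (state.yaw_degrees + dx * ROTATION_GAIN_DEGREES) % 360.0
        return replace(state, yaw_degrees=yaw)
    if gesture == "zoom":      # vertical motion scales the model
        return replace(state, scale=max(MIN_SCALE, state.scale * (1.0 + dy)))
    return state
```

Calling `apply_gesture` once per captured frame and re-rendering the returned state would give the kind of immediate feedback described above.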

The experimental results indicated that, after undergoing several training stages, the model could accurately and effectively translate designated hand gestures into functional commands to be implemented by the system. The training data for the NN model covered a wide variety of hand sizes, proportions and other physical features. This ensured that the model would not be limited to responding to the hands of specific users, making the entire system accessible to anyone wishing to use it.

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr Vanessa Camilleri

Co-supervisor: Mr Dylan Seychell