Trading on the stock market is a risky endeavour, with most inexperienced traders making a loss on their investment. A good trading strategy plays a critical role when investing, but designing a profitable strategy in such a complex environment is not easy. Hence, this work investigated the use of reinforcement learning (RL) for producing reliable, profitable strategies for trading assets.

RL is among the most popular artificial intelligence (AI) approaches being adopted today. An RL agent learns by interacting with an environment and receiving a reward for each action it takes, gradually building a strategy (a policy) that chooses an action for each situation it encounters. RL agents can generalise to scenarios they did not see during training, which gives them a potential advantage over other algorithms when trading stocks.

This project made use of five state-of-the-art RL algorithms: Advantage Actor-Critic (A2C), Proximal Policy Optimization (PPO), Deep Deterministic Policy Gradient (DDPG), Twin Delayed Deep Deterministic Policy Gradient (TD3), and Soft Actor-Critic (SAC). To ensure that each algorithm performed optimally, the Optuna Python package was used for hyperparameter optimisation. This process tuned the algorithms to maximise two metrics: the ratio of profitable to non-profitable trades, and the Sharpe ratio. The latter evaluates the risk-adjusted performance of an investment strategy by dividing its excess return over a risk-free investment by the volatility (standard deviation) of its returns.
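The two tuning objectives can be illustrated with a short Python sketch. It assumes daily returns annualised over 252 trading days and counts break-even trades as non-profitable; both choices, and the function names `sharpe_ratio` and `win_loss_ratio`, are illustrative assumptions rather than details of the project's implementation.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=252):
    """Annualised Sharpe ratio of a series of periodic (e.g. daily) returns.

    risk_free_rate is the per-period risk-free return. periods_per_year=252
    assumes daily returns on trading days (an assumption for illustration).
    Requires at least two returns, since the sample standard deviation is used.
    """
    excess = [r - risk_free_rate for r in returns]
    volatility = stdev(excess)  # sample standard deviation of excess returns
    if volatility == 0:
        return 0.0  # undefined for a constant series; treated here as no edge
    return math.sqrt(periods_per_year) * mean(excess) / volatility


def win_loss_ratio(trade_profits):
    """Ratio of profitable to non-profitable trades (break-even counts as a loss)."""
    wins = sum(1 for p in trade_profits if p > 0)
    losses = sum(1 for p in trade_profits if p <= 0)
    return wins / losses if losses else float("inf")
```

In Optuna, a multi-objective study created with `optuna.create_study(directions=["maximize", "maximize"])` could maximise both values at once, with the objective function returning them as a tuple; this is one possible setup, not necessarily the one used in the project.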

The process of training the RL algorithms incorporated another machine learning technique: principal component analysis (PCA). PCA is a dimensionality reduction algorithm, and it was used here to reduce the number of input features. Applying PCA to 18 distinct technical indicators condensed them into just 6 principal components, significantly reducing the number of inputs used for training while retaining most of the information they contained. The technical indicators were calculated for 29 publicly traded companies from 2010 to 2021, with the period from 2017 to the end of 2021 reserved for backtesting.
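The reduction step can be sketched in dependency-free Python. The sketch standardises each indicator, forms the covariance matrix and extracts the leading principal components by power iteration with deflation; in practice a library routine such as scikit-learn's `PCA(n_components=6)` would normally be used instead. The helper names are hypothetical, and the rows are assumed to hold one observation per time step with one column per indicator.

```python
import math
import random
from statistics import mean, stdev


def standardise(rows):
    """Scale each column (indicator) to zero mean and unit variance."""
    cols = list(zip(*rows))
    means = [mean(c) for c in cols]
    stds = [stdev(c) or 1.0 for c in cols]  # guard against constant columns
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def covariance(rows):
    """Sample covariance matrix of already-centred data."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]


def principal_components(rows, k, iters=500, seed=0):
    """Top-k covariance eigenvectors and the share of variance each explains."""
    cov = covariance(standardise(rows))
    d = len(cov)
    total_variance = sum(cov[i][i] for i in range(d))
    rng = random.Random(seed)
    components, ratios = [], []
    for _ in range(k):
        v = [rng.gauss(0, 1) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):  # power iteration: repeatedly apply cov to v
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-15:  # remaining variance is (numerically) zero
                break
            v = [x / norm for x in w]
        # Rayleigh quotient: variance of the data along direction v
        eigval = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        ratios.append(eigval / total_variance)
        # Hotelling deflation: remove this component before finding the next one
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return components, ratios


def project(rows, components):
    """Map each standardised row onto the principal components."""
    return [[sum(x * c for x, c in zip(row, comp)) for comp in components]
            for row in standardise(rows)]
```

With the 18 indicators as columns, `principal_components(rows, k=6)` would yield 6 components, and the returned ratios show what fraction of the total variance each component keeps, which is how the information retained by the reduction can be checked.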

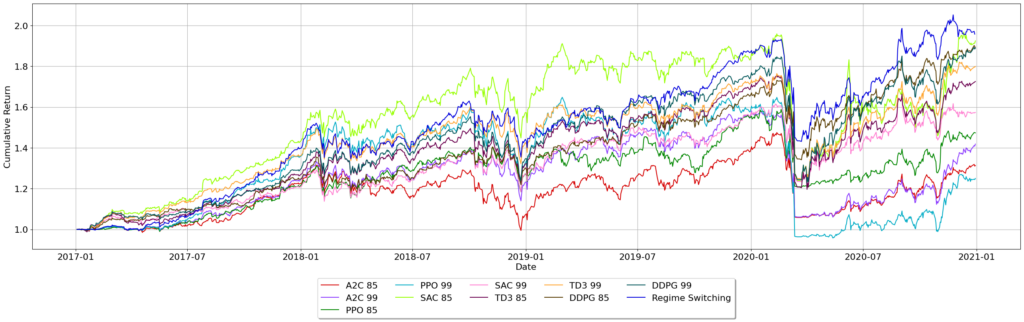

Each algorithm used in this project works slightly differently, making each one better suited to modelling certain market situations than others. For this reason, this project employed a regime-switching approach, whereby the algorithm used at any given point in time was selected according to an evaluation criterion, namely the Sharpe ratio. At the end of each fixed window, all the algorithms were retrained and re-evaluated, making it possible to switch to whichever algorithm best suited the prevailing market conditions. The accompanying graph compares the performance of this approach with that of each individual algorithm. As shown, the regime-switching approach tended to outperform the other algorithms, with an increase in cumulative returns of 7.6% over the second-best performing model.
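The selection rule can be sketched as follows, assuming each agent has already been retrained and its returns over a validation window recorded, and that the agent with the highest Sharpe ratio is chosen for the next trading window. The `windows` data structure and the function names are hypothetical, and retraining itself is not shown. `cumulative_return` compounds per-period returns, which is the usual way cumulative returns such as those in the graph are computed.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, periods_per_year=252):
    """Annualised Sharpe ratio, assuming a zero risk-free rate for simplicity."""
    vol = stdev(returns)
    return 0.0 if vol == 0 else math.sqrt(periods_per_year) * mean(returns) / vol


def regime_switching_schedule(windows):
    """Pick, for each window, the agent with the best validation Sharpe ratio.

    windows: list of dicts mapping an agent name to its list of returns over
    that window's validation period (a hypothetical structure). Returns the
    name of the agent to trade with in each following window.
    """
    schedule = []
    for validation in windows:
        scores = {name: sharpe_ratio(rets) for name, rets in validation.items()}
        schedule.append(max(scores, key=scores.get))
    return schedule


def cumulative_return(returns):
    """Compound per-period returns into a single cumulative return."""
    growth = 1.0
    for r in returns:
        growth *= 1 + r
    return growth - 1
```

For example, if A2C had the steadier validation returns in the first window and PPO in the second, the schedule would be `["A2C", "PPO"]`, and the returns earned by the selected agent in each window would be stitched together before compounding.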

Lastly, the developed trading strategy was incorporated into an automated trading bot. This was done by connecting the strategy to an online brokerage, so that the decisions it made were translated into buy and sell requests. This final model achieved a cumulative return of 95.7% over the five-year out-of-sample period from 2017 to 2021.
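The translation from agent decisions to buy and sell requests might look like the sketch below. It assumes, hypothetically, that the agent outputs one continuous action in [-1, 1] per stock, scaled by a maximum trade size `max_shares`, with negative values meaning sell and no short selling allowed. The `Order` type and `actions_to_orders` function are illustrative, and submitting the orders through a real brokerage API is not shown.

```python
from dataclasses import dataclass


@dataclass
class Order:
    symbol: str
    side: str      # "buy" or "sell"
    quantity: int  # number of shares


def actions_to_orders(actions, holdings, max_shares=100):
    """Convert continuous agent actions into share orders (hypothetical scheme).

    actions: dict of symbol -> action in [-1, 1]; holdings: dict of symbol ->
    shares currently held. Sells are capped at current holdings (no shorting).
    """
    orders = []
    for symbol, action in actions.items():
        action = max(-1.0, min(1.0, action))  # clip to the valid action range
        qty = int(action * max_shares)        # truncate toward zero
        if qty > 0:
            orders.append(Order(symbol, "buy", qty))
        elif qty < 0:
            sell = min(-qty, holdings.get(symbol, 0))
            if sell > 0:
                orders.append(Order(symbol, "sell", sell))
    return orders
```

Actions too small to amount to a whole share produce no order, which keeps the bot from sending negligible requests to the brokerage.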

Figure 1. Cumulative returns of the regime-switching approach and the individual algorithms over the period from 2017 to the end of 2021.

Student: Max Matthew Camilleri

Supervisor: Dr Josef Bajada