Light field imaging has become a popular research area in computer vision due to the increasing accessibility of both the hardware and the software used for capture and rendering. The modern light field representation consists of four dimensions: two spatial and two angular. The 2D angular dimensions describe the direction of the received light rays, while the 2D spatial dimensions index where each ray meets the image plane, as in a conventional image. Alternatively, a light field can be understood as multiple views of the same scene taken from different viewpoints. Compared to traditional photography, the added angular information enables several applications, such as volumetric photography, video stabilisation, 3D reconstruction, refocusing, object tracking, and segmentation. Until recently, capturing light fields was difficult and cost-prohibitive because it required multiple stacked cameras. Technological advancements have led to the plenoptic camera, which places a micro-lens array between the sensor and the main lens of a traditional camera to capture the individual light rays. This progress has led to the commercialisation of light fields, with industrial hardware solutions being used for microscopy, video capture, and high-resolution imagery [1].

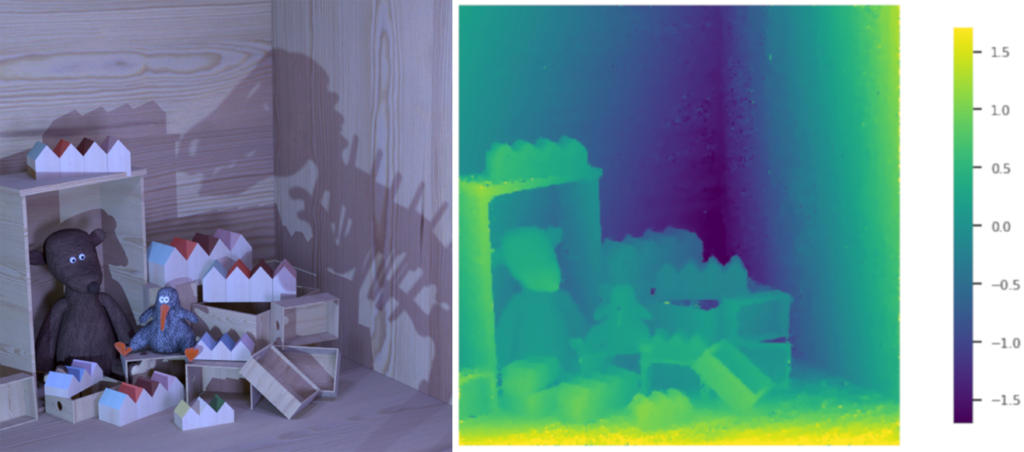

Most of the applications offered by light fields depend on extracting depth information. Despite the technological progress, several difficulties remain. Capturing the additional viewpoints comes at the cost of image quality, since each view has a lower resolution. Additionally, the increased dimensionality makes processing the data significantly more computationally complex. This project aims to use the epipolar geometry of light fields to obtain the depth information. The disparity between views depends on the depth of the object: it is minimal if the object is far from the observer and significant if the object is close [2]. By stacking the same horizontal line taken from each of the horizontal views, or the same vertical line taken from each of the vertical views, one can see the movement of objects across the views as lines. The depth information can then be extracted from the angle that these lines make with the axis.
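The line-stacking idea can be illustrated with a minimal sketch. It is not the method used in this project; it assumes the light field is stored as a NumPy array of shape `(V, U, Y, X)` (vertical view, horizontal view, image row, image column), and the function names `horizontal_epi` and `epi_disparity` are hypothetical. The slope of the lines is estimated with a simple least-squares fit on image gradients, which is only reasonable for small disparities and textured regions.

```python
import numpy as np


def horizontal_epi(light_field, v, y):
    """Stack image row `y` from every horizontal view at vertical view `v`.

    Assumes `light_field` has shape (V, U, Y, X). The result has shape
    (U, X): one image row per horizontal view.
    """
    return light_field[v, :, y, :]


def epi_disparity(epi):
    """Estimate the disparity (pixel shift per view) for each EPI column.

    A scene point with disparity d traces the line x = x0 + d * u, so the
    intensity satisfies dI/du = -d * dI/dx along that line. Solving this in
    the least-squares sense over the view axis gives one value per column.
    """
    grad_u, grad_x = np.gradient(epi.astype(float))
    numerator = -(grad_u * grad_x).sum(axis=0)
    denominator = (grad_x ** 2).sum(axis=0) + 1e-12  # avoid division by zero
    return numerator / denominator
```

The angle of a line relative to the view axis follows as `np.arctan(disparity)`. Converting disparity to metric depth additionally needs the camera baseline and focal length, which are not covered here.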

The goal is to obtain more accurate depth estimates around the edges of objects placed at different depths. The approach rests on the assumption that vertical edges are better defined when considering the vertical views, and horizontal edges when considering the horizontal views, since other objects do not occlude them. The results are evaluated on the HCI benchmark [3], which provides realistic synthetic scenes along with their ground-truth depth, as well as several metrics designed for light field depth estimation algorithms.
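As a rough illustration of how an estimate can be scored against ground truth, the sketch below computes two error measures of the kind reported on the benchmark: the mean squared error scaled by 100, and the percentage of pixels whose absolute error exceeds a threshold. The function names, the optional `mask` argument, and the default threshold of 0.07 are assumptions made for this example and are not taken from the benchmark's own evaluation code.

```python
import numpy as np


def mse_x100(estimate, ground_truth, mask=None):
    """Mean squared error multiplied by 100, optionally over a boolean mask."""
    squared_error = (estimate - ground_truth) ** 2
    if mask is not None:
        squared_error = squared_error[mask]
    return 100.0 * squared_error.mean()


def bad_pix(estimate, ground_truth, threshold=0.07, mask=None):
    """Percentage of pixels whose absolute error exceeds `threshold`."""
    bad = np.abs(estimate - ground_truth) > threshold
    if mask is not None:
        bad = bad[mask]
    return 100.0 * bad.mean()
```

Passing a mask that selects only pixels near depth discontinuities would restrict both scores to object edges, which is the region this project targets.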

References

[1] Raytrix. [Online]. Available: https://raytrix.de/.

[2] R. C. Bolles, H. H. Baker and D. H. Marimont, “Epipolar-plane image analysis: An approach to determining structure from motion,” in International Journal of Computer Vision, 1987, pp. 1-7.

[3] K. Honauer, O. Johannsen, D. Kondermann and B. Goldluecke, “A Dataset and Evaluation Methodology for Depth Estimation on 4D Light Fields,” in Asian Conference on Computer Vision, Springer, 2016.

Student: Roderick Micallef

Supervisor: Dr Inġ. Reuben Farrugia

Course: B.Sc. (Hons.) Computing Science