Nowadays, automation using artificial intelligence is being introduced across a wide spectrum, from households to large enterprises. Analysing human emotion from facial expressions may seem an easy task for human beings, yet even human judgements carry uncertainty and can be entirely subjective in some cases. Identifying human emotions automatically is harder still and is a well-known problem in computer vision: automatic emotion recognition has yet to reach the performance levels of other computer vision recognition and classification tasks, such as face, age and gender recognition.

Several research efforts have aimed to obtain good emotion recognition performance in unposed environments [1], [2], [3]. Despite these works, emotion recognition remains an open research problem, as it has not yet reached the accuracy of other classification tasks. The challenge lies in the quantity and quality of the data needed to train such deep learning architectures. This report describes an emotion recognition model that uses artificial intelligence to classify emotions from static facial images, allowing fundamental facial expressions to be classified automatically by a machine.

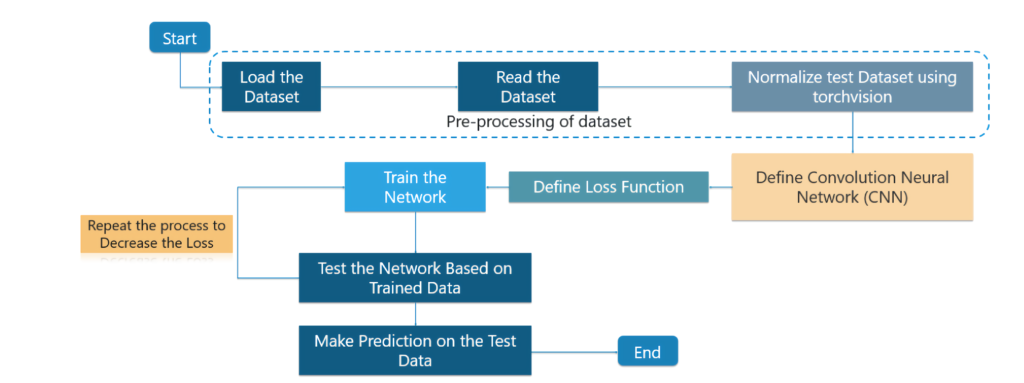

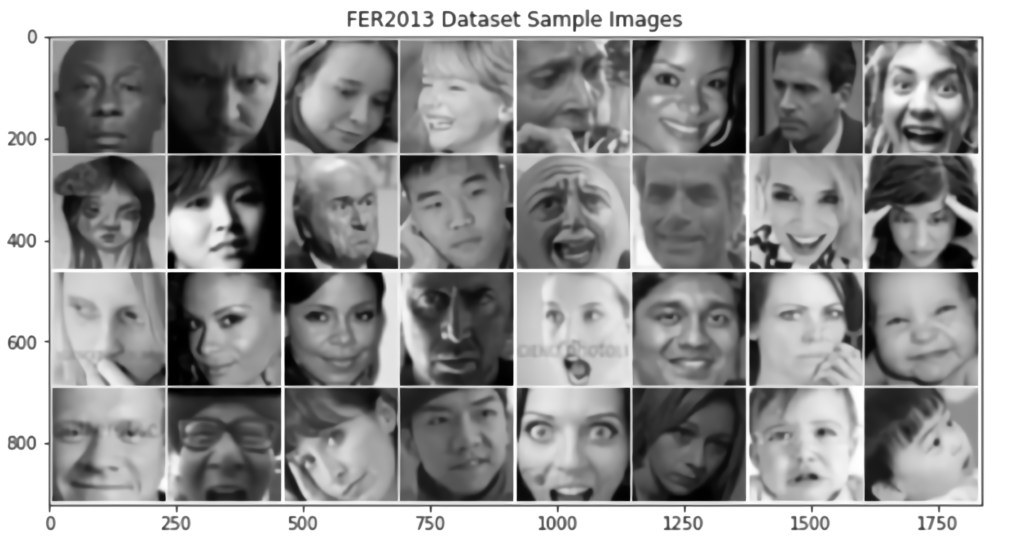

In this work, two Convolutional Neural Network (CNN) architectures, VGG-Face and ResNet-18, were trained using transfer learning to recognise emotion from static images. Both architectures were evaluated on two datasets that pose different challenges, AffectNet [4] and FER2013 [5]. The main aim was to investigate the primary challenges of this research problem in more detail and to implement a deep learning architecture for emotion classification.
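As a hedged illustration of the transfer learning idea, and not the training configuration used in this work, the sketch below keeps a stand-in "pretrained" feature extractor frozen and fits only a new softmax classification head for the seven FER2013 emotion classes. Assumptions: the backbone is a fixed random projection rather than VGG-Face or ResNet-18, the data are synthetic 48×48 arrays, and all names (`frozen_features`, `train_head`, `make_split`) are hypothetical.

```python
import numpy as np

NUM_CLASSES = 7        # FER2013 labels: angry, disgust, fear, happy, sad, surprise, neutral
IMG_PIXELS = 48 * 48   # FER2013 images are 48x48 greyscale
rng = np.random.default_rng(0)

# Hypothetical stand-in for a pretrained backbone (e.g. VGG-Face or ResNet-18 with its
# final layer removed). It is a fixed random projection and is never updated below.
W_BACKBONE = rng.standard_normal((IMG_PIXELS, 128)) / 48.0


def frozen_features(images):
    """Map flattened images to L2-normalised 128-d features using the frozen backbone."""
    feats = np.maximum(images.reshape(len(images), -1) @ W_BACKBONE, 0.0)  # ReLU
    return feats / (np.linalg.norm(feats, axis=1, keepdims=True) + 1e-8)


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_head(features, labels, epochs=300, lr=0.5):
    """Train only the new linear head with mean cross-entropy and plain gradient descent."""
    n, d = features.shape
    W = np.zeros((d, NUM_CLASSES))
    b = np.zeros(NUM_CLASSES)
    onehot = np.eye(NUM_CLASSES)[labels]
    for _ in range(epochs):
        grad = (softmax(features @ W + b) - onehot) / n  # d(loss)/d(logits)
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(features, W, b):
    return np.argmax(features @ W + b, axis=1)


# Usage on synthetic data: each class is a random "mean face" plus pixel noise.
class_means = rng.standard_normal((NUM_CLASSES, IMG_PIXELS))


def make_split(n):
    labels = rng.integers(0, NUM_CLASSES, size=n)
    images = class_means[labels] + 0.5 * rng.standard_normal((n, IMG_PIXELS))
    return images, labels


x_train, y_train = make_split(700)
x_test, y_test = make_split(140)
backbone_before = W_BACKBONE.copy()
W, b = train_head(frozen_features(x_train), y_train)
train_acc = np.mean(predict(frozen_features(x_train), W, b) == y_train)
test_acc = np.mean(predict(frozen_features(x_test), W, b) == y_test)
print(f"train accuracy {train_acc:.2f}, test accuracy {test_acc:.2f}")
```

In practice the backbone would be a real pretrained network, and some of its later layers might also be fine-tuned; freezing it entirely is only the simplest variant of transfer learning.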

The implemented VGG-Face and modified ResNet-18 architectures achieved top-1 accuracies of 71.2% and 67.2%, respectively, on the FER2013 dataset. On the AffectNet dataset, VGG-Face achieved 58.75% and the modified ResNet-18 achieved 55.2%. These results demonstrate that transfer learning from a closely related starting point is a highly effective way to obtain good performance without training a network from scratch. Accuracy also varied between emotions: some individual emotions were detected with higher accuracy, while certain others were misidentified by the classifier.
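Top-1 accuracy counts a prediction as correct only when the highest-scoring class matches the true label, and per-emotion behaviour is commonly inspected through a confusion matrix. The sketch below, assuming NumPy and the seven FER2013 labels, computes both from a hypothetical handful of predictions; the toy numbers are illustrative only and are not results from this work, and the function names are hypothetical.

```python
import numpy as np

EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the true label."""
    return float(np.mean(np.argmax(scores, axis=1) == labels))


def confusion_matrix(labels, preds, num_classes):
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (labels, preds), 1)
    return cm


def per_class_accuracy(cm):
    """Diagonal over row sums: the share of each true class classified correctly."""
    support = cm.sum(axis=1)
    # Classes with no samples are reported as NaN instead of dividing by zero
    return np.divide(np.diag(cm), support, out=np.full(len(cm), np.nan), where=support > 0)


labels = np.array([3, 3, 2, 6, 0])       # true: happy, happy, fear, neutral, angry
predicted = np.array([3, 3, 5, 6, 6])    # toy predictions: fear->surprise, angry->neutral
scores = np.full((5, len(EMOTIONS)), 0.05)
scores[np.arange(5), predicted] = 0.7    # the predicted class gets the highest score

acc = top1_accuracy(scores, labels)
print(acc)  # 0.6
cm = confusion_matrix(labels, np.argmax(scores, axis=1), len(EMOTIONS))
per_class = per_class_accuracy(cm)
for name, value in zip(EMOTIONS, per_class):
    print(f"{name:>8}: {value:.2f}")  # classes with no samples print "nan"
```

Off-diagonal entries of `cm` show which emotions are confused with which, which is how misidentified emotions are typically located.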

References

[1] M. Pantic and L. J. M. Rothkrantz, “Facial action recognition for facial expression analysis from static face images,” IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 34, pp. 1449–1461, June 2004.

[2] A. Ali, S. Hussain, F. Haroon, S. Hussain, and M. F. Khan, “Face recognition with local binary patterns,” 2012.

[3] G. Levi and T. Hassner, “Emotion recognition in the wild via convolutional neural networks and mapped binary patterns,” in Proc. ACM International Conference on Multimodal Interaction (ICMI), November 2015.

[4] A. Mollahosseini, B. Hassani, and M. H. Mahoor, “AffectNet: A database for facial expression, valence, and arousal computing in the wild,” CoRR, vol. abs/1708.03985, 2017.

[5] I. J. Goodfellow et al., “Challenges in representation learning: A report on three machine learning contests,” in Neural Information Processing, (Berlin, Heidelberg), pp. 117–124, Springer Berlin Heidelberg, 2013.

Student: Chris Bonnici

Supervisor: Dr. Inġ. Reuben Farrugia

Course: B.Sc. (Hons.) Computer Engineering