Artificial Neural Networks and Deep Learning approaches have proven their capabilities on in-air imagery [1, 2, 3, 4], which has sparked interest in training the same models on underwater images [2]. However, collecting a sufficiently large dataset is a tedious task that is often deemed infeasible, while training a model on a small sample is likely to lead to over-fitting. Overcoming these challenges would make this technology useful in a variety of fields: environmental, through ocean clean-ups; economic, through pipeline inspections; historical, through underwater archaeology; and others.

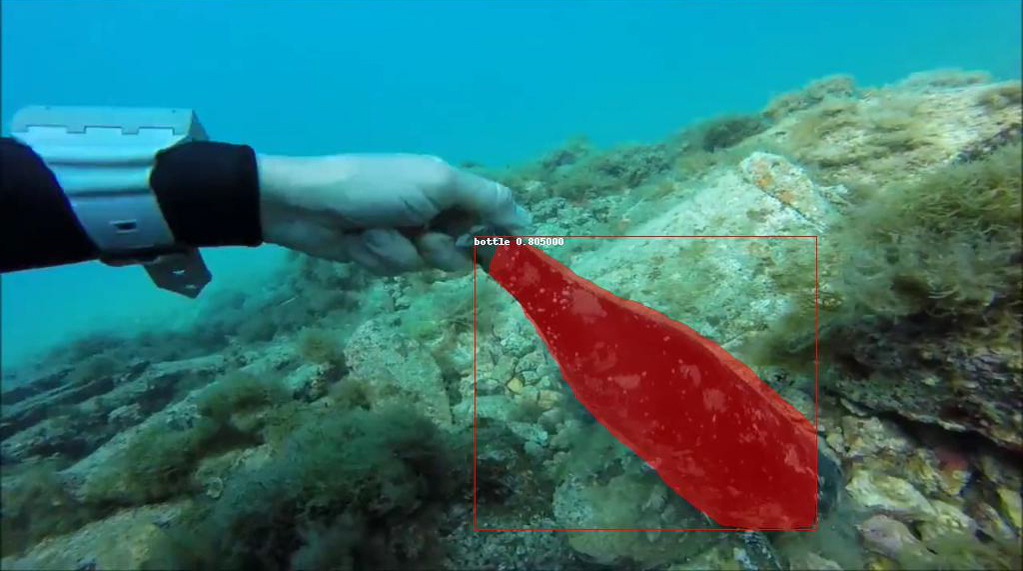

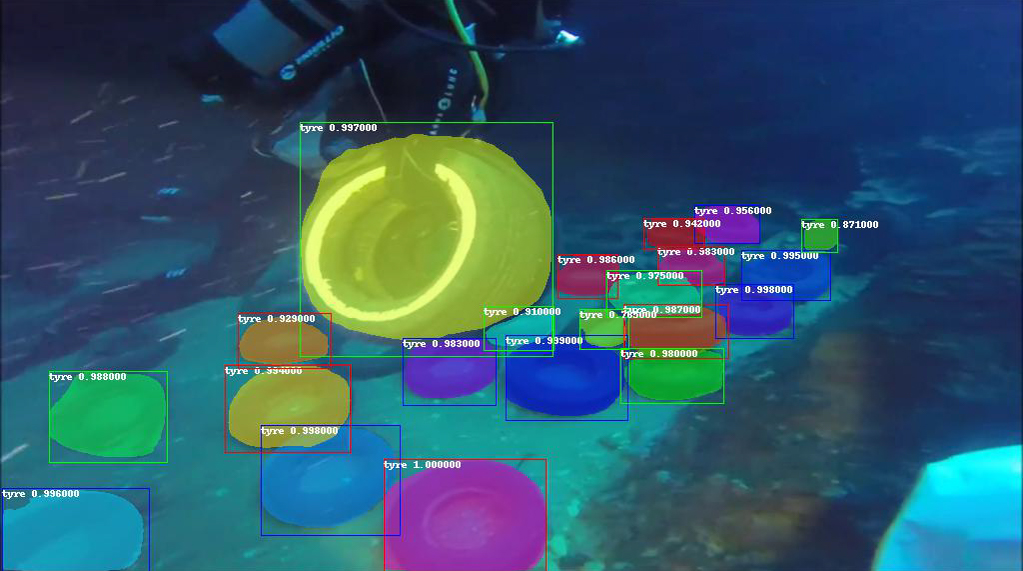

To overcome the problem of over-fitting, this project used transfer learning, on the argument that Convolutional Neural Networks (CNNs) are not only classifiers but also feature extractors. A CNN trained on a large dataset of in-air images should therefore be able to classify objects in underwater scenes after some fine-tuning on underwater images, since the pre-trained model is already sensitive to information such as colours, textures and edges [1, 3, 5]. Since no suitable dataset was available, images had to be gathered and annotated manually. The dataset was split 70:30 into a training set and a test set. The model was trained to detect and classify two classes of objects: bottles (both glass and plastic) and tyres.
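As an illustration of the 70:30 split, the following is a minimal sketch and not the project's actual code. The function name `split_dataset`, the fixed random seed and the file names are assumptions made for the example; the abstract does not state how the split was performed.

```python
import random


def split_dataset(items, train_fraction=0.7, seed=0):
    """Shuffle the items and split them into (training set, test set).

    Hypothetical helper: the seed and the rounding rule are assumptions.
    """
    shuffled = list(items)
    # A dedicated Random instance keeps the split reproducible
    # without touching the global random state.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the annotated underwater images.
images = [f"img_{i}.jpg" for i in range(10)]
train_set, test_set = split_dataset(images)
```

With ten images this gives seven for training and three for testing. Shuffling before the cut avoids a split that follows the order in which the images were collected.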

Mask R-CNN [6], pre-trained on the COCO dataset [7], was the model chosen for this project. Mask R-CNN uses ResNet-FPN as the feature extractor. The extracted features are passed to the first of two stages, the Region Proposal Network (RPN), which proposes candidate boxes. These are in turn passed to the second stage, which outputs the class, bounding-box refinement and mask for each candidate box.
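The fine-tuning setup can be sketched as follows. This assumes the torchvision implementation of Mask R-CNN with a ResNet-50-FPN backbone; the abstract does not say which implementation or backbone depth was used. The function name `build_finetune_model`, the `CLASS_NAMES` list and the hidden-layer size of 256 are assumptions for illustration.

```python
# Background plus the two object classes named in the text.
CLASS_NAMES = ["background", "bottle", "tyre"]


def build_finetune_model(num_classes=len(CLASS_NAMES)):
    """Return a COCO-pre-trained Mask R-CNN with new prediction heads.

    Assumption: torchvision >= 0.13 is installed. The imports are local
    so that this sketch can be loaded without torchvision present.
    """
    from torchvision.models.detection import maskrcnn_resnet50_fpn
    from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
    from torchvision.models.detection.mask_rcnn import MaskRCNNPredictor

    # ResNet-FPN backbone, RPN and heads, initialised from COCO weights.
    model = maskrcnn_resnet50_fpn(weights="DEFAULT")

    # Second stage: replace the class and box-refinement head.
    in_features = model.roi_heads.box_predictor.cls_score.in_features
    model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

    # Second stage: replace the mask head.
    in_channels = model.roi_heads.mask_predictor.conv5_mask.in_channels
    model.roi_heads.mask_predictor = MaskRCNNPredictor(in_channels, 256, num_classes)
    return model
```

Only the two second-stage heads are replaced. The backbone and the RPN keep their pre-trained weights, which is what allows the features learnt from in-air images to be reused.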

The model was evaluated using Mean Average Precision (mAP). The results, while not surpassing the current state of the art, were promising: the final mAP over all classes was 0.509, with APs of 0.616 and 0.442 for the bottle and tyre classes respectively.
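For readers unfamiliar with the metric, the following is a minimal sketch of one common way to compute average precision: the area under the precision-recall curve with all-point interpolation. The abstract does not state which AP variant or IoU threshold was used, so this is an assumption, and the function names and toy detections are hypothetical.

```python
def average_precision(detections, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class.

    detections: list of (confidence, is_true_positive) pairs, where a
    detection counts as a true positive if it matched a ground-truth
    object (the matching rule is outside this sketch).
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions, recalls = [], []
    true_positives = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Interpolate: precision at each rank becomes the best precision
    # achieved at that rank or any later one.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles under the resulting step curve.
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(ap_per_class):
    """Unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example: three detections for a class with two ground-truth objects.
toy_ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
```

The per-class values are then averaged to give a single score over all classes.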

References

[1] X. Yu and X. Xing and H. Zheng and X. Fu and Y. Huang and X. Ding, “Man-Made Object Recognition from Underwater Optical Images Using Deep Learning and Transfer Learning,” 2018 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, AB, Canada, April 15-20, 2018, pp. 1852–1856, 2018.

[2] Y. Gonzalez-Cid and A. Burguera and F. Bonin-Font and A. Matamoros, “Machine learning and deep learning strategies to identify Posidonia meadows in underwater images,” in OCEANS 2017, Aberdeen, 2017.

[3] L. Jin and H. Liang, “Deep learning for underwater image recognition in small sample size situations,” in OCEANS 2017, pages 1-4, Aberdeen, 2017.

[4] O. Py and H. Hong and S. Zhongzhi, “Plankton classification with deep convolutional neural networks,” IEEE Information Technology, Networking, Electronic and Automation Control Conference, pp. 132-136, 2016.

[5] D. Levy and Y. Belfer and E. Osherov and E. Bigal and A. P. Scheinin and H. Nativ and D. Tchernov and T. Treibitz, “Automated Analysis of Marine Video With Limited Data,” in 2018 IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPR, pages 1385-1393, Salt Lake City, 2018.

[6] K. He and G. Gkioxari and P. Dollár and R. B. Girshick, “Mask R-CNN,” in IEEE International Conference on Computer Vision, ICCV, pages 2980-2988, Venice, 2017.

[7] T.-Y. Lin and M. Maire and S. J. Belongie and J. Hays and P. Perona and D. Ramanan and P. Dollár and C. L. Zitnick, “Microsoft COCO: Common Objects in Context,” in Computer Vision – ECCV 2014, 13th European Conference, pages 740-755, Zurich, 2014.

Student: Stephanie Chetcuti

Supervisor: Prof. Matthew Montebello

Co-Supervisor: Prof. Alan Deidun

Course: B.Sc. IT (Hons.) Artificial Intelligence