Digitisation, globalisation and the prevalence of English have made information, education, research and international funds and programs more accessible. At first, that may sound like a purely positive development. However, many languages, especially those with few speakers, can get left behind in research. This project focussed on a particularly interesting aspect of the Maltese language: inflection. Recent research in the domain of inflection was analysed with a special focus on the Maltese context. The project sought to answer the question of whether neural networks with an encoder-decoder architecture are sufficient for modelling the complete inflection of Maltese.

Inflection is a characteristic of many languages. It is the process of changing a lemma into another form to represent different grammatical functions. Maltese has very complex verb inflection, with changes to the suffix, the infix and the prefix. These changes can convey number, gender and object relations, among others. The process of transforming a lemma into its inflected form can essentially be reformulated as a sequence-to-sequence translation task.

Inflection can be solved either by linguists hand-crafting language-specific rules, or by a machine-learning system that learns from sample inflections and generalises well enough to previously unseen lemmas. Recent research on systems that learn to generalise from training samples shows promising results using neural networks, with inflection accuracies approaching 100%.

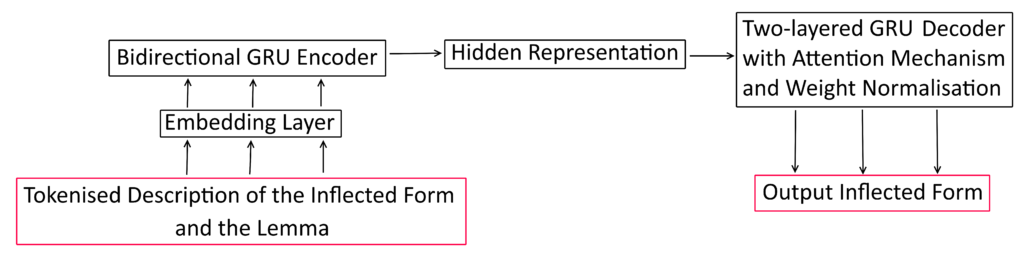

The FYP’s system takes a lemma and a grammatical description of the inflected form as input and produces the inflection from it. To do so, the system tokenises the description and the lemma and feeds them into a neural network consisting of an encoder and a decoder part. Encoder-decoder structures can be used in a variety of ways. Here, both parts are recurrent neural networks, which can handle the sequential nature and varying lengths of the input and output. More specifically, the encoder is a bidirectional gated recurrent neural network, whereas the decoder is a two-layered gated recurrent neural network. The system also uses an embedding layer, an attention mechanism and weight normalisation.
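The input preparation described above can be illustrated with a minimal sketch. The tag format (`V;PFV;3;PL`), the special tokens (`<sos>`, `<eos>`, `<pad>`, `<unk>`) and the function names are assumptions made for illustration only; the project's actual tokenisation scheme is not specified here.

```python
# Illustrative sketch only: the tag format, special tokens and function
# names are assumptions, not the project's actual implementation.

def tokenise(lemma, tags):
    """Combine a grammatical description and a lemma into one token sequence.

    Each tag becomes a single token; the lemma is split into characters.
    """
    tag_tokens = [f"<{tag}>" for tag in tags.split(";") if tag]
    char_tokens = list(lemma)
    return ["<sos>"] + tag_tokens + char_tokens + ["<eos>"]


def build_vocab(sequences):
    """Assign an integer id to every token seen in the given sequences."""
    vocab = {"<pad>": 0, "<unk>": 1}
    for sequence in sequences:
        for token in sequence:
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(tokens, vocab):
    """Map tokens to ids, falling back to <unk> for unseen tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokens]


# Hypothetical example: the lemma "kiteb" with an assumed tag string.
source = tokenise("kiteb", "V;PFV;3;PL")
vocab = build_vocab([source])
ids = encode(source, vocab)
```

The resulting id sequence is what an embedding layer would consume before the encoder; the target form would be split into characters in the same way for the decoder.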

The system was trained on a few different datasets to compare the results to previous research in the domain and to answer the question of the FYP. Most notably, it was trained on a dataset consisting of sample inflections from the complete set of inflections of Maltese, called the paradigm. This paradigm includes over 1000 inflections for every verb and a few for every noun and adjective.

When using the same training dataset, the system was not quite able to achieve the same very high accuracies as previous research (95%), reaching an accuracy of only 88.88%. This could be due to a difference in the architectural setup of the neural system. When the system was trained on the full paradigm, the accuracy decreased further to 71.6%, a significant drop that reflects the complexity of Maltese morphology. Although the results obtained are not as high as originally expected, this is not reason enough to completely rule out encoder-decoder architectures for the complete inflection of Maltese. This project has shown the difficulty of dealing with Maltese morphology and that further work is required to find a more appropriate neural architecture for the processing of Maltese.
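The abstract does not state how accuracy was computed. Assuming it is the share of predicted forms that match the reference inflection exactly, which is a common choice for inflection tasks, it could be computed as in the sketch below. The function name and the sample word lists are hypothetical and are not the project's data.

```python
# Illustrative sketch only: assumes accuracy means whole-word exact match.

def exact_match_accuracy(predictions, targets):
    """Return the fraction of predictions identical to their target form."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    correct = sum(pred == gold for pred, gold in zip(predictions, targets))
    return correct / len(targets)


# Hypothetical model outputs and reference forms (not real results).
predicted = ["kitbu", "nikteb", "kitbet", "kitbt"]
reference = ["kitbu", "nikteb", "kitbet", "ktibt"]
accuracy = exact_match_accuracy(predicted, reference)
```

Under this measure a prediction that differs by a single character counts as wrong, so three correct forms out of four give an accuracy of 0.75.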

Student: Georg Schneeberger

Supervisor: Dr Claudia Borg

Course: B.Sc. IT (Hons.) Artificial Intelligence