Decision trees are models whose structure makes it possible to trace how a final decision was reached. Neural networks, often described as ‘black box’ models, do not readily offer such an explanation. However, because neural networks are capable of learning knowledge representations, being able to interpret their decisions would be very useful.

In this project, the Visual Relationship Detection problem will be explored through different neural network implementations and training methods. These implementations include two Convolutional Neural Network architectures (VGG16 and SmallVGG) and two Feed Forward Neural Networks, one trained on geometric features and the other on geometric and language features. Each model will be treated under two problem formulations: Multi-Label Classification and Single-Label Classification.
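The difference between the two formulations can be illustrated with a minimal sketch. It assumes the usual conventions, which the project itself does not spell out here: a softmax over the outputs with a single arg-max prediction for the single-label case, and independent sigmoids with a threshold for the multi-label case. The preposition list, the logits, and the 0.5 threshold are hypothetical values chosen for illustration only.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution that sums to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Squash one raw score into an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_single_label(logits, labels):
    """Single-label: classes compete, exactly one preposition is returned."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best]

def predict_multi_label(logits, labels, threshold=0.5):
    """Multi-label: each class is judged on its own, several may be returned."""
    return [lab for z, lab in zip(logits, labels) if sigmoid(z) >= threshold]

# Hypothetical label set and network outputs for one subject-object pair.
PREPOSITIONS = ["on", "next to", "under", "behind"]
logits = [2.0, 0.5, -3.0, -1.0]

single = predict_single_label(logits, PREPOSITIONS)
multi = predict_multi_label(logits, PREPOSITIONS)
```

With these illustrative scores, the single-label reading keeps only the top-scoring preposition, while the multi-label reading keeps every preposition whose sigmoid output clears the threshold.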

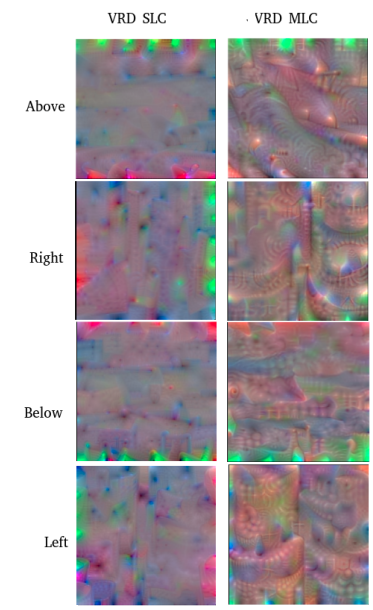

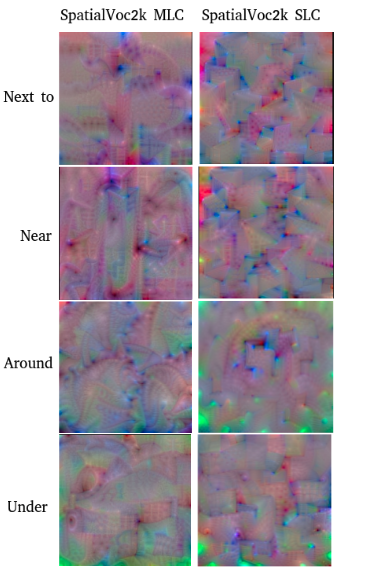

Activation Maximization will be used to interpret the different Convolutional Neural Networks under different training methods: the input image is optimized to maximize a specific class output, which visualizes what the network has learned for that class. This study is grounded in the recognition of spatial relations between objects in images. Activation Maximization will shed light on what the models are learning about objects in 2D images, which should give insight into how the system can be improved. In the spatial relation problem, the correct spatial preposition is predicted given a subject and an object. The problem extends beyond predicting a single correct preposition, since several relationships can hold between two objects at once.
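The core idea of Activation Maximization, gradient ascent on the input rather than on the weights, can be sketched on a toy model. The example below is an assumption-laden illustration, not the project's implementation: it swaps the Convolutional Neural Network for a tiny linear scorer whose gradient can be written by hand, and it adds an L2 penalty on the input (a common regularizer, assumed here) so that the optimum is finite. The weights `W`, biases `B`, step count, learning rate, and penalty strength are all hypothetical.

```python
def class_score(weights, bias, x, target):
    """Raw (pre-softmax) score of class `target` for input vector `x`."""
    return sum(w * xi for w, xi in zip(weights[target], x)) + bias[target]

def activation_maximization(weights, target, steps=200, lr=0.1, l2=0.5):
    """Gradient ascent on the input to maximize one class score.

    Objective: w_t . x + b_t - l2 * ||x||^2, with the model weights frozen.
    Only the input x is updated.
    """
    x = [0.0] * len(weights[target])  # start from a blank "image"
    for _ in range(steps):
        # Gradient of the objective with respect to the input:
        # d/dx [w_t . x + b_t - l2 * ||x||^2] = w_t - 2 * l2 * x
        grad = [w - 2.0 * l2 * xi for w, xi in zip(weights[target], x)]
        x = [xi + lr * g for xi, g in zip(x, grad)]
    return x

# Hypothetical toy model: 2 classes, 4 input "pixels".
W = [[1.0, -1.0, 0.0, 0.5],
     [0.0, 2.0, -1.0, 0.0]]
B = [0.0, 0.1]

x_star = activation_maximization(W, target=1)
blank_score = class_score(W, B, [0.0] * 4, 1)
best_score = class_score(W, B, x_star, 1)
```

For this linear toy model the optimized input converges toward `W[target] / (2 * l2)`, so the "visualization" simply reproduces the pattern the class responds to. For a deep network the gradient would instead come from backpropagation through the frozen model, and the resulting image is what gets inspected.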

Student: Vitaly Volozhinov

Supervisor: Prof. Inġ. Adrian Muscat

Course: B.Sc. (Hons.) Computing Science