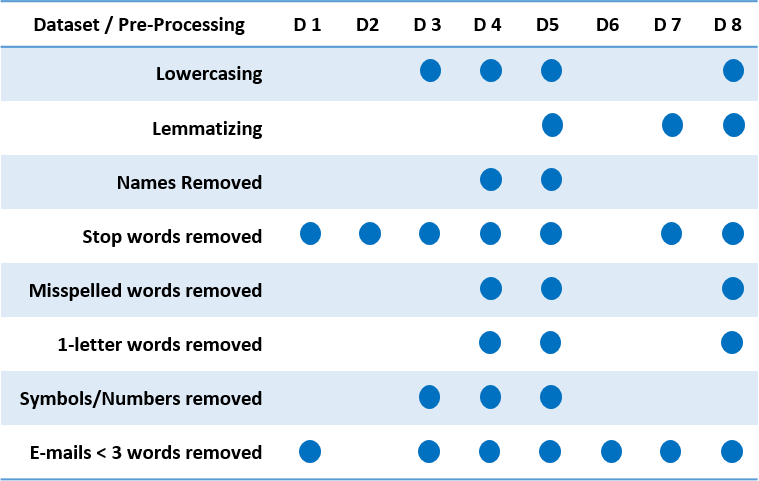

It is estimated that, worldwide, around 246.5 billion e-mails are sent every day [1]. E-mail communication is used by billions of people every day and is a mission-critical application for many businesses [1]. E-mail users often feel that they are ‘drowning’ in e-mails and may start to lose track of what types of e-mails they have in their inbox. The principal aim of this study is to investigate the effect that text pre-processing, input encodings, and feature selection techniques have on the performance of various machine learning algorithms applied to automatic categorization of e-mails into given classes. As a reference point, we used the work done by Padhye [2] who, in her Masters dissertation, compared the performance of 3 supervised machine learning algorithms – Support Vector Machine, Naïve Bayes, and J48 for the purpose of e-mail categorization. Padhye used the Enron e-mail dataset for this purpose. The data was manually labelled by Padhye herself into 6 main categories. The categories are; Business, Personal, Human-Resources, General-Announcements, Enron-Online and Chain-Mails. Padhye used the WEKA libraries to implement the 3 classification algorithms. Using Padhye’s results as a baseline, we experimented with different encoding schemes by applying different text pre-processing (Figure 1) and feature selection techniques. Significant improvements were achieved on the results obtained in [2]. The most significant improvement obtained over

that of Padhye [2], was 17.67%, for the Business versus Personal versus Human Resources classification. Unexpectedly, uni-gram features performed better than bi-grams, in our experiments. The average accuracy was increased by 12.76%, when compared to the average accuracy obtained by Padhye in [2].

Additional experiments were conducted to compare the difference in accuracy when using normalized and non- normalized TF-IDF values. Normalized TF-IDF values, in general, performed better. A novel classification algorithm, which makes use of pre-built class models to model different classes available in the dataset, was also proposed. During the classification, an unseen e-mail is compared to each class model, giving different scores to each model according to the similarity between the e-mail and the model. The class model which obtains the highest score is considered to be the category that the particular e-mail should be classified in. The custom classifier also gave better classification accuracy when compared to the results obtained by Padhye – albeit not as good as those obtained by the WEKA algorithms and our feature selection techniques. An average accuracy improvement of 5.13% was obtained when compared to Padhye’s results.

The results also highlighted the importance of selecting the optimal set of features for a machine learning task.

References

[1] The Radicati Group Incorporation, “Email-Statistics-Report, 2015-2019”, A Technology Market Research Firm, Palo Alto, CA, USA, 2015.

[2] A. Padhye, “Comparing Supervised and Unsupervised Classification of Messages in the Enron Email Corpus”, A M. Sc. Thesis submitted to the Faculty of the Graduate School, University of Minnesota, 2006. Accessed on: 13-Oct-2019. [Online]. Available: http://www.d.umn.edu/tpederse/Pubs/apurva-thesis.pdf

Student: Steve Stellini

Supervisor: Dr John Abela

Course: B. Sc. IT (Hons.) Software Development