This final-year project delivers a study comparing varying artificial intelligence (AI) bots at work, when playing the online strategy game Pokémon Showdown, a web-based Pokémon battle simulator.

The first step consisted in explaining the mechanics of Pokémon battling and how it has evolved throughout the years. The next step required proposing two algorithms to be assigned to a bot respectively. These bots were intended to compete on Pokémon Showdown, which served as an optimal environment to allow these bots to compete against online players, where a set number of battles took place and were recorded. The comparison of the two algorithms was done through the Elo rating provided by the platform. The more successful the algorithm, the higher the win rate, and the higher the Elo rating. The study also evaluated the win rate of these bots, together with the advantages and disadvantages they experienced. This was expected to provide better insight into the larger challenges that each of the algorithms encountered, and which one of them performed better and why. Such an assessment would provide the basis for proposals for future work.

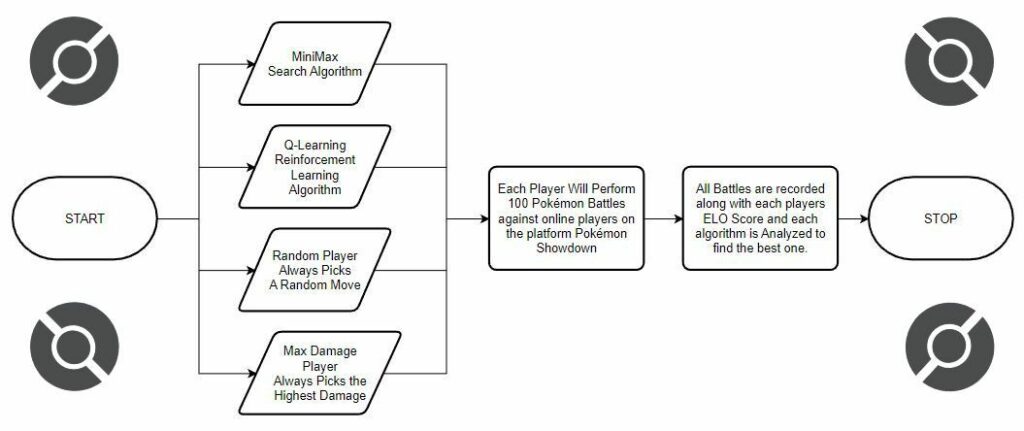

The two algorithms adopted for this study were: a) the Minimax algorithm, which is a search algorithm that could seek future possible turns and prioritise according to the safest route available and b) the Q-learning algorithm, that could evaluate the results of previous battles in order to learn after each one. These bots were then compared to each other, along with two other bots referred to as the ‘simple’ bots. These bots were: a) a random bot that opted for a move on a random basis, without any discernible logic, and b) a high-damage bot that tended to opt for the most damaging move, regardless of the actual battle taking place.

The Minimax bot was expected to have a high win rate in the earlier games but would struggle upon encountering tougher opponents which may be able to predict the safer routes., On the other hand, the Q-learning bot was expected to plateau as it did not have any knowledge to fall back on at the beginning. The simple bots were introduced in order to highlight the effectiveness of Minimax and Q-learning in comparison, these being expected to have a very low win rate and Elo rating.

Following the implementation process, the performance of two primary AI algorithms was evaluated within the context of competitive role-playing games (RPGs), specifically in Pokémon battles. The resulting analysis determined that a search algorithm such as Minimax had a higher success rate than a reinforcement learning (RL) algorithm such as Q-learning, since they were competing in an uncontrolled online environment.

The outcome of this experiment was that neither of the two algorithms registered any huge success in the competitive scene. However, Minimax struggled less than Q-learning when it came to adapting to various different online users, and therefore enjoyed a higher win rate. Furthermore, Q-learning tended to struggle in the early stages, as it had no prior knowledge whatsoever and since, after losing, it kept being paired with players with lower Elo ratings, it could never improve.

Figure 1. Flowchart of the 4 algorithms employed in the project

Student: Nathan Bonavia Zammit

Supervisor: Dr Kristian Guillaumier