Advances in technology have led to new image- and video-capturing devices that can record their entire surroundings. Such devices are called 360-degree or omnidirectional video cameras. They operate at a much higher data rate than traditional video cameras, as the recorded data contains four times more horizontal pixels than a typical video. Therefore, effective video-streaming and encoding technology is required to transmit a video stream of such magnitude over a wireless connection.

Most 360-degree cameras currently available on the market transfer the stream of data to a nearby device over a wireless fidelity (Wi-Fi) connection. A problem arises when the camera moves farther away from the Wi-Fi device: the connection weakens and the quality of the received video stream degrades. One solution is to stream the video over a long-term evolution (LTE) connection, as such networks provide better radio coverage, which allows for consistent data-transmission rates.

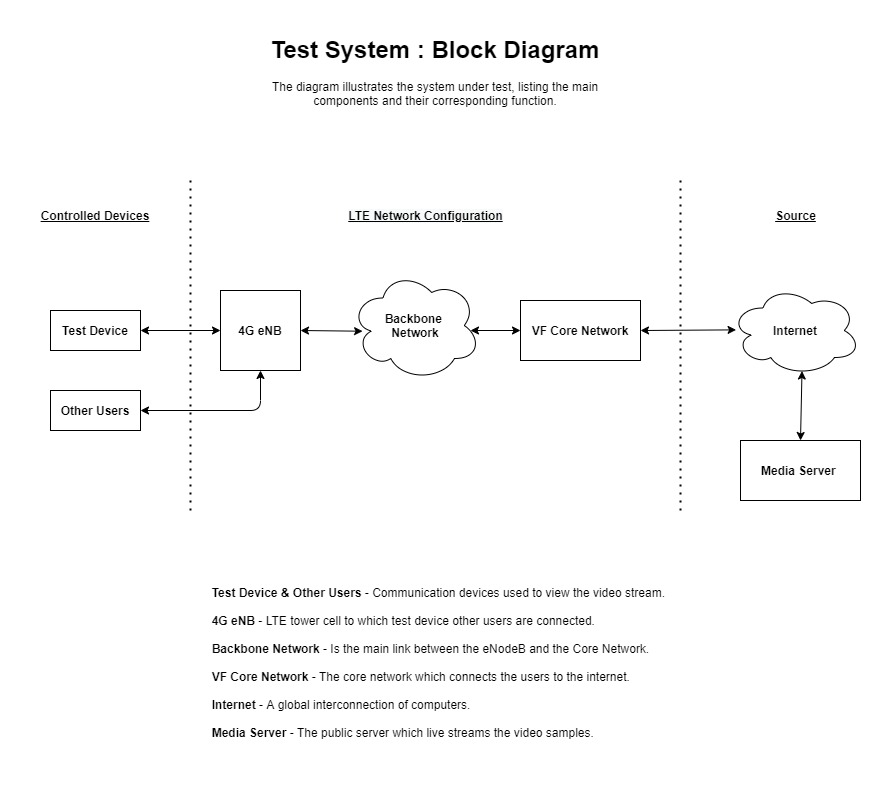

This project reviewed a standard approach to streaming 360-degree live video over LTE. The setup consisted of a local cell (eNodeB) that received an ongoing stream of video data from a public server connected to the internet. A set of three video samples, which differed in bitrate depending on the chosen encoding scheme, was used throughout the research. Moreover, each video sample was transmitted at three different resolutions (FHD, UHD and 4K), thus covering the variations that could occur in the videos.

Additionally, a series of hardware and software techniques was implemented to emulate multiple users and external interference, since the setup, unlike real-world scenarios, offered a perfect connection. The hardware techniques consisted of attenuating the signal itself, thus representing the signal degradation that occurs as the device moves farther away from the radio cell. Dedicated software was used to introduce background traffic, as the same cell typically delivers and receives other data from different users, and also to simulate multiple users connecting to the same server. For optimal quality of experience (QoE), the system requires that the source upload and user download bandwidths always be greater than or equal to the video-stream bitrate multiplied by the number of users watching the stream. Furthermore, to determine QoE, the received video was compared with the reference set at the server. This was done through a series of objective measures such as bitrate, peak signal-to-noise ratio, and structural similarity for different setup configurations.
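As an illustration of one of the objective measures named above, the following is a minimal sketch of a peak signal-to-noise ratio calculation between a reference frame and a received frame. It assumes 8-bit samples supplied as flat lists of equal length; the function name `psnr` and the parameter `max_value` are hypothetical and are not taken from the project's tooling.

```python
import math


def psnr(reference, received, max_value=255):
    """Return the PSNR in dB between two equally sized sample sequences.

    Assumption: samples are 8-bit by default (max_value=255); this is an
    illustrative helper, not the measurement tool used in the project.
    """
    if len(reference) != len(received) or len(reference) == 0:
        raise ValueError("frames must be non-empty and of equal size")

    # Mean squared error between the reference and the received samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, received)) / len(reference)

    # Identical frames have no error, so the ratio is unbounded.
    if mse == 0:
        return math.inf

    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    reference_frame = [100, 100, 100, 100]
    received_frame = [101, 99, 100, 100]
    print(f"PSNR: {psnr(reference_frame, received_frame):.2f} dB")
```

A higher value indicates that the received frame is closer to the reference; in practice the measure would be computed per frame and averaged over the stream.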

In conclusion, the gathered results showed that the system requirement stated above holds true only in perfect network conditions. Once background traffic and signal attenuation were introduced, the stream suffered drastically and QoE deteriorated. This was because the network bandwidth was overloaded, which resulted in data packets being lost in transmission. To avoid such occurrences, a portion of the network bandwidth should always be left unused, and the signal-to-noise ratio of the received signal should remain above a predefined level that depends on the system being used.
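The bandwidth requirement, together with the recommendation to leave part of the bandwidth unused, can be sketched as a simple check. The function name and the `headroom` parameter (the fraction of bandwidth kept in reserve) are assumptions made for illustration; the project does not state a specific reserve value.

```python
def can_sustain_stream(upload_mbps, download_mbps, bitrate_mbps, users, headroom=0.0):
    """Check the stated rule: bandwidth >= bitrate * number of users.

    headroom is a hypothetical fraction (0.0 to below 1.0) of each link's
    bandwidth that is deliberately left unused as a safety margin.
    """
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in the range [0.0, 1.0)")

    # Bandwidth demanded by the stream, per the rule described in the text.
    required_mbps = bitrate_mbps * users

    # Bandwidth that remains usable after reserving the margin.
    usable_upload = upload_mbps * (1.0 - headroom)
    usable_download = download_mbps * (1.0 - headroom)

    return usable_upload >= required_mbps and usable_download >= required_mbps


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the project.
    print(can_sustain_stream(100, 100, bitrate_mbps=20, users=5))
    print(can_sustain_stream(100, 100, bitrate_mbps=20, users=5, headroom=0.2))
```

With no reserve, a link that exactly matches the demand passes the check; once a margin is reserved, the same link fails, which mirrors the finding that the rule holds only in perfect conditions.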

Student: Damian Debono

Course: B.Sc. IT (Hons.) Computer Engineering

Supervisor: Prof. Ing. Carl James Debono

Co-supervisor: Dr. Mario Cordina