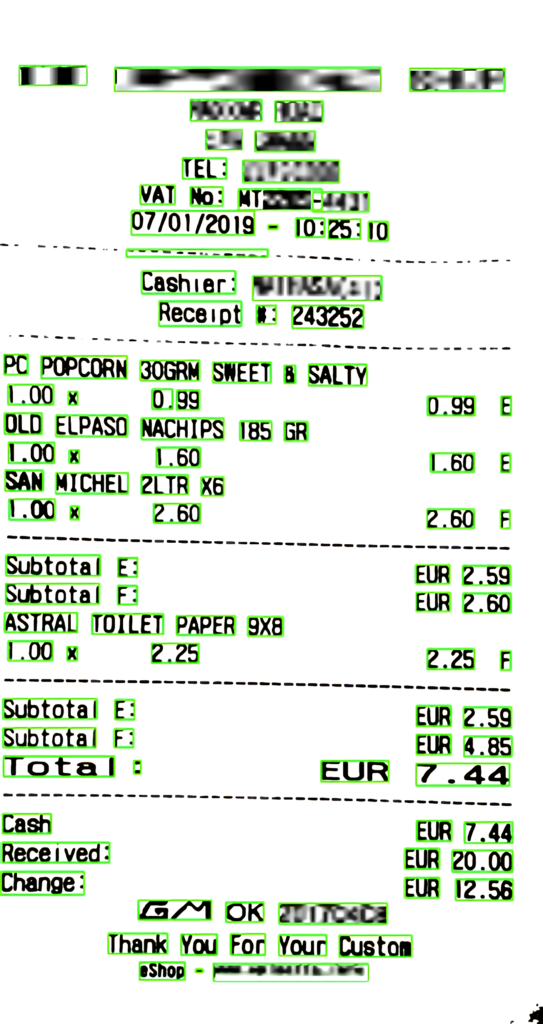

In recent years, with the rise in computational power and the growing popularity of artificial intelligence, the scope of data analysis has grown significantly. Big leaps forward have been made in computer vision generally, and in object detection in particular, such as locating a receipt within an image. However, analysing unstructured data, such as parsing the text printed on a receipt, remains a challenge, and little research has been conducted in this area. Receipts are especially challenging because there is no standard format for the placement of text or for keywords such as ‘total’, which differ from one vendor to another. This dissertation explores an image-based and a text-based implementation for extracting key details from a receipt, such as the shop name, date, VAT number and total.

The aim of this project is to segment a document, more specifically a receipt, and extract important information from its image. This information could include the company or shop name, shop details, items, prices, date and total.

This system could be used, for example:

(a) in data analytics, and

(b) to facilitate data input through the automatic detection and completion of fields.
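To illustrate the second use case, here is a minimal sketch of how automatic field completion could work once some classifier has already labelled each OCR text line. It assumes each prediction arrives as a hypothetical `(text, label, score)` tuple and keeps the highest-scoring line per field; the `ReceiptFields` record, `fill_fields` function and label names are illustrative assumptions, not the dissertation's actual interface.

```python
from dataclasses import dataclass, fields
from typing import Iterable, Optional, Tuple

@dataclass
class ReceiptFields:
    """Hypothetical form record that the system would pre-fill."""
    shop_name: Optional[str] = None
    date: Optional[str] = None
    vat: Optional[str] = None
    total: Optional[str] = None

FIELD_NAMES = {f.name for f in fields(ReceiptFields)}

def fill_fields(predictions: Iterable[Tuple[str, str, float]]) -> ReceiptFields:
    """Keep the most confident line for each field; lines labelled 'other' are ignored."""
    best = {}  # label -> (text, score)
    for text, label, score in predictions:
        if label not in FIELD_NAMES:  # skips 'other' and any unknown label
            continue
        if label not in best or score > best[label][1]:
            best[label] = (text, score)
    return ReceiptFields(**{label: text for label, (text, _) in best.items()})

# Example: two lines compete for 'total'; the higher-scoring one wins.
print(fill_fields([
    ("ACME LTD", "shop_name", 0.91),
    ("TOTAL 23.50", "total", 0.88),
    ("SUBTOTAL 20.00", "total", 0.40),
    ("Thank you!", "other", 0.95),
]))
# ReceiptFields(shop_name='ACME LTD', date=None, vat=None, total='TOTAL 23.50')
```

Fields that no line was assigned to stay `None`, so a user interface could leave those inputs empty for manual entry.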

An image-based model was created first, using a five-layer convolutional neural network trained specifically to search for the shop name, VAT number and total amount. The second implementation is a two-part network, each part using an LSTM layer: the characters extracted by optical character recognition (OCR) are analysed by the network, and each input is classified as a shop name, date, VAT number, total, or “other”.
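The core idea of the text-based model, an LSTM reading OCR characters and assigning a class, can be sketched in plain Python with no dependencies. This is a minimal, untrained illustration under stated assumptions: the character vocabulary, hidden size and class names (`shop_name`, `date`, `vat`, `total`, `other`) are hypothetical, only one LSTM part is shown because the abstract does not specify how the two parts are divided, and training (backpropagation on labelled receipt lines) is omitted entirely.

```python
import math
import random

LABELS = ["shop_name", "date", "vat", "total", "other"]
# Hypothetical character set; any other character maps to a shared "unknown" slot.
VOCAB = "abcdefghijklmnopqrstuvwxyz0123456789 .,:/-%#"

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

class CharLSTMClassifier:
    """Character-level LSTM encoder followed by a softmax over LABELS (random weights)."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.hidden = hidden
        n_in = len(VOCAB) + 1  # +1 for the unknown-character slot
        concat = n_in + hidden
        # One weight matrix and bias per gate: input (i), forget (f), output (o), candidate (c).
        self.W = {g: rand_matrix(hidden, concat, rng) for g in "ifoc"}
        self.b = {g: [0.0] * hidden for g in "ifoc"}
        self.b["f"] = [1.0] * hidden  # common initialisation: forget gate starts mostly open
        self.W_out = rand_matrix(len(LABELS), hidden, rng)
        self.b_out = [0.0] * len(LABELS)

    def one_hot(self, ch):
        vec = [0.0] * (len(VOCAB) + 1)
        idx = VOCAB.find(ch.lower())
        vec[idx if idx >= 0 else len(VOCAB)] = 1.0
        return vec

    def encode(self, text):
        """Run the LSTM over the characters and return the final hidden state."""
        h = [0.0] * self.hidden
        c = [0.0] * self.hidden
        for ch in text:
            z = self.one_hot(ch) + h  # concatenate current input with previous hidden state
            pre = {g: [a + b for a, b in zip(matvec(self.W[g], z), self.b[g])] for g in "ifoc"}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            o = [sigmoid(v) for v in pre["o"]]
            cand = [math.tanh(v) for v in pre["c"]]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h

    def predict_proba(self, text):
        logits = [a + b for a, b in zip(matvec(self.W_out, self.encode(text)), self.b_out)]
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(x - m) for x in logits]
        total = sum(exps)
        return {label: e / total for label, e in zip(LABELS, exps)}

    def classify(self, text):
        probs = self.predict_proba(text)
        return max(probs, key=probs.get)

model = CharLSTMClassifier()
for line in ["ACME SUPERMARKET", "12/03/2019", "TOTAL 23.50"]:
    print(f"{line!r} -> {model.classify(line)}")  # arbitrary labels until the weights are trained
```

Reading characters rather than whole words lets a model of this kind pick up patterns such as date separators or decimal amounts even when the surrounding keywords vary between vendors; in practice a framework such as PyTorch or Keras would replace the hand-written gates.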

The image-based model serves as a proof of concept; with enough time and training data, it could become a viable solution in the future. The text-based model, on the other hand, yielded promising results. The tests conducted include a comparison of this model with two existing products on the market, and the results are considered a success.

Student: Kurt Camilleri

Supervisor: Prof. Inġ. Adrian Muscat

Co-Supervisor: Dr Inġ. Reuben Farrugia

Course: B.Sc. (Hons.) Computer Engineering