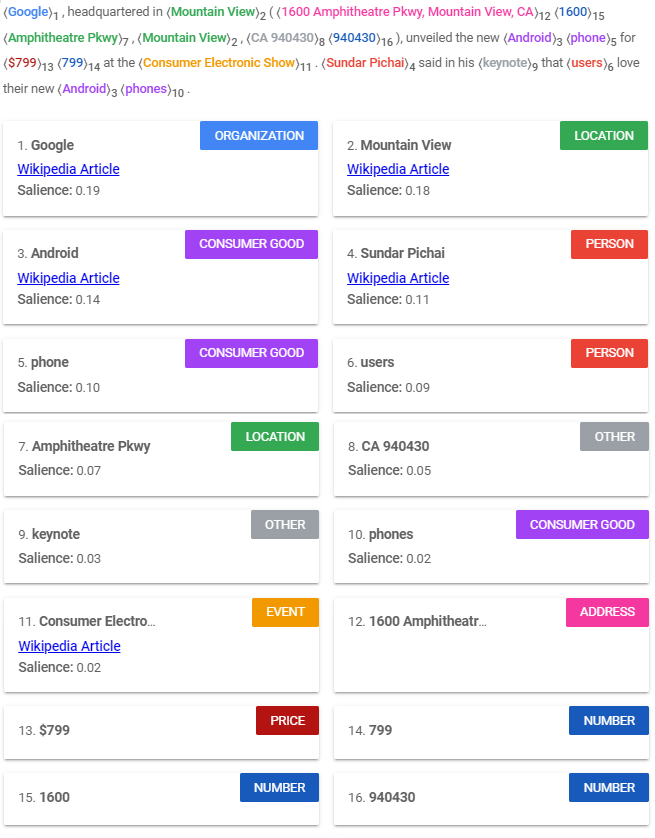

Named-entity recognition (NER) is a subtask in the field of natural language processing (NLP), whereby named entities such as ‘person’, ‘organisation’ and ‘location’ are identified and labelled in text. NER contributes substantially to information extraction, as it identifies the named entities from which the required information, such as how they relate to each other, can be extracted. This is also known as ‘entity linking’.

In general, state-of-the-art NER systems are trained on large corpora in which named entities have already been tagged through human annotation initiatives. However, not all languages have the benefit of a sufficiently large corpus. In fact, Maltese lacks both available NER-annotated datasets and previously created Maltese NER models. Hence, the aim of this study was to review previous work on low-resource NER in order to obtain enough knowledge to contribute towards the challenging task of creating and evaluating the first NER system for Maltese.

In this project, a small dataset of 500 sentences extracted from the MLRS corpus was created. The sentences were manually annotated at word level using the following categories: ‘Person’, ‘Location’, ‘Organisation’ and ‘Miscellaneous’. These were further annotated using the BIO tagging system, which indicates whether a word is found at the (B)eginning, (I)nside or (O)utside of a named entity. In order to broaden the dataset, transfer learning was tentatively applied by including datasets from other languages, namely English, Italian, Spanish and Dutch.
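The BIO scheme described above can be illustrated with a minimal sketch. The function name `spans_to_bio`, the label abbreviations (`PER`, `ORG`, `LOC`) and the English example sentence are assumptions made for illustration only; they are not taken from the project's annotation tooling or from the MLRS corpus.

```python
from typing import List, Tuple

# A span is (start_index, end_index_exclusive, label); this layout is a hypothetical choice.
Span = Tuple[int, int, str]


def spans_to_bio(tokens: List[str], spans: List[Span]) -> List[str]:
    """Convert word-level entity spans into one BIO tag per token."""
    tags = ["O"] * len(tokens)            # every token starts (O)utside an entity
    for start, end, label in spans:
        tags[start] = f"B-{label}"        # first token: (B)eginning of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"        # remaining tokens: (I)nside the entity
    return tags


# Illustrative sentence, not drawn from the annotated dataset.
tokens = ["Maria", "Borg", "works", "at", "the", "University", "of", "Malta", "in", "Msida", "."]
spans = [(0, 2, "PER"), (5, 8, "ORG"), (9, 10, "LOC")]
tags = spans_to_bio(tokens, spans)

for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Each token receives exactly one tag, so the output is a sequence that a sequence-labelling model can be trained to predict directly.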

The experiments sought to evaluate the use of two techniques: conditional random fields (CRF) and, as a deep learning approach, bidirectional long short-term memory with conditional random fields (BiLSTM-CRF). These experiments also required considering a number of scenarios, since there were no specific annotation guidelines for Maltese. Initially, tags were limited to the ‘Person’, ‘Organisation’ and ‘Location’ labels, with a later introduction of the ‘Miscellaneous’ tag for further experimentation. It was also necessary to ensure that the tags matched those available in the selected multilingual NER datasets, in order to streamline transfer learning for the Maltese annotations. We also experimented with the size of the multilingual corpora to analyse the impact that other languages could have on the Maltese NER system. This was done incrementally, with the first corpus containing Maltese only, and each of the others adding one of the following numbers of sentences from each language: 200, 300, 400 and 500.
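The incremental corpus setup can be sketched as follows. This is an illustration under stated assumptions, not the project's actual pipeline: the function `build_corpora`, the corpus names, and the choice to take the first `n` sentences of each language are all hypothetical, since the text does not say how the extra sentences were selected.

```python
from typing import Dict, List, Sequence

Sentence = List[str]  # a tokenised sentence; a placeholder representation


def build_corpora(
    maltese: List[Sentence],
    others: Dict[str, List[Sentence]],
    sizes: Sequence[int] = (200, 300, 400, 500),
) -> Dict[str, List[Sentence]]:
    """Build a Maltese-only corpus plus one mixed corpus per size."""
    corpora = {"mt_only": list(maltese)}
    for n in sizes:
        mixed = list(maltese)
        for lang in sorted(others):
            # Assumption: take the first n sentences of each language.
            mixed.extend(others[lang][:n])
        corpora[f"mt_plus_{n}"] = mixed
    return corpora


# Dummy data standing in for the real datasets.
maltese = [["mt", str(i)] for i in range(500)]
others = {
    lang: [[lang, str(i)] for i in range(500)]
    for lang in ("en", "it", "es", "nl")
}
corpora = build_corpora(maltese, others)

for name, sentences in corpora.items():
    print(name, len(sentences))
```

Dropping a language from the `others` dictionary (for example Dutch) gives the reduced-language variants of the same setup.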

This project has sought to demonstrate the feasibility of transfer learning in training an NER system for Maltese in a low-resource setting. The experiments covered a large number of setups, totalling 40 distinct experiments. The best results were obtained by three equally successful systems, all from the BiLSTM-CRF experiments. One of these systems was trained on Maltese and 300 extra sentences from each of the other languages, without making use of the ‘Miscellaneous’ tag. The other two systems were trained on Maltese together with 400 and 500 extra sentences, respectively, from each of the other languages, excluding Dutch.