This project explores and develops an autonomous drone-delivery system. The system was implemented within the AirSim simulator [1], in an environment that mimics a real-life urban neighbourhood. Such a system requires a number of processes to ensure safe operation. This project focuses on three of them: navigation, obstacle avoidance and autonomous landing-spot detection.

Navigation works by passing the target coordinates to the flight controller (implemented within AirSim), which then takes control of the drone and flies it towards those coordinates. During the flight, the drone is required to perform obstacle avoidance to ensure that it travels safely.

The approach chosen for obstacle avoidance used a depth map to check whether an obstacle lay directly ahead on the drone’s path. The depth map was produced by the depth-estimation system MonoDepth2 [2], which uses a convolutional neural network (CNN) to estimate depth from a single image. Obstacles identified in the depth map enabled the drone to take any necessary evasive manoeuvres.
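The check described above can be illustrated with a minimal sketch. It is not the project's implementation: the function name `obstacle_ahead`, the 5 m threshold and the size of the central window are all assumptions made for illustration, and the depth map is assumed to be already available as a 2D grid of distances in metres (the raw output of a monocular depth network would typically need scaling first).

```python
from typing import Sequence


def obstacle_ahead(
    depth_map: Sequence[Sequence[float]],
    threshold_m: float = 5.0,    # hypothetical safety distance
    window_frac: float = 0.3,    # hypothetical fraction of the image treated as "directly ahead"
) -> bool:
    """Return True if any pixel in the central window is closer than threshold_m."""
    rows, cols = len(depth_map), len(depth_map[0])

    # Half-sizes of the central window, at least one pixel in each direction.
    half_h = max(1, int(rows * window_frac / 2))
    half_w = max(1, int(cols * window_frac / 2))

    # Window bounds, clamped to the image.
    r0, r1 = max(0, rows // 2 - half_h), min(rows, rows // 2 + half_h)
    c0, c1 = max(0, cols // 2 - half_w), min(cols, cols // 2 + half_w)

    # The nearest surface inside the window decides whether to evade.
    nearest = min(depth_map[r][c] for r in range(r0, r1) for c in range(c0, c1))
    return nearest < threshold_m


if __name__ == "__main__":
    clear = [[20.0] * 10 for _ in range(10)]
    blocked = [row[:] for row in clear]
    blocked[5][5] = 2.0  # something close, in the centre of the view
    print(obstacle_ahead(clear), obstacle_ahead(blocked))
```

Restricting the check to a central window means that objects at the edge of the frame, which the drone would pass by, do not trigger an evasive manoeuvre.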

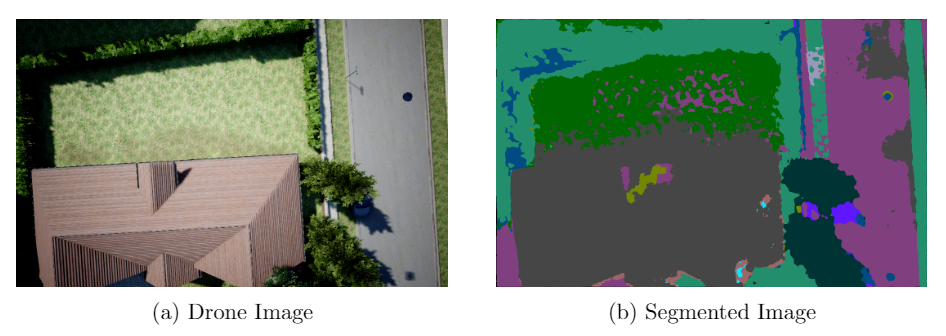

The method created for autonomous landing used a technique known as semantic image segmentation. This technique splits an image into segments, with each segment being given a colour code that corresponds to an object or a material [3]. By applying this process to the image captured by the drone’s bottom-facing camera, surfaces considered safe for landing (e.g., a paved area) could be identified. An example of a segmented image can be seen in Figure 1.
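As a rough sketch of how a segmented image might be searched for a landing spot, the example below scans a grid of class labels for a square patch made up entirely of safe surfaces. The function name `find_landing_patch`, the label names and the patch size are hypothetical. The example also assumes that the colour codes of the segmented image have already been mapped to class names.

```python
from typing import AbstractSet, Optional, Sequence, Tuple

# Hypothetical set of surface classes considered safe to land on.
SAFE_LABELS = frozenset({"paved"})


def find_landing_patch(
    labels: Sequence[Sequence[str]],
    patch: int = 3,                          # hypothetical patch side length, in pixels
    safe: AbstractSet[str] = SAFE_LABELS,
) -> Optional[Tuple[int, int]]:
    """Return the (row, col) centre of the first all-safe patch, or None."""
    rows, cols = len(labels), len(labels[0])

    # Slide a patch-by-patch window over the image, top-left to bottom-right.
    for top in range(rows - patch + 1):
        for left in range(cols - patch + 1):
            all_safe = all(
                labels[r][c] in safe
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            )
            if all_safe:
                return (top + patch // 2, left + patch // 2)
    return None


if __name__ == "__main__":
    grid = [["grass"] * 5 for _ in range(5)]
    for r in range(1, 4):
        for c in range(2, 5):
            grid[r][c] = "paved"
    print(find_landing_patch(grid))
```

Requiring a whole patch to be safe, rather than a single pixel, leaves a margin around the chosen landing point.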

A web application was also developed to simulate a drone delivery app, through which a user could create a delivery and monitor its status. This interface may be seen in Figure 2.

References/Bibliography

[1] S. Shah, D. Dey, C. Lovett, and A. Kapoor, “AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles,” Field and Service Robotics. pp. 621–635, 2018 [Online]. Available: http://dx.doi.org/10.1007/978-3-319-67361-5_40

[2] C. Godard, O. Mac Aodha, M. Firman, and G. Brostow, “Digging Into Self-Supervised Monocular Depth Estimation,” 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 2019 [Online]. Available: http://dx.doi.org/10.1109/iccv.2019.00393

[3] S. Minaee, Y. Y. Boykov, F. Porikli, A. J. Plaza, N. Kehtarnavaz, and D. Terzopoulos, “Image Segmentation Using Deep Learning: A Survey,” IEEE Trans. Pattern Anal. Mach. Intell., vol. PP, Feb. 2021, [Online]. Available: http://dx.doi.org/10.1109/TPAMI.2021.3059968

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Prof. Matthew Montebello