Marine litter has a highly negative impact on the oceans, since plastics do not biodegrade and remain intact for centuries. It is therefore essential to monitor the sea and beaches for litter, and to provide the knowledge needed to develop a long-term policy for eliminating it [1]. Furthermore, the decline in the aesthetic value of beaches would inevitably reduce profits from the tourism sector and raise the cost of cleaning up coastal regions and their surroundings. To avert these outcomes, unmanned aerial vehicles (UAVs) could be used to identify and observe beach litter, since they make it possible to readily monitor an entire beach, while convolutional neural networks (CNNs) combined with the drones could classify the type of litter detected.

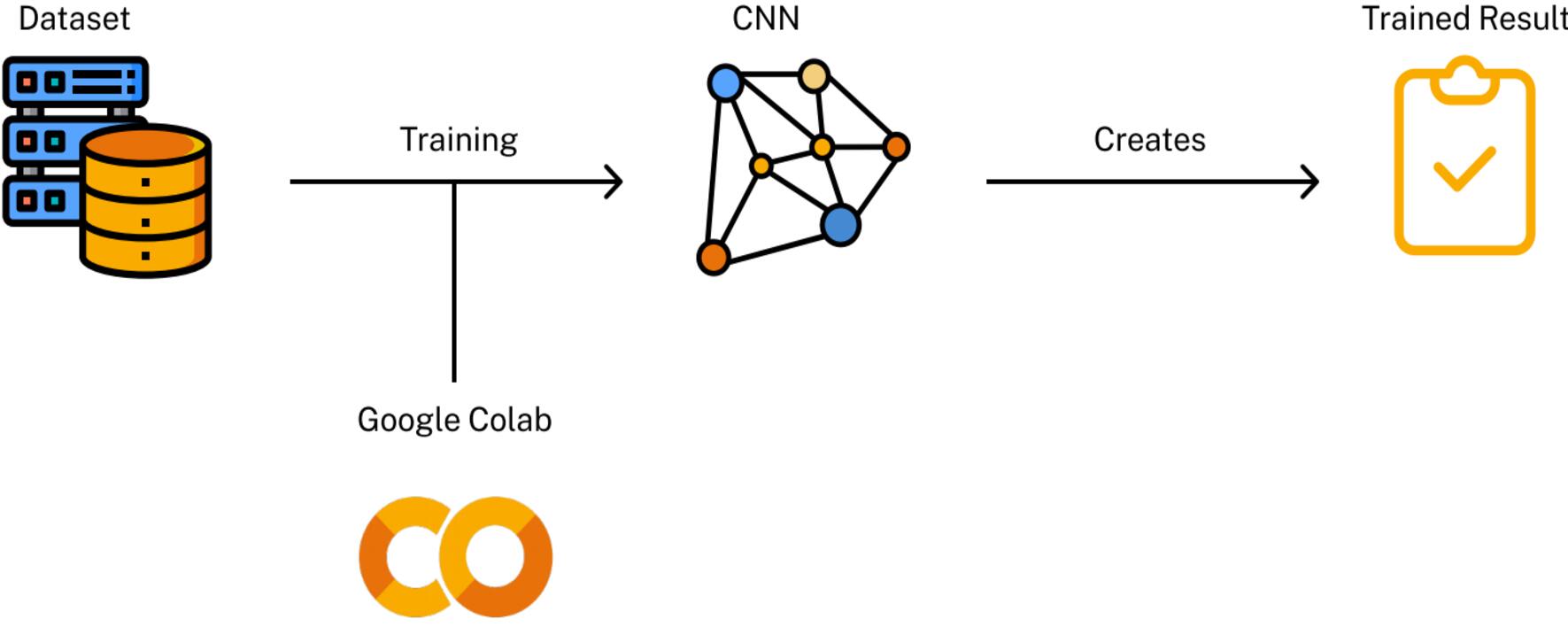

This study sought to evaluate approaches to litter-object detection using highly efficient models. Object detection refers to estimating the locations of objects in each image and labelling them with rectangular bounding boxes [2]. The solution began with gathering a custom dataset of different types of litter, which was then used for training each model, as may be seen in Figure 1. The dataset covered four types of litter: aluminium cans, glass bottles, PET (polyethylene terephthalate) bottles, and HDPE (high-density polyethylene) plastic bottles.
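
As an illustration of how a rectangular bounding box for one of these four litter types might be stored for training, the sketch below converts a pixel-coordinate box into the normalised `class x_center y_center width height` line used by Darknet-style YOLO label files. The class order, the `Box` type and the `to_yolo_line` helper are assumptions made for this example; the study does not state how its annotations were encoded.

```python
from dataclasses import dataclass

# Hypothetical class order for illustration; the study does not specify one.
CLASSES = ["aluminium_can", "glass_bottle", "pet_bottle", "hdpe_bottle"]


@dataclass
class Box:
    """A labelled bounding box in pixel coordinates (top-left, bottom-right)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_yolo_line(box: Box, img_w: int, img_h: int) -> str:
    """Return one label line: class id, then box centre and size,
    each normalised to the range 0..1 by the image dimensions."""
    class_id = CLASSES.index(box.label)
    x_center = (box.x_min + box.x_max) / 2 / img_w
    y_center = (box.y_min + box.y_max) / 2 / img_h
    width = (box.x_max - box.x_min) / img_w
    height = (box.y_max - box.y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    # A PET bottle annotated in a 400x500 pixel image.
    print(to_yolo_line(Box("pet_bottle", 100, 50, 300, 250), 400, 500))
```

Normalising by the image size keeps the labels valid when images are resized before training.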

Once each object-detection model had been trained satisfactorily, the results were compared using widely known standards to determine which model was the most accurate. A Tello EDU drone was then used to capture video footage, on which the detections were made. Finally, the trained model was loaded into a primary system that controlled the drone and, in turn, received the video feed captured by it.
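
The study does not name the standards it used for the comparison. One measure that commonly underlies detection benchmarks is intersection over union (IoU), which scores how well a predicted box overlaps a ground-truth box. The sketch below is a minimal version, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples; the `iou` function name is hypothetical.

```python
from typing import Tuple

BoxT = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: BoxT, b: BoxT) -> float:
    """Intersection over union of two axis-aligned boxes, in the range 0..1."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    # Guard against degenerate boxes with zero area.
    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (0, 0, 2, 2)
    ground_truth = (1, 1, 3, 3)
    print(iou(predicted, ground_truth))
```

A detection is typically counted as correct when its IoU with a ground-truth box of the same class exceeds a chosen threshold, and accuracy figures for competing models are then aggregated from those counts.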

This experiment achieved satisfactory results, as both of the implemented models were efficient. However, the Tiny-YOLOv3 model proved more useful: it performed better on video because it is faster and demands less of the hardware, occupying less memory [3]. The project could be extended in the future by incorporating more litter types.

References/Bibliography

[1] A. Deidun, A. Gauci, S. Lagorio, and F. Galgani, “Optimising beached litter monitoring protocols through aerial imagery,” Marine Pollution Bulletin, vol. 131, pp. 212–217, 2018.

[2] Z. Zhao, P. Zheng, S. Xu, and X. Wu, “Object detection with deep learning: A review,” IEEE Transactions, 2019.

[3] J. Redmon and A. Farhadi, “YOLOv3: An incremental improvement,” April 2018.

Course: B.Sc. IT (Hons.) Software Development

Supervisor: Prof. Matthew Montebello

Co-supervisor: Dr Conrad Attard