DFA learning is the process of identifying a minimal deterministic finite-state automaton (DFA) from a training set of strings. The training set is comprised of positive and negative strings, which respectively do and do not belong to the regular language recognised by the target automaton.

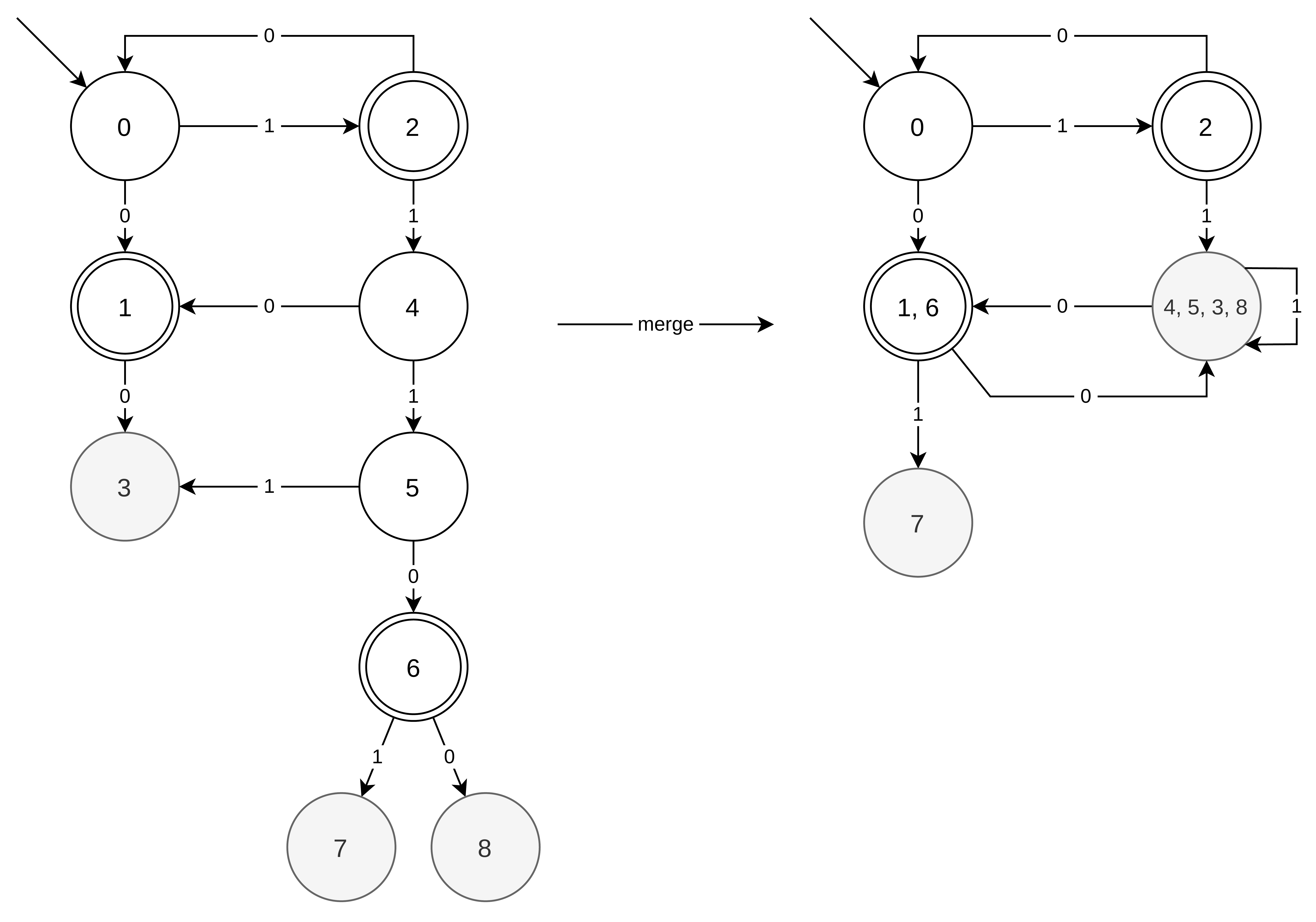

This problem is NP-hard and is typically accomplished by means of state-merging algorithms. The algorithms in this family all depend on the deterministic state-merging operation, which combines two states in a DFA to create a new, smaller automaton. These algorithms could be broadly classified as non-monotonic (e.g., SAGE, EdBeam, DFA SAT, automata teams) or monotonic (e.g., EDSM, blue-fringe, RPNI) which respectively do and do not allow backtracking. When running, these algorithms would perform many millions of merges with non-monotonic algorithms performing significantly more merges than monotonic algorithms. In both cases the deterministic state merging operation is a significant bottleneck.

This project was motivated by the need to alleviate this bottleneck through a faster state-merging operation. Achieving this would assist researchers in dealing with harder problems, run more sophisticated heuristics and perform more meaningful analyses by running their tests on a larger, more statistically significant pools of problems.

With the main goal of identifying the fastest implementation, this project examined a number of implementations of the state-merging operation with state-of-the-art DFA learning algorithms on a large pool of problems. To achieve this, existing implementations of the state-merging operation, such as FlexFringe and StateChum, were investigated to evaluate the typical state merging implementations. This involved studying the extent to which it might be possible to take advantage of concurrency to perform many merges in parallel, and building a novel GPU implementation of the merge operation. Additionally, the process entailed using deep profiling and various optimisation techniques related to memory-access patterns to further enhance performance. Finally, an equivalent solution was developed in multiple programming languages to minimise the falsely perceived performance increase which may be due to a better optimising compiler.

The implementation was evaluated and benchmarked on a large pool of Abbadingo-style problems. Abbadingo One is a DFA learning competition that took place in 1997 and was organised with the goal of finding better DFA learning algorithms. These problems are suitable for testing because the typical algorithms used on Abbadingo problems tend to be simple to implement and rely heavily on deterministic merge performance. The motivation behind this project was not to seek better solutions than those submitted by the winners of the competition, but to find their solutions faster.

At evaluation stage, the implementation presented in this project proved to be significantly faster than a naïve one. The operation was tested by using three DFA learning frameworks, namely: windowed breadth-first search, exhaustive search, and blue-fringe. The preliminary results indicated that the improved state-merging operation was three times as fast as the baseline.

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr Kristian Guillaumier

Co-supervisor: Prof. John M. Abela