Nowadays, video games are among the most popular forms of entertainment on the market, not only as games in their own right but also for the competitive scenes that grow around them. Games such as Dota 2, League of Legends, Rocket League, Counter-Strike: Global Offensive, Street Fighter V and StarCraft II are played in e-sports competitions with prize pools that can run into millions.

Fighting games such as Street Fighter, Mortal Kombat and Tekken form one of the most competitive genres, with players spending hours practising combos and playing online matches against each other.

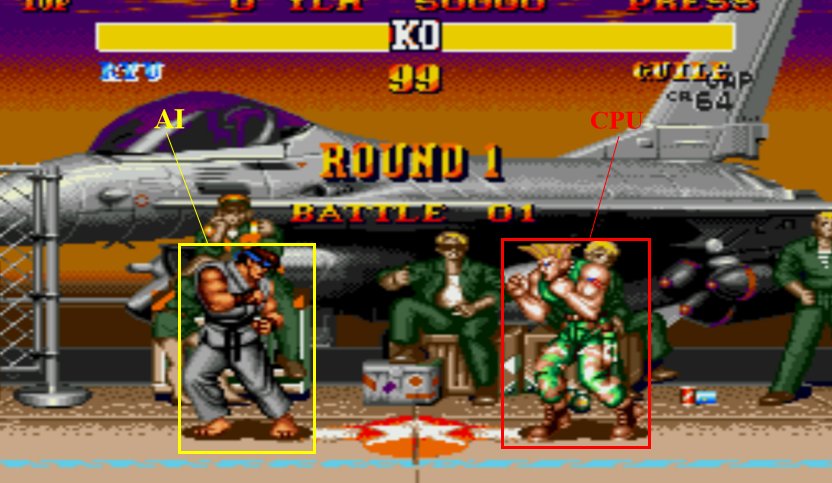

Artificial intelligence (AI) has frequently been used to beat the single-player campaigns offered by video games. The main aim of this project was to create an AI agent capable of beating the campaign of Street Fighter II, a retro 2D fighting game. The agent was then set to fight against itself so that it could improve with each cycle. Such agents could eventually become harder to beat than the bots built into the game, and professional players could use them as new, formidable opponents to practise against and improve their own play.

The AI agents could also be programmed to continue training while fighting these professional players. Training was carried out through a custom reward function that gives the agent a large reward for winning rounds and dealing damage and a small reward for any points scored, while penalising it for taking damage and losing rounds. The idea behind this set-up is to create a well-balanced agent: one that is not so aggressive that it takes heavy damage, yet proactive enough to keep trying to hit its opponent and so maximise its reward. Training uses both images of the current game state and values read from RAM, such as the agent's health, the enemy's health and the score.
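The reward scheme described above can be illustrated with a minimal sketch that computes a per-step reward from two consecutive RAM snapshots. The key names (`health`, `enemy_health`, `score`, `rounds_won`, `enemy_rounds_won`), the `RewardWeights` class, the `shaped_reward` function and all weight values are hypothetical assumptions for illustration, not the project's actual variables or tuning; the only aspects taken from the text are the large terms for rounds and damage, the small term for score, and the penalties for damage taken and rounds lost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    # Hypothetical weights: large terms for rounds and damage, a small term for score.
    damage_dealt: float = 1.0
    damage_taken: float = 1.0
    round_won: float = 100.0
    round_lost: float = 100.0
    score: float = 0.01


def shaped_reward(prev: dict, curr: dict, w: RewardWeights = RewardWeights()) -> float:
    """Reward for one environment step, computed from two consecutive RAM snapshots.

    The dictionary keys are assumed names for values read from the game's RAM.
    """
    # Clip at zero so that health refilling at the start of a round is not
    # counted as damage dealt or taken.
    damage_dealt = max(0, prev["enemy_health"] - curr["enemy_health"])
    damage_taken = max(0, prev["health"] - curr["health"])
    rounds_won = curr["rounds_won"] - prev["rounds_won"]
    rounds_lost = curr["enemy_rounds_won"] - prev["enemy_rounds_won"]
    score_gain = max(0, curr["score"] - prev["score"])

    # Rewards for hitting the opponent and winning; penalties for being hit and losing.
    return (w.damage_dealt * damage_dealt
            - w.damage_taken * damage_taken
            + w.round_won * rounds_won
            - w.round_lost * rounds_lost
            + w.score * score_gain)


if __name__ == "__main__":
    before = {"health": 176, "enemy_health": 176, "score": 0,
              "rounds_won": 0, "enemy_rounds_won": 0}
    after = {"health": 170, "enemy_health": 150, "score": 1000,
             "rounds_won": 0, "enemy_rounds_won": 0}
    print(shaped_reward(before, after))
```

Balancing `damage_dealt` against `damage_taken` is what discourages reckless play: an exchange of hits only pays off if the agent deals more damage than it receives, while the small score term adds a little extra incentive to stay on the offensive.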

Considering the complexity of the environment in which it operates, the agent performed satisfactorily and was able to beat a reasonable number of levels with a small amount of training. It tends to handle new characters more effectively when their move sets are similar to that of the character it was trained against, but struggles when facing characters whose move sets differ drastically. This could be addressed by training against each character separately, but doing so would defeat the purpose of building an adaptable agent.

Tests were also carried out to check the adaptability (generalisation) of these agents, that is, how well they performed against characters they had not previously encountered. This matters because the agent, which plays as a single character, was trained against only two opponents: the first character it faces when playing through the campaign and, during self-play, its own character.

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr Vanessa Camilleri