Claim verification is the task of determining the veracity of a claim. Typical artificial intelligence approaches attempt to support or refute a claim by comparing it either to authoritative sources (such as databases of previously fact-checked claims) or to unstructured information (such as the Wikipedia corpus and/or peer-reviewed publications). However, these approaches face many semantic challenges, as minor changes in wording can lead to inaccurate results.

Another difficulty, encountered by human fact-checkers as well as by automatic systems, is that many claims do not have a single, straightforward answer. The truthfulness of a claim is usually rated on a 5- or 6-point scale, where claims are not necessarily ‘True’ or ‘False’, but may also be ‘Half true’, ‘Mostly true’ or ‘Mostly false’. In fact, research has shown that the two most popular human fact-checkers, PolitiFact and Fact Checker, agree on only 49 out of 77 statements [1].

This project proposes a novel approach to tackling the aforementioned issues in claim analysis, with the caveat that only a subset of claims can be analysed. For the purpose of this research, these are referred to as ‘statistically verifiable claims’ (SVCs). Such claims are considered statistically verifiable because they can be verified through statistical analysis, rather than by comparing the claim to other textual sources (a challenging task for automatic systems).

An SVC is made up of variables and events. Variables are quantitative entities that change over time, such as population, average income and the unemployment rate. Events, on the other hand, are either changes in variables (e.g., an increase in a variable) or relationships between variables (e.g., a causal event, where a change in one variable could bring about a change in another). For example, The Guardian’s claim that “Global warming has long been blamed for the huge rise in the world’s jellyfish population” is a statistically verifiable claim: it asserts a causal relationship between two variables, “global warming” and “the world’s jellyfish population”, and describes a change event (a rise) in the latter.
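The decomposition of an SVC into variables and events can be pictured as a small data structure. The Python sketch below is an illustrative assumption, not the project's actual representation: the class names (`Variable`, `ChangeEvent`, `CausalEvent`, `StatisticallyVerifiableClaim`) and the `Direction` labels are hypothetical, and the instance at the end encodes The Guardian claim above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Union


class Direction(Enum):
    INCREASE = "increase"
    DECREASE = "decrease"


@dataclass
class Variable:
    """A quantitative entity that changes over time, e.g. population."""
    name: str


@dataclass
class ChangeEvent:
    """A change in a single variable, e.g. an increase."""
    variable: Variable
    direction: Direction


@dataclass
class CausalEvent:
    """A change in `cause` could bring about a change in `effect`."""
    cause: Variable
    effect: Variable


Event = Union[ChangeEvent, CausalEvent]


@dataclass
class StatisticallyVerifiableClaim:
    text: str
    variables: List[Variable] = field(default_factory=list)
    events: List[Event] = field(default_factory=list)


# The Guardian example from the text.
warming = Variable("global warming")
jellyfish = Variable("the world's jellyfish population")
claim = StatisticallyVerifiableClaim(
    text=("Global warming has long been blamed for the huge rise "
          "in the world's jellyfish population"),
    variables=[warming, jellyfish],
    events=[
        ChangeEvent(jellyfish, Direction.INCREASE),  # "the huge rise"
        CausalEvent(cause=warming, effect=jellyfish),
    ],
)
```

Keeping change events and causal events as separate types mirrors the two kinds of event described above, so later stages can dispatch on the event type.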

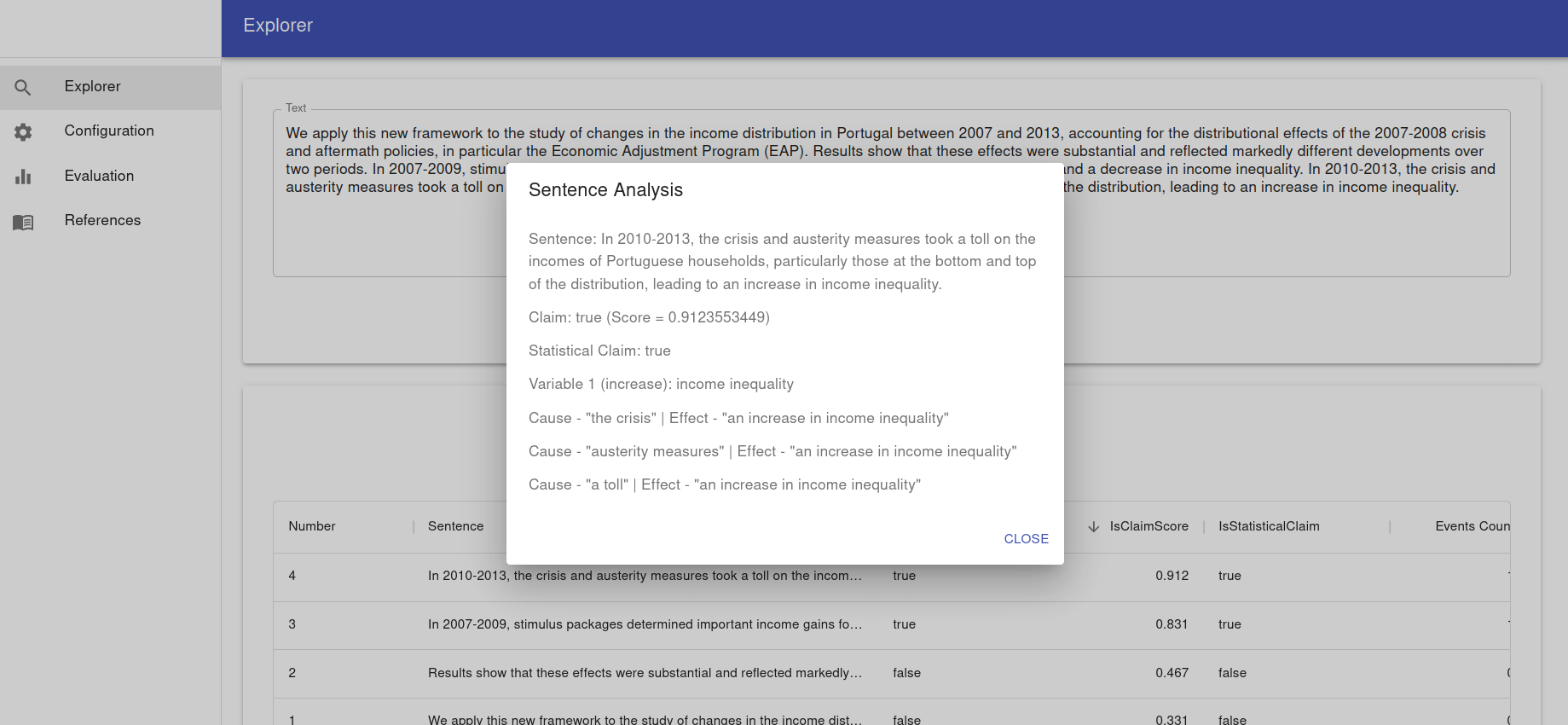

A system was developed which, given some text, first identifies the claims and then analyses each one to determine whether it is statistically verifiable. If so, the variables are extracted along with information about them (e.g., whether the claim indicates an increase or a decrease). All causal relationships are then extracted and, using an ensemble system, the cause and effect events are mapped to the variables, establishing relationships between them.
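Two of the steps above, labelling a variable's direction and mapping cause/effect spans to variables by ensemble, can be sketched in Python as a toy under stated assumptions. Claim detection and causal-relation extraction are assumed to have already produced the cause and effect text spans. The cue word lists, the three matchers (`match_substring`, `match_jaccard`, `match_fuzzy`) and the majority-vote rule in `ensemble_map` are hypothetical stand-ins chosen for illustration; the members of the project's actual ensemble are not described here.

```python
import re
from collections import Counter
from difflib import SequenceMatcher
from typing import Callable, List, Optional, Set

# Hypothetical cue lexicons used to label a variable mention's direction.
INCREASE_CUES = {"rise", "increase", "growth", "grew", "higher", "surge"}
DECREASE_CUES = {"fall", "decrease", "decline", "drop", "lower", "shrink"}


def _tokens(text: str) -> Set[str]:
    """Lower-cased word tokens (apostrophes kept, e.g. "world's")."""
    return set(re.findall(r"[a-z']+", text.lower()))


def detect_direction(phrase: str) -> str:
    """Label a mention as 'increase', 'decrease' or 'unspecified' via cue words."""
    tokens = _tokens(phrase)
    if tokens & INCREASE_CUES:
        return "increase"
    if tokens & DECREASE_CUES:
        return "decrease"
    return "unspecified"


# Three simple matchers; each proposes the variable that best fits a span.
def match_substring(span: str, variables: List[str]) -> Optional[str]:
    hits = [v for v in variables if v.lower() in span.lower()]
    return max(hits, key=len) if hits else None


def match_jaccard(span: str, variables: List[str]) -> Optional[str]:
    def score(v: str) -> float:
        a, b = _tokens(span), _tokens(v)
        return len(a & b) / len(a | b) if a | b else 0.0
    best = max(variables, key=score)
    return best if score(best) > 0 else None


def match_fuzzy(span: str, variables: List[str]) -> Optional[str]:
    return max(variables,
               key=lambda v: SequenceMatcher(None, span.lower(), v.lower()).ratio())


MATCHERS: List[Callable[[str, List[str]], Optional[str]]] = [
    match_substring, match_jaccard, match_fuzzy,
]


def ensemble_map(span: str, variables: List[str]) -> Optional[str]:
    """Map a cause/effect span to a variable by majority vote of the matchers."""
    if not variables:
        return None
    votes = Counter(m(span, variables) for m in MATCHERS)
    votes.pop(None, None)  # abstentions do not count as votes
    if not votes:
        return None
    winner, count = votes.most_common(1)[0]
    return winner if count > len(MATCHERS) // 2 else None


variables = ["global warming", "the world's jellyfish population"]
effect = "the huge rise in the world's jellyfish population"
print(ensemble_map("Global warming", variables))  # global warming
print(ensemble_map(effect, variables))            # the world's jellyfish population
print(detect_direction(effect))                   # increase
```

Requiring a strict majority means a span is left unmapped when the matchers disagree, trading recall for fewer wrong links between events and variables.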

A further component was then used to obtain time series data for the variables identified in the claim. The Eurostat database was used for this task, as it offers a large collection of statistics covering a broad range of aspects of EU Member States. Time series data for each variable allows a statistical analysis to determine whether the data supports or refutes the original claim. In this way, each SVC receives a binary verdict (i.e., ‘True’ or ‘False’), with no middle ground.

References/Bibliography

[1] C. Lim, “Checking how fact-checkers check,” *Research and Politics*, vol. 5, Jul. 2018, SAGE Publications.

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr Joel Azzopardi