Through his PhD, JOHN SOLER is developing an algorithm that can automatically recognise the daily activities of dementia patients and share important information with their caregivers. If successful, it could be plugged into one of the Faculty’s most ambitious projects to date.

For the past four years, Saint Vincent de Paul (SVDP) residence has been at the centre of one of the Faculty of ICT’s biggest projects: the PEM (pervasive electronic monitoring) in Healthcare research project, which aims to improve the lives of people with dementia and reduce the workload on their caregivers. To do this, researchers have been creating an algorithm that can, in layman’s terms, understand what a person is doing by crunching the data fed to it by on-body and environmental sensors, and then send instant reports to caregivers if anything goes amiss. The process is a long one, involving many steps and research specialities, and John Soler’s PhD thesis will tackle one of its most crucial parts.

“The idea behind the PEM project isn’t just to understand dementia better, but to give those who live with it more freedom to move about with added safety,” John explains. “Using machine learning, this system will be able to predict danger and inform caregivers about a variety of scenarios, including whether daily meals have been eaten, whether the patient is wandering, and whether they have fallen.”

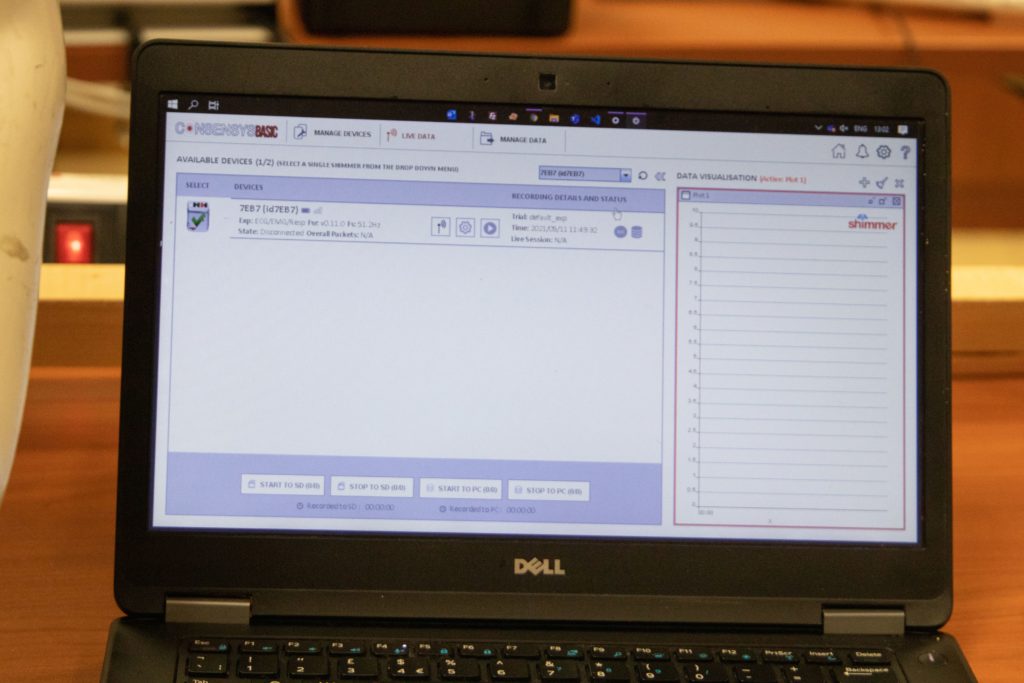

Such a system may sound straightforward, but it takes a lot of work just to get it off the ground. For years, the team behind it has been working to identify the best types of sensor for the job, and has worked with volunteers to simulate the activities people with dementia typically carry out, such as walking aimlessly (wandering), walking with purpose, standing, lying down, sitting down, and a whole host of other actions.

As John explains, the data collected can be displayed much like an Excel spreadsheet, with each row holding the readings from the different sensors over a very short interval of time. To give an idea of the scale involved, a single fall could generate 1,200 data points.

“So the objective now is to develop an algorithm that can fuse the data from all the sensors and make sense of it automatically, rather than by hand. The algorithm I’m working on will extract patterns from the sensor data that the PEM research team has collected, and learn to recognise human activities accurately as they happen, in real time.”
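The article does not describe how John’s algorithm works internally, so what follows is only a generic illustration of one common way to turn rows of sensor readings into activity labels: slice the stream into fixed-size windows, summarise each window with a couple of features, and give it the label of the nearest learned class average (a nearest-centroid classifier). The three-axis accelerometer format, the function names, the window size and the “lying”/“walking” labels are all hypothetical assumptions made for this sketch, not details of the PEM system.

```python
import math
import statistics

# Hypothetical input format: each reading is a tuple (ax, ay, az) of
# accelerometer values in units of g. This is an assumption for the sketch,
# not the PEM project's actual data layout.


def sliding_windows(rows, size, step):
    """Yield fixed-size, possibly overlapping windows over a list of readings."""
    for start in range(0, len(rows) - size + 1, step):
        yield rows[start:start + size]


def window_features(window):
    """Summarise a window as (mean, spread) of acceleration magnitude."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return (statistics.fmean(magnitudes), statistics.pstdev(magnitudes))


def train_centroids(labelled_windows):
    """Nearest-centroid training: average the feature vectors of each label."""
    grouped = {}
    for label, window in labelled_windows:
        grouped.setdefault(label, []).append(window_features(window))
    return {
        label: tuple(statistics.fmean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(centroids, window):
    """Return the label whose centroid lies closest to this window's features."""
    features = window_features(window)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    # Made-up training examples (hypothetical): lying still vs. walking.
    lying = [(0.0, 0.0, 1.0)] * 8
    walking = [(0.0, 0.0, 1.3), (0.0, 0.0, 0.7)] * 4
    centroids = train_centroids([("lying", lying), ("walking", walking)])

    # A made-up stream of readings: still at first, then moving.
    stream = [(0.0, 0.0, 1.0)] * 8 + [(0.0, 0.0, 1.25), (0.0, 0.0, 0.75)] * 4
    for window in sliding_windows(stream, size=8, step=8):
        print(classify(centroids, window))  # prints "lying", then "walking"
```

Classifying each new window as it arrives is what lets this kind of approach label activity while it is happening. A system like the one described would likely need far more sensors, richer features and a stronger machine-learning model; the nearest-centroid rule is used here only because it fits in a few lines.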

Of course, this is just one building block in an ambitious project, yet it brings the project one step closer to its final aim: being tested in real-life scenarios and rolled out into the community and care residences. That will take years, but the benefits could be huge.

“A system like this could allow people with dementia to live at home and in the community for longer, without the need for, or the expense of, 24/7 help,” John says. “It could also give caregivers at institutions like SVDP more tools to look after their patients, and reduce response times when patients fall, among other things.”

Beyond the technology and algorithms, this project is deeply human at its core, and it creates a framework that could be used in many situations where people require assistance.