This project set out to determine the extent to which music genre recognition (MGR) could be performed by evaluating different machine learning algorithms. The algorithms were applied to a

curated benchmark of audio tracks with corresponding genre labels, and compared to similar models documented in the literature.

Nowadays, we rely greatly on the applications we use for listening to music to discover (sometimes new) music that matches our taste. In the process, we allow algorithms to introduce us to new music genres that may be of interest to us. In view of this, it is especially important that these applications would be able to achieve a good understanding of our listening habits, taste in genre and connections with the performers, among other factors.

A musical piece has various characteristics by which it could be described. Hence, songs with similar characteristics could be organised together in a single class, referred to as a musical genre.

Although defining a genre is itself subjective, the musical genre is one of the most important descriptors of the songs themselves. The boundaries between one musical genre and another are not standard and are rather based on user perception. In fact, the genre labels that were chosen as targets for the training and testing of the algorithms used were extracted from user-submitted, albeit curated, labels precisely because there currently exists no official taxonomy of genres.

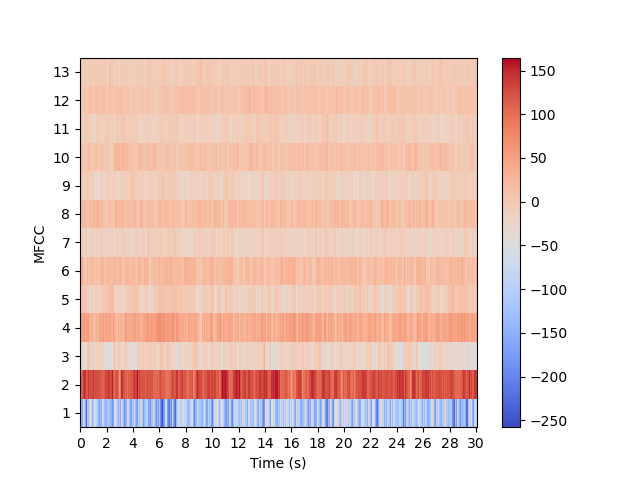

There are countless features that could be extracted from a digital version of an audio signal, such as the spectral centroid, zero-crossing rate and Mel Frequency Cepstral Coefficients (MFCCs), and each feature would be able to describe in a different way some property of the signal.

For this project, 13 MFCCs were extracted out of each audio track, as these coefficients were found to be among the best features to approximate the human auditory system, in that they can describe timbral features, as well as features related to frequency, rhythm and amplitude. Subsequently, the MFCC values were inputted into the different models used to perform MGR, which could predict the correct genre/s of a music track from among popular genre labels.

There are also a variety of machine learning models that could perform MGR. This project implemented and evaluated three among the most popular models, namely: an artificial neural network (ANN), a convolutional neural network (CNN) and gradient boosting machines (GBMs).

The ANN uses a model that is very loosely based on the human nervous system and is the earliest form of artificial intelligence to be developed, making it a very popular choice that is also widely used in this field. The CNN is a deep learning model that is used to classify images and similar three-dimensional data. The GBMs are a relatively modern model based on an ensemble of models offering a flexible application scope, and studies have shown that it is a promising technique for MGR.

Student: Jamie Buttigieg Vella

Course: B.Sc. IT (Hons.) Computing and Business

Supervisor: Mr Joseph Bonello