The main motivation for this study is to improve the quality of care given to mobility-restricted individuals in environments such as hospitals and other care facilities, for example care homes for the elderly. In this context, smart wheelchairs can provide greater mobility and independence for wheelchair-dependent persons and reduce the manpower needed to transport people indoors in care settings. Such technologies require automated indoor navigation systems. This paper studies the feasibility and efficacy of such a system.

In a real-world application of the developed system, a smart wheelchair or a similar device would be used. However, due to the limitations of the project, a simple robotic vehicle was constructed instead, using a microcomputer together with a series of plug-and-play components. The system developed focuses on four main aspects: indoor location, indoor navigation, obstacle detection and, lastly, obstacle avoidance.

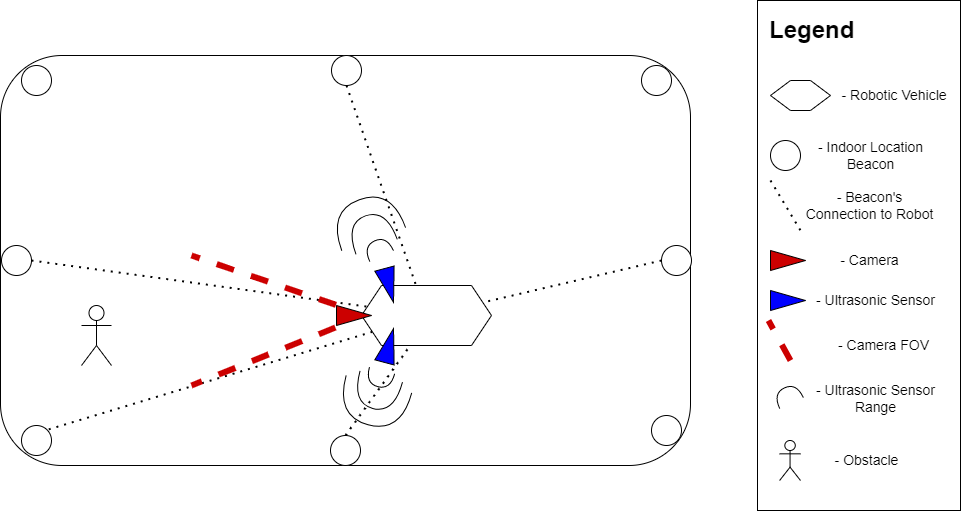

The Global Positioning System (GPS) is not suitable for indoor environments, because the building structure interferes with the signal and drastically reduces its accuracy. To circumvent this, an alternative technology such as Wi-Fi or Bluetooth is needed to serve as indoor location beacons. The sole purpose of these beacons is to emit a signal carrying information about the location of the beacon. The microcomputer receives these signals and computes its own location relative to the beacons in its vicinity. The indoor navigation module uses the location module to calculate the direction and distance that the robot has to travel, given its own position and the positions of the beacons in the indoor environment. While the robot travels from the starting point to the end point of a journey, the obstacle detection and avoidance modules constantly examine the robot’s surroundings in order to reduce the chance of collision.
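The location and navigation steps described above can be illustrated with a minimal sketch. It is not the method used in the project: it assumes that beacon signal strength (RSSI) is converted to a range with a log-distance path-loss model, that the position is then found by trilateration from three beacons at known 2D coordinates, and that the heading is measured anticlockwise from the x-axis. All function names, the reference power of -59 dBm at 1 m and the path-loss exponent of 2.0 are hypothetical values chosen for illustration.

```python
import math


def rssi_to_distance(rssi_dbm, tx_power_dbm=-59.0, path_loss_exponent=2.0):
    """Estimate range in metres from RSSI (assumed log-distance path-loss model).

    tx_power_dbm is the assumed RSSI at 1 m; both defaults are illustrative.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


def trilaterate(beacons, distances):
    """Estimate (x, y) from three beacon positions and the ranges to them."""
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    # Subtracting circle 1 from circles 2 and 3 leaves two linear equations.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("beacons are collinear; position is ambiguous")
    # Cramer's rule for the 2x2 system.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


def heading_and_distance(position, target):
    """Return (bearing in degrees from the x-axis, distance) to the target."""
    dx = target[0] - position[0]
    dy = target[1] - position[1]
    return math.degrees(math.atan2(dy, dx)), math.hypot(dx, dy)


if __name__ == "__main__":
    beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]  # hypothetical layout, metres
    ranges = [math.sqrt(5), math.sqrt(13), math.sqrt(5)]
    position = trilaterate(beacons, ranges)
    print(position, heading_and_distance(position, (4.0, 6.0)))
```

In practice, RSSI readings are noisy, so a real system would likely smooth the readings or use more than three beacons with a least-squares fit; the sketch only shows the geometry.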

The obstacle detection module is split into two parts. The first and most important part uses a simple USB camera to ‘see’ whatever is in front of the robot, while the second consists of ultrasonic sensors on either side of the robot, which cover the camera’s blind spots. The camera sub-module applies filters such as the Chambolle-Pock denoising filter and image inversion in order to produce a Binary Object Map (BOM). The obstacle avoidance module then uses the BOM to detect the edges of any objects in the robot’s path and to avoid them safely, by calculating the best change of angle needed to prevent a collision. The ultrasonic sensors detect objects that appear outside the camera’s field of vision and ‘warn’ the robot to slow down until the object is either in the camera’s view or has left the sensors’ detection zone.
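The avoidance logic described above can be sketched as follows. This is an illustrative assumption rather than the project's implementation: the BOM is taken to be a list of rows of 0 (free) and 1 (obstacle), the "best angle" is assumed to point at the centre of the widest obstacle-free band of columns, pixel offsets are mapped linearly onto an assumed 60 degree horizontal field of view, and the ultrasonic warning is modelled as halving the speed below an assumed 50 cm threshold. The denoising and inversion steps that produce the BOM are not shown, and all names and constants are hypothetical.

```python
def widest_free_gap(bom):
    """Return (first, last) column of the widest run of obstacle-free columns.

    Returns None when every column contains at least one obstacle pixel.
    """
    width = len(bom[0])
    blocked = [any(row[c] for row in bom) for c in range(width)]
    best = None
    start = None
    # Iterate one step past the last column so a trailing gap is closed.
    for c in range(width + 1):
        free = c < width and not blocked[c]
        if free and start is None:
            start = c
        elif not free and start is not None:
            if best is None or (c - start) > (best[1] - best[0] + 1):
                best = (start, c - 1)
            start = None
    return best


def steering_angle(bom, horizontal_fov_deg=60.0):
    """Angle change in degrees towards the widest gap (positive = right).

    Uses a linear pixel-to-angle approximation; returns None if no gap exists.
    """
    gap = widest_free_gap(bom)
    if gap is None:
        return None
    width = len(bom[0])
    gap_centre = (gap[0] + gap[1]) / 2.0
    image_centre = (width - 1) / 2.0
    return (gap_centre - image_centre) / width * horizontal_fov_deg


def limited_speed(cruise_speed, left_cm, right_cm, warn_cm=50.0, slow_factor=0.5):
    """Slow down while either side sensor reports an object within warn_cm."""
    if min(left_cm, right_cm) < warn_cm:
        return cruise_speed * slow_factor
    return cruise_speed


if __name__ == "__main__":
    bom = [
        [0, 0, 1, 1, 0, 0, 0, 0],
        [0, 0, 1, 1, 0, 0, 0, 0],
    ]
    print(steering_angle(bom), limited_speed(1.0, 30.0, 200.0))
```

Steering towards the widest gap is only one possible policy; a real controller would also need to account for the robot's width and the distance to the obstacle.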

A bus stopped at a bus stop in the SUMO simulator

Student: Andrew Magri

Course: B.Sc. IT (Hons.) Software Development

Supervisor: Dr Michel Camilleri