Artificial intelligence (AI) can be applied across a wide variety of fields. This final-year project (FYP) focused on creating a program centred on dynamically generated landscapes, in which each user creates a personalised landscape based on their position and the gestures they perform. This is accomplished using AI networks, terrain generation software and a depth-sensing camera.

The camera provides both a depth feed and a colour feed, which are used in different ways. The colour feed is used primarily for pose estimation by the AI networks.

One of the AI networks estimates the positions of different body parts. Combining this information with the depth feed from the camera gives the user’s position in relation to the 3D world.
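As a rough illustration of how a 2D keypoint and a depth reading can be combined into a 3D position, the sketch below uses the standard pinhole camera model. The `Intrinsics` class, the `deproject` function and the numeric intrinsics are hypothetical values chosen for the example; they are not taken from the project or from any particular camera SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters (hypothetical values are used below)."""
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def deproject(u: float, v: float, depth_m: float, k: Intrinsics):
    """Map pixel (u, v) with a depth in metres to a camera-space 3D point."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed intrinsics for a 640x480 stream; a real camera reports its own.
K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

# A keypoint at the image centre, 2 m away, lies on the optical axis.
centre_point = deproject(320.0, 240.0, 2.0, K)
```

A keypoint from the pose network would supply `u` and `v`, and the depth feed would supply `depth_m` at that pixel. Mapping the resulting camera-space point into the 3D world would need a further transform that is not shown here.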

At the same time, the colour feed is passed to a second AI network that provides more accurate positioning of the user’s hands. This data is then passed to a trained neural network model that detects the different gestures performed by the user, enabling gesture recognition. Each gesture has an action associated with it: for example, if the user pushes down with an open palm, a small mountain appears within the 3D world.
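The link between a recognised gesture and its action can be pictured as a simple lookup table. The sketch below is only an assumption about how such a mapping might look: the gesture label `"open_palm_push_down"`, the `raise_mountain` function and the list-of-lists heightmap are all hypothetical stand-ins for the project's classifier output and terrain software.

```python
from typing import Callable, Dict, List

Heightmap = List[List[float]]


def raise_mountain(terrain: Heightmap, row: int, col: int,
                   height: float = 1.0, radius: int = 2) -> None:
    """Add a small pyramid-shaped bump centred on (row, col), in place."""
    for r in range(max(0, row - radius), min(len(terrain), row + radius + 1)):
        for c in range(max(0, col - radius), min(len(terrain[0]), col + radius + 1)):
            # Chebyshev distance gives square contour rings around the peak.
            dist = max(abs(r - row), abs(c - col))
            terrain[r][c] += height * (1 - dist / (radius + 1))


# Hypothetical gesture labels mapped to terrain actions.
GESTURE_ACTIONS: Dict[str, Callable[[Heightmap, int, int], None]] = {
    "open_palm_push_down": raise_mountain,
}


def apply_gesture(label: str, terrain: Heightmap, row: int, col: int) -> bool:
    """Run the action for a gesture label; return False if none is registered."""
    action = GESTURE_ACTIONS.get(label)
    if action is None:
        return False
    action(terrain, row, col)
    return True
```

In this sketch the user's position selects the `(row, col)` cell, and the classifier's predicted label selects the action. Unrecognised labels are ignored rather than raising an error, so a misclassification does not interrupt the session.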

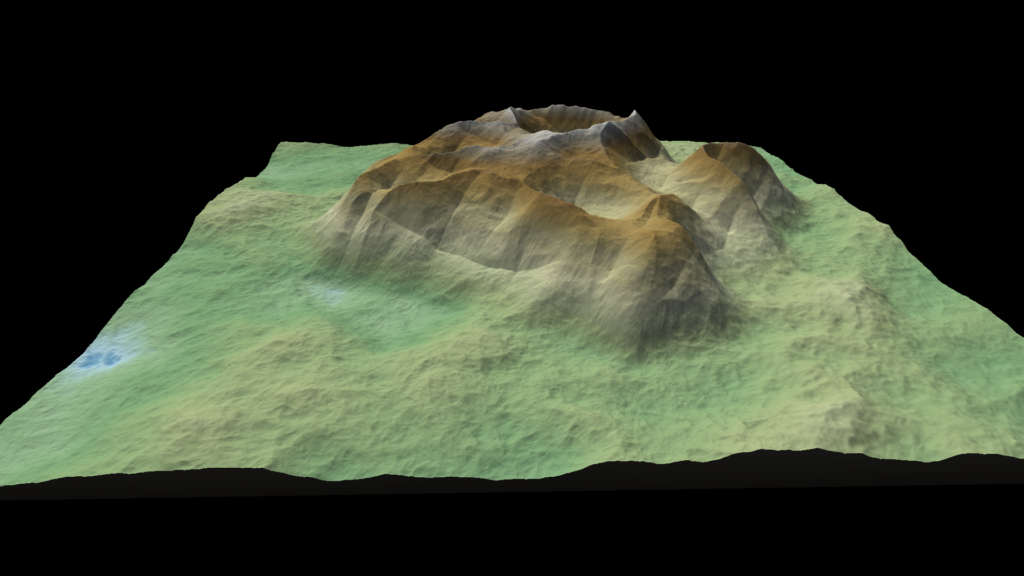

The 3D world is managed by terrain generation software that is controlled through Python APIs. The software uses algorithms such as Perlin noise to generate terrain procedurally, which increases the likelihood that each landscape is unique even if two different users perform the same gestures and movements.
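To give a feel for how Perlin noise produces varied terrain, the following is a minimal pure-Python sketch of 2D gradient noise with a seeded permutation table. It is not the terrain software's implementation; `make_perlin`, `heightmap` and the idea of a per-session seed are assumptions made for illustration.

```python
import math
import random


def make_perlin(seed: int):
    """Return a 2D Perlin-style noise function driven by a seeded permutation."""
    rng = random.Random(seed)
    perm = list(range(256))
    rng.shuffle(perm)
    perm += perm  # duplicate the table so lookups never run off the end

    def fade(t):
        # Perlin's quintic smoothing curve: 6t^5 - 15t^4 + 10t^3
        return t * t * t * (t * (t * 6 - 15) + 10)

    def lerp(a, b, t):
        return a + t * (b - a)

    def grad(h, x, y):
        # Dot product of the offset (x, y) with one of 8 unit gradients.
        angle = (h % 8) * math.pi / 4
        return math.cos(angle) * x + math.sin(angle) * y

    def noise(x, y):
        xi, yi = math.floor(x), math.floor(y)
        xf, yf = x - xi, y - yi  # position inside the lattice cell
        xi &= 255
        yi &= 255
        u, v = fade(xf), fade(yf)
        # Hash the four corners of the cell.
        aa = perm[perm[xi] + yi]
        ab = perm[perm[xi] + yi + 1]
        ba = perm[perm[xi + 1] + yi]
        bb = perm[perm[xi + 1] + yi + 1]
        # Blend the corner contributions along x, then along y.
        x1 = lerp(grad(aa, xf, yf), grad(ba, xf - 1, yf), u)
        x2 = lerp(grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1), u)
        return lerp(x1, x2, v)

    return noise


def heightmap(size: int, seed: int, scale: float = 0.1):
    """Sample a size x size grid of noise values as a simple heightmap."""
    noise = make_perlin(seed)
    return [[noise(c * scale, r * scale) for c in range(size)]
            for r in range(size)]
```

The same seed always reproduces the same heightmap, while a different seed reshuffles the gradient table. Seeding each session differently is one plausible way to make landscapes differ even when the gestures are identical.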

Student: Luke Pullicino

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr Josef Bajada

Co-supervisor: Dr Trevor Borg