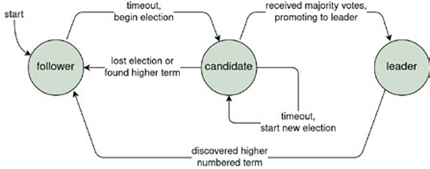

Testing systems designed to run in a distributed-network setting may be described as difficult at best. Writing test cases for all possible scenarios for such an architecture is virtually impossible, due to the random nature of the computer network. One must consider message delays, drops and duplications, network partitions and worst of all, random combinations of these events. Run-time verification (RV) may instead be considered to determine the correctness of a software system within such an environment. RV refers to the tracing of events and process flow by means of a ‘monitor’ to determine whether the observed behaviour is complicit with defined invariants. This is how Graft ‒ an Elixir implementation of the Raft consensus algorithm ‒ was evaluated. Raft is an algorithm used to manage replicated logs, i.e. an event log that is duplicated across multiple servers within a computer network. It achieves this by orchestrating a cluster of machines to operate as a coherent group, capable of tolerating the failure of ‘n’ participants within a cluster of 2n + 1 machines. The orchestrator of the cluster is the leader, a server within the group elected within a time period to have full control over accepting client requests, replicating entries, applying entries when it is safe and replying back to the client when an answer has been computed. If a server is not a leader, then it is either a candidate (and waiting to receive majority votes to become leader) or a follower.

The main contribution of this project is Graft ‒ an application that could be used to set up local or distributed Raft clusters with customisable state machines. Graft’s correctness is supported through a variety of techniques, namely unit/integration testing and, previously mentioned, RV. The latter was achieved by using detectEr, which is an outline-monitoring tool for the Erlang ecosystem. Monitors were attached to servers within the Raft cluster to trace occurrences of events during the system’s run- time. This ensured that the implementation adhered to the main invariants of the Raft algorithm. A total of four distinct monitor specifications were used to verify Graft, none of which found any violations for the invariants they represented.

Student: Matthew Alan Le Brun

Course: B.Sc. IT (Hons.) Computing Science

Supervisor: Prof. Adrian Francalanza