The General Data Protection Regulation (GDPR) [1] came into effect in May 2018, giving individuals the right to request the deletion of their personal data, including closed-circuit TV (CCTV) footage. CCTV cameras are installed in shops, banks, streets and many other public spaces, and are therefore virtually ubiquitous, which makes the deletion of personal data from CCTV footage particularly problematic. Firstly, surveillance footage must be kept intact in case it is called upon as forensic evidence in court. Secondly, obfuscating a particular individual in a video requires substantial manual work: the entire footage must be reviewed, every frame in which the person or persons are visible must be found, and each of those frames must be obfuscated by hand.

Semi-automated video redaction tools are currently available commercially. Among others, the IKENA Forensic and Amped FIVE software packages [2, 3] allow the user to specify the area of interest to be obfuscated, and the object of interest is then followed throughout the footage using semi-automated tracking techniques. Although these tools facilitate the process, identifying the person of interest still requires scanning the video manually, which is highly time-consuming. Moreover, a licence for the above-mentioned tools costs thousands of euros.

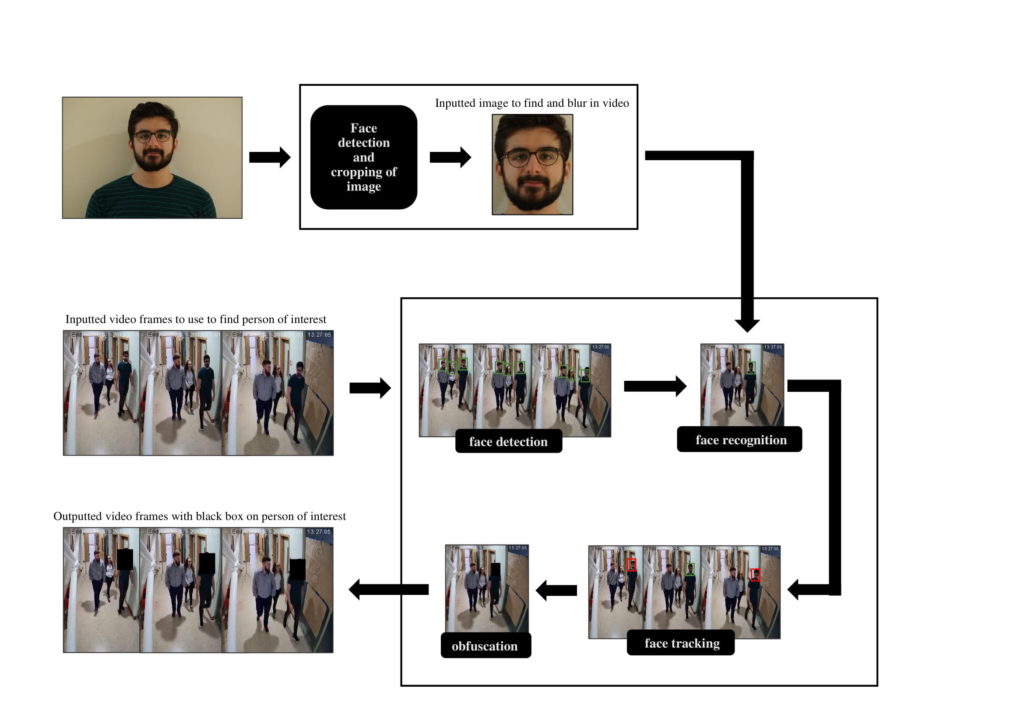

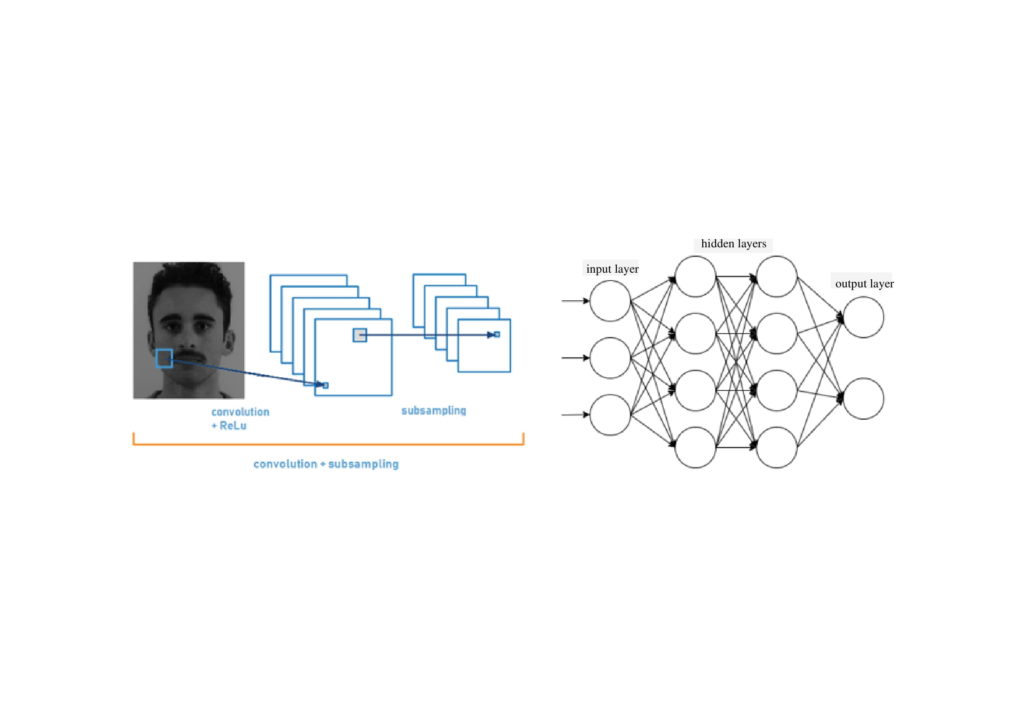

This final-year project involves the design and implementation of an open-source video redaction method that automatically detects the face of the person or persons of interest in the video footage and obfuscates it throughout the same footage. Deep learning was used to detect [4] and recognize [5] the object of interest, in preparation for face obfuscation. Dense optical flow [6] was subsequently used to track the face of the person of interest within the video, and the tracked face was then obfuscated. This method achieved an accuracy higher than 90% in an indoor environment.
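The tracking and obfuscation steps can be illustrated with a minimal sketch. It is not the project's actual implementation. It assumes that a face bounding box `(x, y, w, h)` has already been obtained from the detection and recognition stages, that the box lies fully inside the frame, and that a dense flow field of shape H x W x 2 is available for each pair of consecutive frames (for example from OpenCV's `calcOpticalFlowFarneback`). The function names `shift_box_with_flow` and `pixelate_region`, the use of the median flow vector, and the choice of pixelation as the obfuscation are all assumptions made for illustration.

```python
import numpy as np


def shift_box_with_flow(box, flow):
    """Move a face box (x, y, w, h) by the median dense-flow vector inside it.

    `flow` is an H x W x 2 array of per-pixel (dx, dy) displacements between
    two consecutive frames. The box is assumed to lie inside the frame.
    """
    x, y, w, h = box
    frame_h, frame_w = flow.shape[:2]
    region = flow[y:y + h, x:x + w].reshape(-1, 2)
    # The median is less sensitive than the mean to background pixels
    # that fall inside the box but do not move with the face.
    dx, dy = np.median(region, axis=0)
    # Clamp so that the shifted box stays fully inside the frame.
    new_x = int(np.clip(np.rint(x + dx), 0, frame_w - w))
    new_y = int(np.clip(np.rint(y + dy), 0, frame_h - h))
    return new_x, new_y, w, h


def pixelate_region(frame, box, block=8):
    """Return a copy of `frame` with the box replaced by coarse colour blocks."""
    x, y, w, h = box
    out = frame.copy()
    roi = out[y:y + h, x:x + w]  # view into `out`, so writes modify the copy
    for by in range(0, h, block):
        for bx in range(0, w, block):
            cell = roi[by:by + block, bx:bx + block]
            # Replace every pixel in the cell with the cell's mean colour.
            cell[...] = cell.mean(axis=(0, 1))
    return out


if __name__ == "__main__":
    # Synthetic example: every pixel moves 3 px right and 2 px up.
    flow = np.zeros((40, 60, 2), dtype=np.float32)
    flow[..., 0] = 3.0
    flow[..., 1] = -2.0
    frame = np.random.default_rng(0).integers(0, 256, (40, 60, 3), dtype=np.uint8)

    box = shift_box_with_flow((10, 10, 8, 8), flow)
    redacted = pixelate_region(frame, box)
    print(box, redacted.shape)
```

In a full pipeline, the box would be re-initialised periodically from the detector and recognizer, since a box propagated purely by optical flow tends to drift over long sequences.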

A video demo of the project can be found through the following link: https://youtu.be/1SeYgnt1Ny8

References/Bibliography:

[1] European Parliament, Council of the European Union. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation), 2016.

[2] IKENA Software. “MotionDSP – Spotlight Software.” motiondsp.com. https://www.motiondsp.com/ (accessed May 6, 2020).

[3] Amped Software. “Amped FIVE | Forensic Video and Image Enhancement.” ampedsoftware.com. https://ampedsoftware.com/five (accessed May 6, 2020).

[4] J. Redmon and A. Farhadi. YOLO9000: Better, Faster, Stronger. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, 2017, pp. 6517-6525, doi: 10.1109/CVPR.2017.690.

[5] O. M. Parkhi, A. Vedaldi and A. Zisserman. Deep Face Recognition. In X. Xie, M. W. Jones and G. K. L. Tam, editors, Proceedings of the British Machine Vision Conference (BMVC), BMVA Press, September 2015, pp. 41.1-41.12.

[6] S. Aslani and H. Mahdavi-Nasab. Optical Flow Based Moving Object Detection and Tracking for Traffic Surveillance. In 5th Iranian Conference on Electrical and Electronics Engineering, 2013.

Student: Dejan Aquilina

Course: B.Sc. IT (Hons.) Computer Engineering

Supervisor: Dr. Ing. Reuben Farrugia