The automated analysis and inference of human behavioural traits by machine interfaces is a growing field of research. Research on the detection and classification of deceptive traits, however, remains relatively scarce. Various studies [1, 3] have established that combining multi-modal information (e.g. acoustic analysis of speech and visual lip-reading) can enhance the performance of speech recognition systems, which have traditionally relied exclusively on acoustic data. Similarly, analysing speech together with body language and facial patterns could provide information on traits such as emotional state, or whether a speaker is trying to deceive an audience or interlocutor.

Deception is an intriguing concept because humans often misunderstand it. As with sarcasm, understanding deception requires experience, yet there is no absolute and objective metric to determine whether someone is being deceptive. Sen et al. [1] developed the automated dyadic data recorder (ADDR) framework, which led to the first comprehensive deception database composed of audio-visual interviews between witnesses and interrogators. Although this was not the first work in the field, previous datasets were either inaccessible or not optimised for machine learning (ML) techniques and tasks [1].

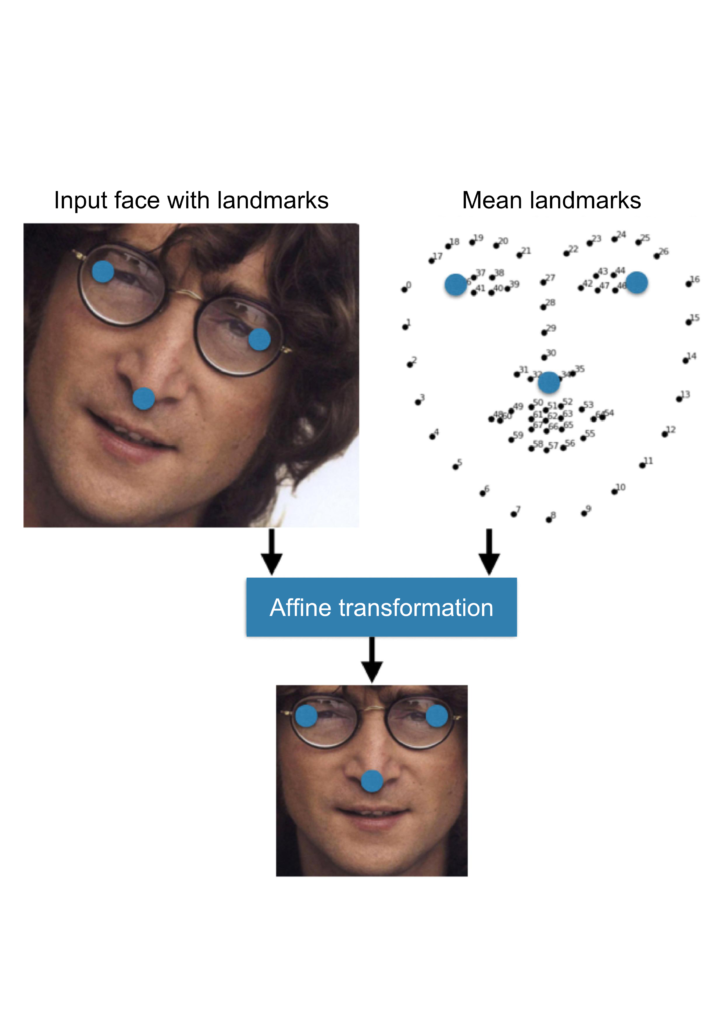

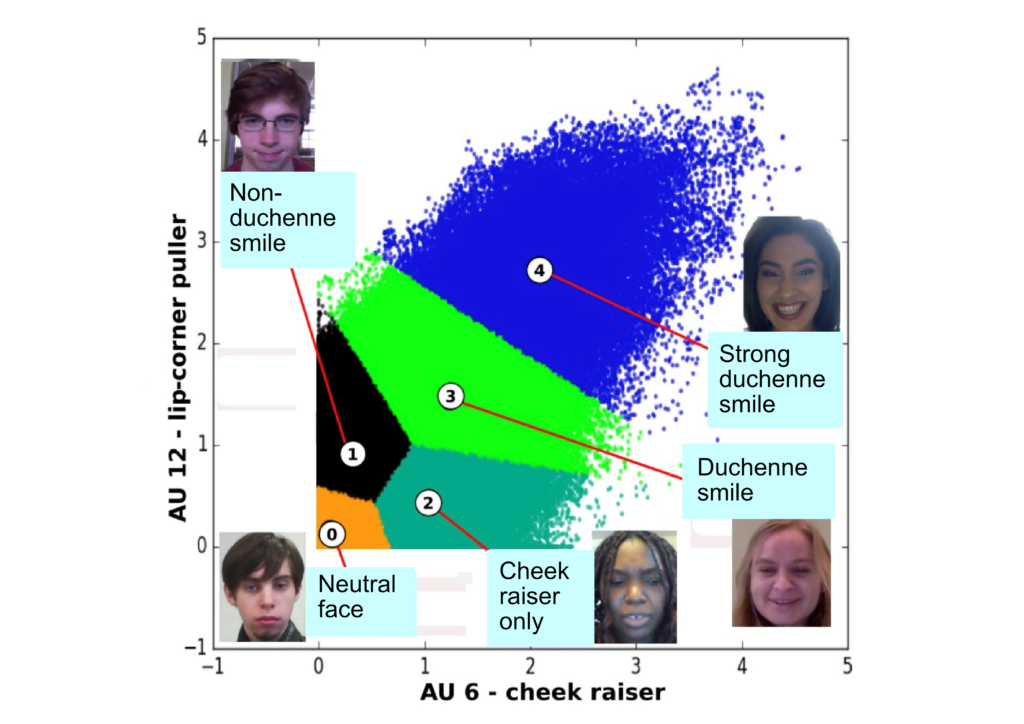

This project investigates the application of ML techniques to detecting deceptive actions and capturing the traits that characterise them. Several ML models were explored, and their hyperparameters were tuned with Bayesian optimisation. The best-performing configurations informed the design of the final classifier. The proposed system is a deep neural network with recurrent long short-term memory (LSTM) cells, fed with aligned audio and OpenFace [2] features.
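
The abstract does not specify how the audio and OpenFace features are aligned. As a minimal sketch of one possible scheme, the snippet below averages the audio feature frames that fall within each video frame's time window and concatenates the result with that frame's visual features. The function name `align_features`, the frame rates, and the toy feature values are all hypothetical and are not taken from the project.

```python
def align_features(audio, video, audio_rate, video_rate):
    """Pair each video frame with the mean of the audio frames overlapping it.

    audio, video: lists of per-frame feature vectors (lists of floats).
    audio_rate, video_rate: integer frame rates in frames per second.
    Returns one concatenated vector [visual..., mean audio...] per video frame.
    """
    aligned = []
    for i, visual in enumerate(video):
        # Integer arithmetic avoids floating-point drift in the window bounds.
        lo = (i * audio_rate) // video_rate
        hi = ((i + 1) * audio_rate) // video_rate
        if hi == lo:
            hi = lo + 1  # guarantee at least one audio frame per window
        window = audio[lo:hi]
        if not window:
            break  # the audio stream ended before the video stream
        dims = len(window[0])
        mean_audio = [sum(frame[d] for frame in window) / len(window)
                      for d in range(dims)]
        aligned.append(list(visual) + mean_audio)
    return aligned


# Hypothetical toy data: 10 audio frames at 100 Hz, 3 video frames at 30 fps.
audio = [[float(t), 2.0 * t] for t in range(10)]
video = [[0.1], [0.2], [0.3]]
sequence = align_features(audio, video, audio_rate=100, video_rate=30)
```

Each element of `sequence` is then one time step of the kind of input that a recurrent model such as an LSTM consumes. Other alignment strategies, such as interpolation or nearest-frame matching, would be equally plausible.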

This study shows that a machine can capture and analyse deceptive traits with an adequate degree of confidence, achieving an area under the ROC curve (AUC) of 0.607. The existence of such an ML classifier suggests that some pattern or latent model underlies deceptive actions.
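
To help interpret the reported score: the ROC AUC equals the probability that a randomly chosen positive (here, deceptive) sample receives a higher classifier score than a randomly chosen negative (truthful) one, so 0.5 corresponds to chance and 1.0 to perfect ranking. The sketch below computes it directly from that definition. The labels and scores are invented for illustration and are not the project's data.

```python
def roc_auc(labels, scores):
    """ROC AUC as the fraction of positive/negative pairs ranked correctly.

    labels: 1 for the positive class, 0 for the negative class.
    scores: classifier outputs, where higher means more likely positive.
    Tied pairs count as half a correct ranking.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = deceptive, 0 = truthful) and classifier scores.
labels = [1, 0, 1, 0, 1, 0]
scores = [0.9, 0.4, 0.35, 0.6, 0.7, 0.2]
auc = roc_auc(labels, scores)  # 7 of the 9 pairs are ranked correctly
```

This pairwise form is quadratic in the number of samples. It is meant to make the definition concrete, not to replace a library implementation.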

References/Bibliography:

[1] T. Sen, M. K. Hasan, Z. Teicher, and M. E. Hoque, “Automated dyadic data recorder (ADDR) framework and analysis of facial cues in deceptive communication,” 2017.

[2] B. Amos, B. Ludwiczuk, and M. Satyanarayanan, “OpenFace: A general-purpose face recognition library with mobile applications,” Technical report CMU-CS-16-118, CMU School of Computer Science, 2016.

[3] T. Sen, M. Hasan, M. Tran, Y. Yang, and E. Hoque, “Say cheese: Common human emotional expression set encoder and its application to analyze deceptive communication,” pp. 357–364, 05 2018.

Student: Braden Refalo

Course: B.Sc. IT (Hons.) Artificial Intelligence

Supervisor: Dr. Andrea De Marco

Co-supervisor: Dr. Claudia Borg