A light field array consists of multiple cameras that capture different views of the same scene at the same time. The frames captured can be merged together to form a basic 3D model of the captured object. Frame synchronization is necessary to ensure that all the cameras capture the scene at the same instant.

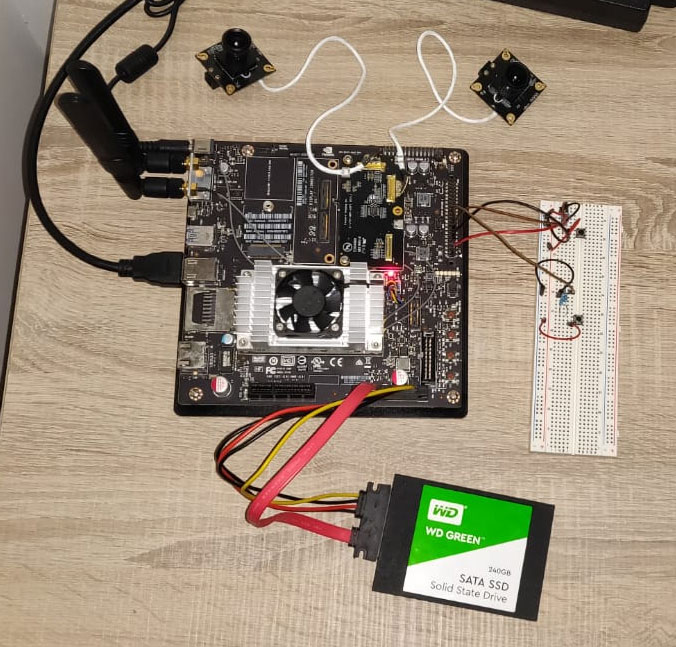

The aim of this project was to implement a multi-camera system based on an NVIDIA Jetson TX2 embedded computing module. The CMOS cameras used were from Leopard Imaging, with the MIPI interface used to trigger the cameras. The available cameras and adapter board do not support frame synchronization in hardware, so the project aimed to minimise the timing discrepancy between the cameras as far as possible.

Several tests were conducted to compare alternative ways of triggering the captures, to verify that the final system functions correctly, and to determine the synchronization error between the frames obtained from each camera. Furthermore, a user interface with physical buttons was implemented using the GPIO module provided on the Jetson TX2 development kit, making the system portable and user-friendly.

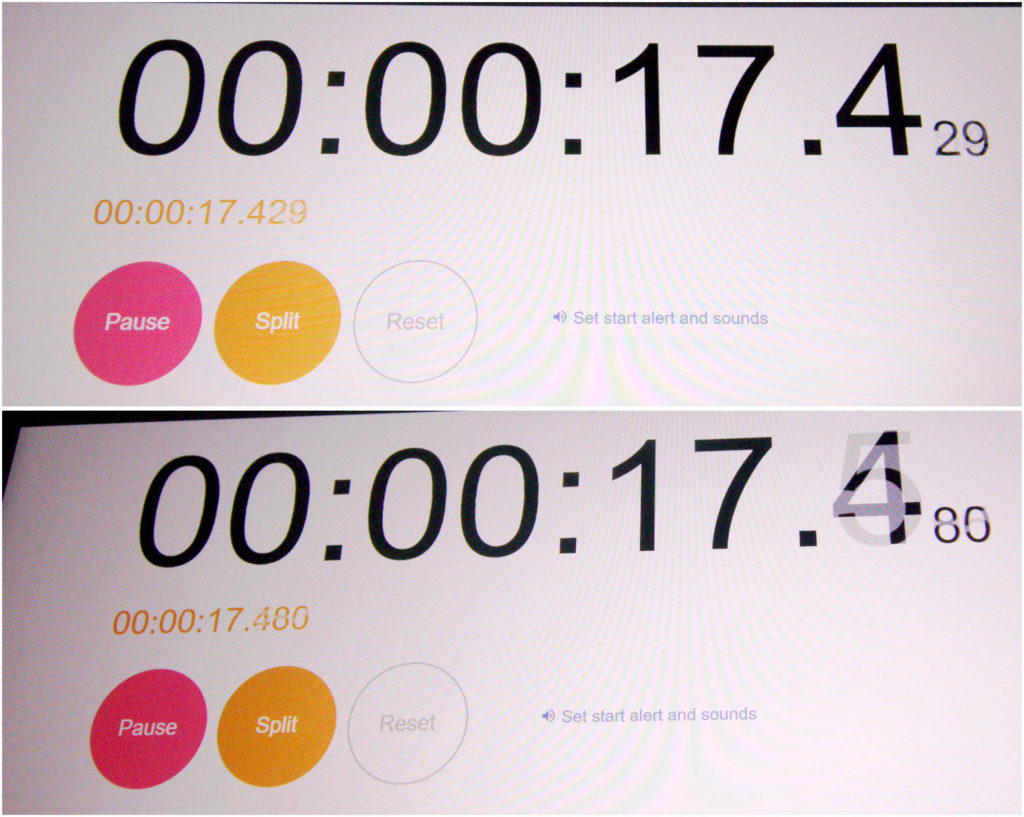

The tests were performed by capturing frames of two types of clock animation. First, images of an analogue clock were captured, where the discrepancy between the frames was indicated by the difference in the position of the second hand: the smaller this difference, the smaller the synchronization error between the camera modules. A second set of tests was conducted by capturing frames of a digital stopwatch. In this case, the synchronization error was obtained by calculating the difference between the times shown in the frames. Furthermore, where possible, the software also recorded the kernel timestamps of the captured frames, and the difference between these timestamps gives a direct estimate of the synchronization error between the cameras. Each test was repeated up to ten times and the average synchronization error was calculated.
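The error calculations described above can be illustrated with a minimal Python sketch. It is not the project's actual code: the function names and the sample timestamps are hypothetical, and it assumes that the error for one capture is taken as the spread between the earliest and latest timestamp across the cameras. For the analogue clock, it assumes the second hand moves 6 degrees per second.

```python
from statistics import mean


def sync_error(timestamps):
    """Spread (in seconds) between the earliest and latest capture
    time recorded by the cameras for a single trigger."""
    return max(timestamps) - min(timestamps)


def second_hand_error(angle_a_deg, angle_b_deg):
    """Convert the difference between two second-hand angles into seconds.

    Assumes a second hand that sweeps 360 degrees in 60 seconds,
    i.e. 6 degrees per second, and handles wrap-around at 12 o'clock.
    """
    diff = abs(angle_a_deg - angle_b_deg) % 360.0
    diff = min(diff, 360.0 - diff)
    return diff / 6.0


def average_sync_error(trials):
    """Mean synchronization error over repeated captures."""
    return mean(sync_error(t) for t in trials)


# Hypothetical timestamps (seconds) from three cameras over two captures.
trials = [
    [10.000, 10.004, 10.002],
    [11.500, 11.501, 11.507],
]

if __name__ == "__main__":
    print(f"average error: {average_sync_error(trials) * 1000:.1f} ms")
    print(f"analogue clock error: {second_hand_error(354.0, 6.0):.1f} s")
```

Taking the maximum spread is only one possible definition. With more than two cameras, a pairwise or per-camera offset from a reference camera could be reported instead.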

References/Bibliography:

[1] “Light fields and computational photography”, Graphics.stanford.edu, 2020. [Online]. Available: http://graphics.stanford.edu/projects/lightfield/.

Student: Kyle Borg

Course: B.Sc. IT (Hons.) Computer Engineering

Supervisor: Prof. Johann A. Briffa