This project aimed to develop a sensor glove that processes hand gestures and translates them into the corresponding letters of the American Sign Language (ASL) alphabet. This would allow a deaf person to convey a message using the ASL alphabet, without requiring the person at the receiving end to be conversant with ASL.

The glove incorporates a number of sensors that respond to even slight bending of each finger. Flex sensors were the optimum choice for this function in view of their long lifespan, durability and ease of integration, given that their resistance changes in proportion to the bending of the sensor [1]. Ten flex sensors were used in this project so that bending at either of the two main joints of each finger could be detected.
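The relationship between resistance and bending can be illustrated with a minimal sketch. It assumes that each flex sensor forms the upper leg of a voltage divider read by a 10-bit analogue-to-digital converter, and that the angle is obtained by linear interpolation between a flat and a fully bent resistance. The resistor value, the two calibration resistances and the function names are hypothetical and are not taken from the project.

```cpp
#include <algorithm>

// Hypothetical calibration values, chosen for illustration only.
constexpr double kFixedOhms = 47000.0;   // lower resistor of the divider
constexpr double kFlatOhms  = 25000.0;   // sensor resistance when flat
constexpr double kBentOhms  = 100000.0;  // sensor resistance at 90 degrees

// Divider: Vcc -> flex sensor -> ADC node -> fixed resistor -> GND.
// adc / 1023 = Rfixed / (Rflex + Rfixed), so Rflex = Rfixed * (1023 / adc - 1).
double flexResistance(int adc) {
    adc = std::max(1, std::min(1023, adc));  // avoid division by zero
    return kFixedOhms * (1023.0 / adc - 1.0);
}

// Linear map from resistance to bend angle, limited to 0..90 degrees.
double bendAngleDegrees(int adc) {
    double r = flexResistance(adc);
    double angle = (r - kFlatOhms) / (kBentOhms - kFlatOhms) * 90.0;
    return std::max(0.0, std::min(90.0, angle));
}
```

With these assumed values, a reading of 450 corresponds to a bend of roughly 42 degrees. In practice each of the ten sensors would need its own calibration pair.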

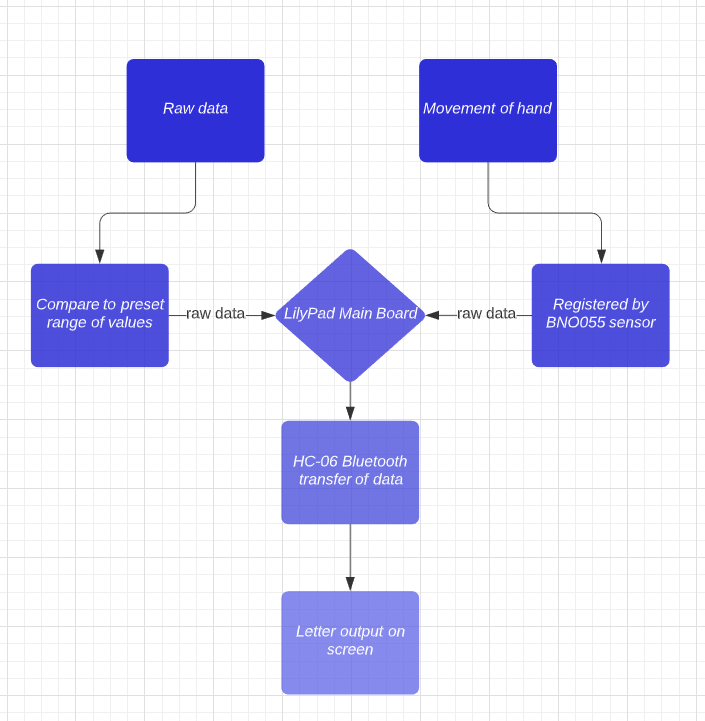

The glove was also required to detect hand orientation. For this purpose, the BNO055 sensor was used. This enables the system to distinguish between two letters that employ the same finger formation, but with a different orientation. The same sensor was also used to detect any linear acceleration caused by the signing of an arch, which represents the repetition of a letter. The BNO055 was chosen since it interfaces seamlessly with the LilyPad Arduino Main Board microcontroller. Using the appropriate software libraries, the BNO055 provides the necessary orientation and linear acceleration values, which are then processed by the LilyPad [2].
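The two uses of the orientation sensor described above can be sketched as follows. This is an illustration under stated assumptions rather than the project's code: the `ImuSample` structure, both thresholds and the function names are hypothetical, and the pairing of 'K' and 'P' in the usage note is only an example of two ASL letters that share a handshape.

```cpp
#include <cmath>

// One reading from the orientation sensor (hypothetical layout).
struct ImuSample {
    double pitchDeg;    // Euler pitch angle in degrees
    double ax, ay, az;  // linear acceleration in m/s^2, gravity removed
};

// Hypothetical thresholds, chosen for illustration only.
constexpr double kPitchDownDeg = -45.0;  // hand counts as pointing down below this
constexpr double kRepeatAccel  = 3.0;    // acceleration magnitude marking a repeat

// Choose between two letters that share a finger formation.
char resolveByOrientation(char upright, char pointingDown, const ImuSample& s) {
    return s.pitchDeg < kPitchDownDeg ? pointingDown : upright;
}

// Flag a repetition when the linear acceleration magnitude exceeds the threshold.
bool isRepeatGesture(const ImuSample& s) {
    double magnitude = std::sqrt(s.ax * s.ax + s.ay * s.ay + s.az * s.az);
    return magnitude > kRepeatAccel;
}
```

For example, `resolveByOrientation('K', 'P', sample)` would return 'P' only when the hand is pitched downwards beyond the assumed threshold.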

The code was executed on the LilyPad microcontroller and translated the sensor data into the corresponding letter, which was output on the Arduino IDE console. Data transmission from the LilyPad to the PC was handled by the HC-06 Bluetooth module, whereas power was provided by an armband-mounted battery, thus ensuring wireless operation.
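One possible way to translate sensor data into a letter is to compare the ten bend angles against a stored formation for each letter and accept the first one that matches within a tolerance. The sketch below assumes this approach; the template angles, the sensor ordering, the tolerance and all names are hypothetical and do not reproduce the project's values.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

constexpr std::size_t kSensors = 10;
using Pose = std::array<double, kSensors>;  // bend angle per sensor, in degrees

struct LetterTemplate {
    char letter;
    Pose angles;  // expected bend angle for each sensor
};

// Illustrative templates only; the angles are made up, not measured.
const LetterTemplate kDemoTemplates[] = {
    {'A', {{10.0, 5.0, 90.0, 90.0, 90.0, 90.0, 90.0, 90.0, 90.0, 90.0}}},
    {'B', {{60.0, 60.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0}}},
};
constexpr std::size_t kDemoCount =
    sizeof(kDemoTemplates) / sizeof(kDemoTemplates[0]);

// Return the first letter whose template matches every sensor within
// toleranceDeg, or '?' when no template matches.
char classify(const Pose& reading, const LetterTemplate* templates,
              std::size_t count, double toleranceDeg) {
    for (std::size_t i = 0; i < count; ++i) {
        bool match = true;
        for (std::size_t j = 0; j < kSensors; ++j) {
            if (std::fabs(reading[j] - templates[i].angles[j]) > toleranceDeg) {
                match = false;
                break;
            }
        }
        if (match) return templates[i].letter;
    }
    return '?';
}
```

Returning '?' until every sensor falls within tolerance means that no letter is output while the fingers are still moving into position.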

To test the accuracy of the system, the glove was used to sign each of the twenty-six letters ten times. Nineteen of the twenty-six letters were displayed correctly in every attempt, without any delay or false reading.

Three letters (‘C’, ‘P’ and ‘W’) were each detected incorrectly in one out of ten attempts, whereas the letter ‘J’ was incorrectly output as the letter ‘I’ in four out of ten attempts. There were also nine instances where the output letter (‘I’, ‘U’, ‘V’ or ‘W’) was delayed by a few seconds until the fingers attained the predetermined formation. Most of these issues could be attributed to slight movements of the flex sensors relative to the glove fabric, resulting in variations in the angle of the bend even when the same letter was being signed.
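The reported figures can be combined into an overall rate with a short calculation. The sketch below assumes that "10% of the time" means one attempt in ten for each of the three letters, and that delayed outputs were eventually correct; the structure and function names are hypothetical.

```cpp
// Summary of the test run, built from the figures reported above.
struct Tally {
    int trials;   // total signing attempts
    int wrong;    // attempts that produced the wrong letter
    int delayed;  // attempts where the letter appeared late
};

constexpr int kLetters = 26;
constexpr int kAttemptsPerLetter = 10;

Tally tallyResults() {
    Tally t;
    t.trials  = kLetters * kAttemptsPerLetter;  // 26 letters x 10 attempts
    t.wrong   = 3 * 1 + 4;  // 'C', 'P', 'W' once each, 'J' four times
    t.delayed = 9;          // delayed outputs, assumed eventually correct
    return t;
}

// Percentage of attempts that produced the correct letter.
double correctPercent(const Tally& t) {
    return 100.0 * (t.trials - t.wrong) / t.trials;
}
```

Under these assumptions, 253 of the 260 attempts produced the correct letter, which is approximately 97.3%.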

References/Bibliography:

[1] “Flex Sensor Datasheet,” Spectra Symbol. [Online]. Available: https://www.sparkfun.com/datasheets/Sensors/Flex/flex22.pdf. [Accessed 12 December 2019].

[2] Bosch, “BNO055 Intelligent 9-axis absolute orientation sensor,” Bosch Sensortec, November 2014. [Online]. Available: https://cdn-shop.adafruit.com/datasheets/BST_BNO055_DS000_12.pdf. [Accessed November 2019].

Student: Lauri Anastasia

Course: B.Sc. IT (Hons.) Computer Engineering

Supervisor: Prof. Ivan Grech

Co-supervisor: Prof. Ing. Victor Buttigieg