With the rise of digital media, more and more individuals are turning to online sources for their daily news. However, the sheer volume of video content available can be overwhelming. To counteract this, news videos could be analysed automatically using artificial intelligence (AI) techniques, delivering relevant information to viewers in less time.

This project consisted of developing a program that analyses news videos automatically, using computer-vision techniques to extract information about the individuals who appear in them: their names, the timestamps at which they appear, and how long they remain visible. The program was designed to generate a timeline report from the extracted data.
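The step from extracted data to a timeline report can be illustrated with a minimal sketch. It assumes, purely for illustration, that the analysis yields one `(frame_index, name)` pair for every frame in which a person is recognised; the function names `build_timeline` and `total_duration`, and the `max_gap_frames` parameter, are hypothetical and are not taken from the project.

```python
from collections import defaultdict


def build_timeline(detections, fps, max_gap_frames=1):
    """Merge per-frame detections into (start_s, end_s) intervals per name.

    detections: iterable of (frame_index, name) pairs (assumed input format).
    fps: frames per second of the analysed video.
    max_gap_frames: a gap larger than this between two detections of the
        same name starts a new interval (illustrative parameter).
    """
    frames_by_name = defaultdict(list)
    for frame_index, name in detections:
        frames_by_name[name].append(frame_index)

    timeline = {}
    for name, frames in frames_by_name.items():
        frames = sorted(set(frames))
        intervals = []
        start = prev = frames[0]
        for frame in frames[1:]:
            if frame - prev > max_gap_frames:
                # Close the current interval at the end of its last frame.
                intervals.append((start / fps, (prev + 1) / fps))
                start = frame
            prev = frame
        intervals.append((start / fps, (prev + 1) / fps))
        timeline[name] = intervals
    return timeline


def total_duration(intervals):
    """Total visible time in seconds across a list of intervals."""
    return sum(end - start for start, end in intervals)
```

Summing the intervals for each name gives per-person totals of the kind reported in Figure 2, while the intervals themselves supply the timestamps for the timeline.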

One of the key features of this program is its ability to learn new faces on the fly, continually updating its facial-recognition database. Users can also supply a custom facial database, so that the program can match faces against known individuals from the start while still adding new ones. During analysis, the program also extracts the names of the individuals appearing in the news video, so that each face can be paired with a name.
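One common way to learn faces on the fly is to compare each new face encoding with the stored ones and to register it as a new entry when nothing is close enough. The sketch below illustrates that idea under stated assumptions: encodings are plain lists of floats, similarity is Euclidean distance, and the `0.6` threshold, the `FaceDatabase` class and the `unknown_N` placeholder labels are all hypothetical. The source does not specify how the project's matching works, and name extraction itself is not covered here.

```python
import math


class FaceDatabase:
    """Illustrative face store that grows as unseen faces appear."""

    def __init__(self, known=None, threshold=0.6):
        # known: optional mapping of label -> encoding (a custom database).
        self.known = dict(known or {})
        self.threshold = threshold  # assumed distance cut-off
        self._next_id = 1

    def identify(self, encoding):
        """Return the label of the closest known face, or learn a new one."""
        best_label, best_dist = None, float("inf")
        for label, reference in self.known.items():
            dist = math.dist(encoding, reference)
            if dist < best_dist:
                best_label, best_dist = label, dist

        if best_label is not None and best_dist <= self.threshold:
            return best_label

        # No close match: store the face under a placeholder label.
        label = f"unknown_{self._next_id}"
        self._next_id += 1
        self.known[label] = list(encoding)
        return label

    def rename(self, old_label, new_label):
        """Pair a stored face with a name, e.g. one extracted from the video."""
        self.known[new_label] = self.known.pop(old_label)
```

Passing a dictionary to the constructor corresponds to starting from a custom database, and `rename` stands in for the moment a face is paired with a name.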

The program also provides a user-friendly interface through which users can input news videos and generate reports easily. Through the same interface, users can export customised facial databases for future use.

The program was evaluated on a variety of news videos taken from the TVM website. Its output was compared against manual annotations of the videos to measure its accuracy and efficiency, and to confirm that it performed as intended and provided comprehensive information.
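The source does not state which metric was used for this comparison. As one plausible option, the sketch below scores a person's predicted on-screen intervals against the manually annotated ones using temporal intersection over union; the function names are hypothetical, and each list is assumed to contain non-overlapping `(start_s, end_s)` intervals.

```python
def overlap_seconds(predicted, annotated):
    """Total overlap in seconds between two lists of intervals.

    Each list is assumed to hold non-overlapping (start_s, end_s) pairs.
    """
    total = 0.0
    for p_start, p_end in predicted:
        for a_start, a_end in annotated:
            total += max(0.0, min(p_end, a_end) - max(p_start, a_start))
    return total


def temporal_iou(predicted, annotated):
    """Intersection over union of predicted and annotated screen time."""
    intersection = overlap_seconds(predicted, annotated)
    predicted_total = sum(end - start for start, end in predicted)
    annotated_total = sum(end - start for start, end in annotated)
    union = predicted_total + annotated_total - intersection
    # Two empty timelines agree perfectly by convention.
    return intersection / union if union > 0 else 1.0
```

A score of 1.0 means the predicted and annotated intervals coincide exactly, and 0.0 means they never overlap.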

The proposed program could make a significant contribution to the work of media professionals and researchers who rely on accurate and comprehensive information from news videos. By automating the analysis of news videos, it could help reduce errors, save time, and enable more informed decision-making in a variety of contexts.

Figure 1. Sample program analysis frame that includes: scene number, identified name (‘Matthew Balzan’), current facial recognition match, and face location indicated through a bounding box; here the facial-recognition process has successfully matched the identified name with the corresponding face

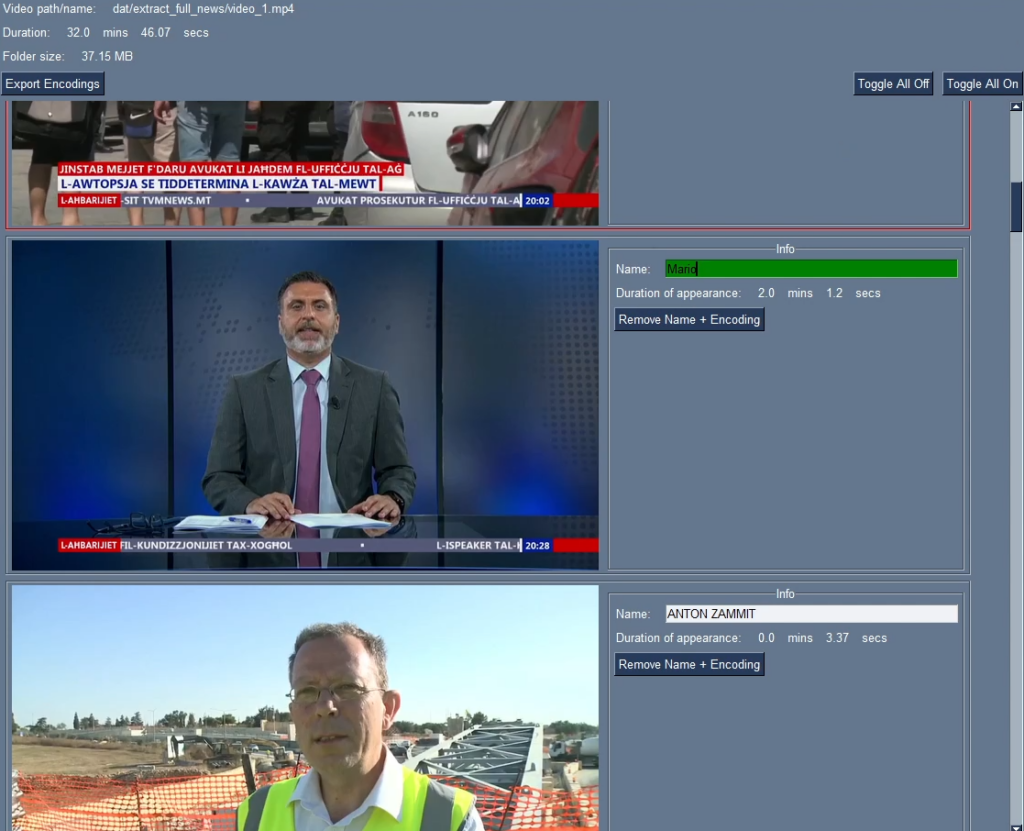

Figure 2. The results of a video analysis, including the video’s duration and the time durations during which ‘Mario’ (2 minutes and 12 seconds) and ‘Anton Zammit’ (3 seconds) are visible; the ‘Export Encodings’ tab allows users to export a face database for any individuals identified in the analysis

Student: Jonathan Attard

Supervisor: Dr Dylan Seychell