With the increasing prevalence of film and video games, more and more crossovers have occurred between the two digital forms. One challenge has always been to devise a method that would procedurally generate a relevant game, given any selected film. Advancements in artificial intelligence (AI) technology could provide a solution to a problem which, to date, may have seemed insurmountable.

The aim of the project was to test the above hypothesis and to confirm the supposition that, given the appropriate resources, it would be possible to develop an application that would fully convert any input film into a representative video game. The project provides a generalised presentation of how, relying on computer vision principles [1][2][3] and audio recognition techniques, main characters and soundtracks could be dynamically extracted from films and adapted to produce a relevant video game. It proposes a system that would scan through a user-selected film, extracting the characters [3], enemies, allies, backgrounds and musical scores with a view to adapting the film into a game in real time, thus offering a tool that would create a relevant game with as little end-user involvement as possible.

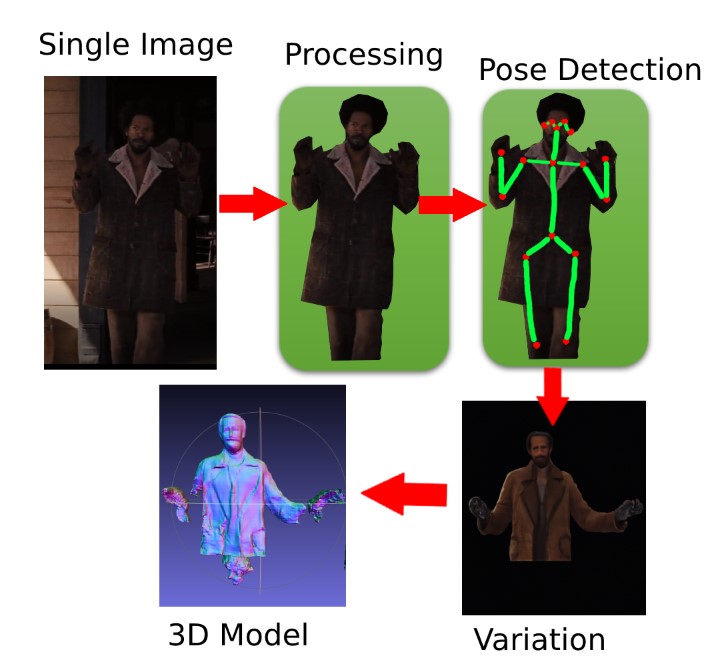

The method devised entailed: taking slices from the chosen film for the purpose of extracting assets; identifying and recompiling music tracks; selectively choosing clear and uncluttered backgrounds; and detecting and identifying main characters. It was possible to identify the main characters accurately over 90% of the time. Subsequently, through preprocessing, it was possible to generate 3D models, which formed the basic assets for the video game.
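The slicing and main-character steps can be illustrated with a minimal sketch. It assumes that a detector (not shown) has already assigned an identity label to each character found in every sampled frame, and that the main character is simply the identity appearing in the most frames. The function names, the fixed sampling stride and the label format are hypothetical and are not taken from the project itself.

```python
from collections import Counter


def slice_indices(total_frames, step):
    """Return the frame indices sampled at a fixed stride (assumed slicing scheme)."""
    return list(range(0, total_frames, step))


def main_characters(detections_per_frame, top_k=1):
    """Rank identities by the number of sampled frames in which they appear.

    detections_per_frame: one collection of identity labels per sampled frame,
    as produced by some upstream character detector (hypothetical input format).
    """
    counts = Counter()
    for identities in detections_per_frame:
        # Count each identity at most once per frame, so a duplicated
        # detection inside a single frame does not inflate its frequency.
        counts.update(set(identities))
    return [identity for identity, _ in counts.most_common(top_k)]


# Example with made-up labels for four sampled frames.
frames = [
    {"hero", "villain"},
    {"hero"},
    {"hero", "sidekick"},
    {"villain"},
]
print(slice_indices(10, 4))
print(main_characters(frames))
```

Counting per frame rather than per detection is a design choice in this sketch: it favours characters who persist across the film over those who are detected many times in a single crowded shot.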

The main outcome of this experiment was that the above-mentioned hypothesis was proven correct, in essence. Hence, this project proposes a new approach that could be used by game developers to improve the quality and efficiency of their work. The main limitations in the system were observed when it was tested on less-than-ideal silhouettes during the model creation process, which resulted in noise in the output.

Figure 1. The pipeline that converts the main character to a 3D model

Figure 2. Deriving the main character from appearance frequency

Student: Matteo Sammut

Supervisor: Prof. Alexiei Dingli